SGU Episode 1003


September 28th 2024

"Exploring the future of genetic preservation: Who wants to live forever?"

Skeptical Rogues

S: Steven Novella

B: Bob Novella

C: Cara Santa Maria

J: Jay Novella

E: Evan Bernstein

Quote of the Week

"You don't get rich writing science fiction. If you want to get rich, you start a religion."

L. Ron Hubbard


Intro

Voice-over: You're listening to the Skeptics' Guide to the Universe, your escape to reality.

S: Hello and welcome to the Skeptics' Guide to the Universe. Today is Wednesday, September 25th, 2024, and this is your host, Steven Novella. Joining me this week are Cara Santa Maria...

C: Howdy.

S: Jay Novella...

J: Hey guys.

S: Evan Bernstein...

E: Good evening everyone.

S: And we have a special guest, Andrea Jones-Rooy. Andrea, welcome back to the SGU.

AJR: Thank you so much. Hi, everyone.

S: Always great to have you on.

E: Hi, Andrea.

AJR: Great to be here. Always love hanging out with you all.

S: Now, Andrea, you are a political scientist, right?

AJR: It's true. Yes. Regrettably.

S: So this must be a really busy time of year for you.

AJR: Yeah. I was thinking about it. I was like, this is maybe my, Evan, you can tell me if I'm out of bounds here, but maybe my tax season. It only comes every four years, unlike Evan's, which apparently is every year according to the IRS. But it is very busy. A lot of people want to know what's going to happen. And unfortunately, I don't know. So I'll just get that out of the gates right now.

S: And how do you call yourself a scientist? How can a scientist not tell us with absolute certitude what's going to happen?

AJR: Forty percent. That's the answer. I won't tell you of what, but that's the correct answer.

S: The answer is three.

AJR: It's also a time when everyone claims to be a political scientist. I don't know if there are times, Steve, when people claim to be neurologists.

S: Everyone thinks they're an expert on whatever they've experienced in their life.

AJR: So everyone's actually telling me how the election is going to go when I don't have an answer.

E: And nobody has the answer.

S: What irritates you the most? What does the media get wrong the most?

AJR: Well, I think it's, so I was speaking at a conference a couple of days ago and they referenced politics and said, don't worry, we're not going to argue and scream. This isn't politics. And what gets me angry is that politics doesn't have to be screaming. And political science is certainly not screaming. We're a bunch of nerds looking at spreadsheets about people participating in politics. But generally speaking, this idea that because it's politics, it has to be an angry fight really stresses me out. And a lot of what I love about political science is, yes, there are things to be angry about. There are real world ramifications that I personally, as a human, am furious about. But there's also a lot that we can do to kind of take a bigger picture and say, well, what's actually working and what isn't working, and how can we improve things? And we can talk, even if we disagree, about our political views. We can actually have conversations. And it really frustrates me that people assume that you can't. And it wasn't that long ago that people who disagreed with one another could have a conversation.

S: Right.

AJR: It's easy for me to say that. I haven't spoken to someone I disagree with vehemently in a really long time.

S: I have. I've had pleasant conversations with people on the opposite end of the political spectrum from myself. Just agree that that's what you're going to do.

E: Right.

AJR: I mean, and this is also, again, the hardest part maybe about being a political scientist right now is trying to be as non, trying to separate my partisan identity from my work as a scientist.

E: Right. Yeah. How do you? Yeah.

AJR: It's difficult. Yeah. One thing that helps is that my particular area of research is about China, and I have less personally at stake. Obviously, we all have things personally at stake with what China does and how they behave and the decisions they make, but it riles me up a little bit or a lot less than, say, a particular governor doing something that I find to be egregious.

E: Andrea, I'm sorry, for clarification, are you talking about Chinese politics or how China influences U.S. politics?

AJR: I talk about Chinese politics. I study Chinese media specifically. As for the United States media, I haven't studied this. This is not my scientific take. This is my citizen take. The US media increasingly looks like just two Chinese medias fighting each other, insofar as you have media outlets that roughly say what the government or the party talking points are.

C: Yeah.

AJR: Yeah, but this time of year is very hard to separate those two. I mean, in the United States, for many years, it's been hard to separate those two. I have some friends who study, professionally, things they care about personally, so I'm very interested in things around capital punishment and abortion access, but I don't research that, and part of the reason I don't research that is I don't think I could be a particularly good scientist from an ethics perspective. But I have friends who do manage to do that, and hats off to them.

J: Yeah, right? Yeah. Andrea, you know, like, a lot of information comes out of the US government about what the government is doing?

AJR: Yes.

J: And there's just endless debates online about whether or not that data is trustworthy. Do you have any opinion on that? Like is there any insight you have about that?

AJR: Do you mean like, so like jobs data or crime data that everyone-

J: Yes, all those statistics.

AJR: Well, Jay, I'm so glad you asked because I'm actually launching a new podcast. I did not plan on plugging this, but I have a podcast about data and where it comes from. And a couple of the episodes are specifically about data from, let's say, quote unquote, the government. And I guess there is no real answer in the sense that each one of these data sets, whether it's jobs or crime or inflation or whatever, comes from, of course, imperfect series of sources, of methods, of collection, of ways of reporting, and ways of aggregating it. I mean, crime data is very, very messy and very difficult. Like a crime takes place, that doesn't mean a number shows up in a spreadsheet. It has to go through a lot of people with a lot of different incentives in order to end up in that spreadsheet, for example. And the jobs data is revised, and half the country decides that that means you throw away all the data. And I think the whole thing is, it's talked about in a frustrating way, because all data is imperfect. And basically, if the data is pointing in a direction that my political side likes, then we say, yes, it's government data. We believe it. And then if it's pointing in a direction that my political side doesn't like, we say, see, there's an error. See, they issued a correction. See, they revised these numbers. See, this was wrong. And the real answer is that, and you all know this, is that any piece of data, any study, there are going to be errors in it. We're going to update it over time. We're going to learn more and we're going to collect things better. And so the entire conversation, some of it's right and some of it's wrong. Generally, I'm inclined to roughly take seriously the broader trends. I don't think it's useful to argue over whether the increase was .01 or .02. I don't think that matters. And I think it's way too noisy to say anything specific. But we can say things like broadly, some evidence is coming out that murder went down in 2023. 
I don't think we can say much more than that as far as specific percentages, but I am relatively confident in that particular claim. But I wouldn't say much more than that. And we definitely can't say anything causal about any of this stuff.

J: Yeah, I mean, just in general, when you hear job statistics and you hear, you read about economic information, there's information that comes from the government, but then there's economists that write about it.

AJR: Right.

J: And even though they have their internal debates, there is somewhat of a consensus of what's going on and what the truth is. And I just find it very challenging to convince people who don't like the results of that data that this is the data, this is how we judge presidencies and bills and all that stuff. This is how it's done. How else can you do it if you're not looking at data that's coming from the departments that are spending that money and running these projects?

AJR: You're absolutely right, Jay, and I agree that you can't just ignore data that you don't like. And frankly, President Trump, when he was president, made the point of, oh, if we stop measuring, stop giving people COVID tests, the COVID numbers will go down. That is the perfect illustration of the fact that what's in data and what's in reality do not necessarily track exactly, right? We would have still been having COVID even if the numbers went down because we weren't testing. But you're absolutely right. I mean, there are measures that we all sort of roughly agree on. So take something like GDP, right? It's a measure of productivity in the economy, and you can compare the US GDP versus Canada's GDP, GDP per capita, all those things. It's a very messy, very imperfect, rough metric. And horrible things like terrorism and natural disasters can cause GDP to go up. And so we can make claims that seem to indicate the health of the economy that, under the hood, really don't. But it's still a useful piece of information, and it's one that has some consistency from quarter to quarter, year to year, decade to decade, roughly. So we can still learn things. My friend Dhrumil, who is a journalism professor and a researcher on polls at Columbia University, put it well to me the other day. He was like, we can learn from data. We just can't worship data. And I think that's a really nice way to summarize it. The problem, yeah, is that people pick on the one problem they have and say, therefore, we throw it all away. Or, I agree with it, therefore, we say data is capital-T truth. And both of those interpretations are incorrect.

S: Yeah, so in other words, like all of science.

AJR: Yeah, exactly.

S: We talk about it all the time. And this makes our messaging as skeptics and science communicators very, very difficult, because you say all data is imperfect. And I just went through this with a very simple question: what's the effect of net metering on... I'm not going to talk about this again, don't worry about it.

E: Our email box is full of them.

S: It ultimately came down to like, do you believe the studies or not? That's what it came down to. And of course, they're not perfect. So you can say, yeah, but this is all the data we have. And this is basically what it shows. And on the other side, they could say, yeah, but that study was done by a proponent of solar power. So I don't trust it. And this study was done by a utility. So the other side doesn't trust it, or whatever. Or you could just say, I just don't believe it, because it doesn't match what I want to believe. So it's true pretty much of everything.

C: What I love, though, is when people argue against, because data come in different forms, right? Like you were just talking about all the different ways that something can be measured and all the different ways that something can be interpreted. I would say the same thing about demographic data, but I think that demographic data in some ways is like a different animal. And I just, I love it when people just argue against demographic data in such a passionate way. And that's always amazing to me, just like these claims that are data-less. And if I just repeat it enough times, somebody is going to buy into it.

AJR: No, you can say anything, and this is true with any field as well. Politics gets us really riled up, but it's true anywhere that the louder and with more certainty you say a number or a result, the more people are inclined to believe you. Unfortunately, most people aren't reading original studies and they aren't looking at data and they haven't been trained in data literacy or critical thinking and they don't all listen to your podcast. And so really we just go off of what political scientists and many people call elite cues, which is, this guy is the one I trust. Therefore, I'll believe the numbers he says. And so if someone in a debate says this is happening and that's happening, then I'm going to believe them without questioning it. And then what was the point of the research? You know, I mean, and this happens outside of politics, too. Like, I'll do a research project and show the results. And then, yeah, even when there aren't political stakes, people are just like, nah, I don't like it. And so you just dismiss the whole thing. OK. You know, or there's the motivated reasoning, right? That every study is imperfect. But you find the flaws in the ones whose conclusions you don't like, and you gloss over the flaws in the ones whose... again, this is exactly what you all are talking about all the time. Yeah.

S: Yeah, because no scientific study is perfect. If you want to find fault with it, you can. So you have to give people an understanding, and this is where you have to get wonky, right? There's just no way around it. You've got to get down into the weeds or you've got to listen to somebody, again, that you trust who gets wonky for you. This is why scientists don't really put a lot of weight on this data, or this is why this is a consistent result that keeps coming up over and over again. We're getting pretty confident that this is telling us something about reality. People don't like talking in those squishy terms, about, it could be true, it's probably true, you know, whatever. They want more absolutes, and it's just so much simpler just to listen to the guy that agrees with your tribe.

AJR: And then, Cara, you tell me, I'm probably going to get this wrong, but then you get the dopamine hit or the adrenaline hit when they say something that makes you outraged or makes you scared or makes you whatever. And so you listen to it from like a, I don't know, a salivating dog Pavlovian kind of way, too. And Steve, you're right. I mean, humans are bad at interpreting and making sense of things like probabilities and confidence intervals and uncertainty under the best of circumstances. And nothing in our media environment right now between social media and headlines and 24-hour news and sound bites of presidents saying wild things in debates, nothing about that lends itself to thoughtful discussion of these things, you know? And even when I'm teaching it, and maybe this is just a fault on my teaching, I have 14 weeks to help students think probabilistically, and I don't know that I succeed, you know? It's not intuitive per se unless you've really had a lot of exposure to it, and even then you kind of have to be thoughtful, because we just want certainty, I think, as people.

E: Well, yeah, and we only have so much brainpower, right, to devote to these kinds of questions in our lives. We're all doing a million other things at once. Really, how much effort and time can a person reasonably put into this?

S: Well, it depends on if it's your job or not.

E: It depends on if it's your job, yeah. But for the majority of people, it is not their job.

S: It isn't, yeah. No, I agree. It's like in medicine, we spend 8 to 10 years teaching students how to think probabilistically in very complicated and nuanced ways. It's hard, and it's hard to get it right, and you fall for a lot of pitfalls. So you're dealing with several layers of things. On the one layer, our brains are good at some things and not good at other things. Like statistics, we're terrible. On the other side, we also have motivation not to be good at thinking when it doesn't lead to conclusions that we like. I don't want to give the impression that we're in a business-as-usual kind of period in American politics, because I don't think that's what you're saying, but I don't want to give that impression, because there are degrees. You know, if there's motivated reasoning, then there's motivated reasoning. I mean, there is what we would call spin, right, where there's a core of facts, and both sides try to spin reality and emphasize the facts that they prefer, et cetera. And then there's gaslighting, right? Then there's like an order of magnitude where you just chuck out reality and substitute it with a complete and utter knowing fabrication. These are not equivalent, just because they both have a strained relationship with the truth. There are orders of magnitude difference between these kind of levels of behavior. And don't you agree that we're in this, not saying this has never happened before, but we're in a period of time that is different than it was certainly 20 or 30 years ago?

AJR: Oh, for sure. And for so many reasons, too, right? I mean, the media landscape and the way we talk to each other and the way we consume and hear information is changing by the second, basically, especially with generative AI. But even in the last 10 years, I think that's changed incredibly. And yes, every other underlying force that's contributed to the various types of disagreements and polarization, whether it's between leaders and citizens and the way we talk about things and it's over issues, or it's more affective polarization where we just simply dislike the other person. It's very different, and I think, Steve, at the beginning you asked what's the most frustrating part. I think I'll update my answer to say that a lot of times when I tell people I'm a political scientist, people say, well, politics isn't a science. And it's like, well, politics isn't, but you can study politics in a scientific way. But that means assuming, to your point just now, that there is some consistency in the underlying, say, reality that we're studying, or the data-generating processes. Like, we are assuming that studies I did 10 years ago, 50 years ago, can help me understand the present moment. And in some cases they can, sort of the underlying things like what motivates someone to show up to vote, what makes someone have the political views that they do. Well, it depends a lot on their parents and where they grow up. Those sorts of things don't tend to change. But a lot of the way we're doing politics and the environment of politics has changed a lot. And so it's really an open question how much of even research from, say, the Obama or the Bush administration applies to now because it just, and again, here's me as a citizen, it just feels so different and bad. It's like what used to motivate people to vote might be totally different. And yeah, we used to all agree on underlying facts and we simply don't. 
And I don't know what that does to things we thought we knew about how humans behave politically.

S: Yeah, it's like a game where the rules can change. If you're playing by the old rules, you're going to get it wrong. You're studying it on two levels. What happens with these rules, and then how are the rules changing over time?

AJR: There's a really interesting, just one tiny example of research that I think is really interesting is from a couple of scholars, I believe they're at Syracuse, but don't quote me on that, who study the role of anxiety in politics. And I think Cara and Steve, you'd have a lot to say about this, but basically they kind of, they try to hit on this and say, look, anxiety has always played a role in politics. It's one of the things fear and other things related to anxiety do motivate us to behave in certain ways in the political sphere, whether it's voting or campaigning or what have you. And basically they say, look, the level of anxiety in the United States political discourse right now has never been higher. Is it the case that these experiments we ran even 10 years ago still apply today because you're exposed to just so much more? And we don't know. And unfortunately, the consequences of all these things are going to play out in a month and a half from now. And we're not going to have the studies about it for four years.

J: So should we be angry with you about this?

AJR: It's uh, and I do apologize for, for everything I have to say.

S: For all of American politics.

AJR: I'm so sorry. Yeah, I really botched it.

S: We're going to come back to this when you, when you talk about your new segment, but we're going to go through the regular show now.

What's the Word? (18:55)

- Pitch

S: We're going to start with Cara doing a What's the Word.

C: What's the word? I just made up that song.

AJR: I like it.

C: So I am going to talk about a word that was recommended by William Patterson from Sonoma County. He said, I think a good word to analyze is pitch. It has tons of different meanings, some of which are completely unrelated. And you are right, William, I don't think we will have time to talk about every single definition of the word pitch. But let's start by kind of digging into the etymology a little bit. Because in most of the places that I looked, the word pitch is divided into two separate etymological roots. So it's sort of like a convergent evolution thing happening with this word. The first one is the one that I think many of us are probably the least familiar with. And that is like this substance. It's sap, basically, pitch that comes from trees, it's sticky and it comes out of trees. And you also, it's everywhere, you also have another variant of that, which is this viscous material that is left over after you distill crude oil.

E: Yeah, petroleum, right?

C: Yeah, that's also called pitch. And in geology, there's pitchstone. And so these all come from an original root, really kind of going all the way back from like the Middle English to the Old English to the Latin, with the cognate in the Ancient Greek. Really across the board, the roots for this just mean the word sap or sap from a pine. You'll often see pine being utilized in the definitions. And that's where the term pitch black or pitch dark comes from. Does that make sense?

AJR: I was going to ask if that makes sense, because I never questioned it before, but yeah.

C: So that comes from that deep dark color of the sap from that pine. Now there's another etymological root, which I can't, so I'm not going to pronounce Middle English here, but like picchen or piken, that goes to the Old English, that goes down to the Germanic. And that root has to do with thrusting or fastening.

S: Like pitching a tent?

C: Or picking, yeah. So you can throw a pitch at a baseball game. You can pitch a tent by driving stakes into the ground. The distance between some objects is considered a pitch. The angle at which an object sits is considered to be a pitch, right? Very often, like in UK English, the area where a campsite sits or certain types of sports fields are called the pitch. On the pitch, exactly. And that all does seem to come back to that early definition around thrusting or driving a stake or piercing with a sharp point: those stakes of your tent, and throwing things, and even pitching in, working really vigorously.

AJR: What a cool word.

C: Yeah, it's a really cool word. It like has so many different definitions. The musical sense came much later. That came around the 1630s. And around the same time was the term that we use for like ships, like their motion, right? They pitch on the ocean. They kind of rise and fall. And so those seem to be slightly newer definitions of the term, but it does have scientific definitions as well. We think of pitch as the frequency, right? The frequency of a sound wave is going to give us its pitch. And often you'll see pitch used in like mathematics and geometry to describe distances between things and angles. So it's a super interesting word that has these sort of similar but different roots. And I think we use it all the time without recognizing it. I love that. I love when you have a word that's really, really old, and it just evolves and evolves and evolves. And you can sort of trace it back to where it started. And sometimes the modern usage feels wildly different. But if you go through the series of changes, it all kind of follows.

S: It makes sense, yeah.

C: Yeah, an understandable pattern.

S: I remember, like when we were in Atlanta for an event, there's Peachtree, the area around Atlanta. And we were told, and this is what I found when I looked into it, that it actually derives from pitch tree. And it's sort of a bastardization of that, like the original, it was actually a pitch tree, but people then confused it with peach tree. And that's the name that stuck.

E: But Georgia is the peach state, so you can sort of-

S: Understand why they would have made that conclusion.

AJR: Or is Georgia the pitch state? And we've messed it up all along.

E: Oh, no. Change the license plates.

J: Oh, the pitch there is delicious.

E: Fresh peach pie.

C: When I was digging into this word, you go to some obscure places on the Internet because you're trying to learn about how people are grappling with these things. And I found a forum, like a rock climbing or mountaineering forum. And apparently, the word pitch is often used to describe a segment of a long climb between two, how do you pronounce this word? Belays? Belays? Belays?

E: I don't know.

C: Yeah, because I'm not a rock climber. I have no idea. So on this forum, one person's like, oh, it came from the days before rock climbing, when climbers used to get their jollies by climbing large trees. Pine and spruce trees for the most part. And so they would accumulate all this sap on their hands. So trees that have a lot of sap would mean that like a pitch was typically short. But now they use ropes and ropes are longer. And then somebody else was like, okay, right track, totally wrong definition. That's actually, it does come from tree climbing, but the climbing style back then involved throwing a rope as high as possible over a branch, and then going up that way. So they would measure the height of a tree by the number of times they had to throw or pitch the rope to get to the top of the tree. And they're saying that's where the word pitch comes from. And then it devolves into them arguing over which one is an urban legend. So...

AJR: Classic internet.

C: But also, like, I guess that's what happens when a word has so many different definitions. It's easy to come up with some sort of lore around why this new usage came to be.

AJR: And you can kind of, yeah, tell a story based on like, oh, there used to be trees on fields and now we play sports on fields. Therefore it's a pitch, right? Like I could make up a story based on a different definition.

E: Whosever story wins had the best sales pitch.

News Item #1 - Crystal Genome Storage (25:22)

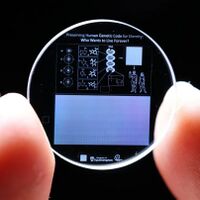

S: All right, Jay, tell us about preserving the human genome forever.

E: Oh, thank goodness.

J: All right. So, I mean this one's cool, but it's also kind of strange. So scientists-

S: That's kind of our sweet spot, Jay. Cool, but strange.

J: So the scientists, some scientists at the University of Southampton developed this very awesome way to store the entire human genome on a 5D, right? It's a five-dimensional memory crystal. You can think of this as creating a backup of humanity in case something horrible happens, right? Zombie apocalypse, whatever. I mean, your imagination could take you to the places that would end humanity. So the crystal is coin-sized and it's made from fused quartz. And the really amazing thing is that the storage crystal is designed to last billions of years. That's a long time, guys. It can withstand pretty extreme conditions, right? It can handle cosmic radiation. It can handle pretty severe impact forces, wild ranges in temperature, like from freezing to over 1800 degrees Fahrenheit. What would that be in Celsius, guys? It's hot, very hot. Celsius hot. Their idea is that in the, whatever, I'll throw a scenario at you guys: in the distant future, some advanced species is searching the ruined surface of the earth. And I don't know where they put it. I don't know. They didn't really talk about like the delivery mechanism, but like they enter this old underground thing and they find this crystal. And that crystal will have the information. So whatever these robots or advanced species are, they can recreate humanity. Pretty weird, right? That's strange if you think about it from different perspectives. But the technology is awesome, right? So, it's a crystal. It can store up to 360 terabytes of information, and it can keep that information stable for up to 300 quintillion years. Yeah, right. So that's a long time. That's longer than the universe has existed, quite obviously. It's absurdly long. It's over 21 billion times the current age of the universe. How about that for statistics? So researchers use ultra-fast lasers to etch tiny voids in the crystal's silica structure, right? Sounds pretty simple. 
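For reference, the two figures Jay quotes in this turn can be checked with a few lines of Python. This is a minimal sketch and not part of the episode: the function and constant names are illustrative, and the 13.8 billion year age of the universe is an assumed round figure rather than something stated on the show.

```python
# Back-of-the-envelope check of two numbers from the segment.
# ASSUMPTION: the universe is taken to be about 13.8 billion years old.
UNIVERSE_AGE_YEARS = 13.8e9

# Figure quoted in the segment: 300 quintillion years of data stability.
CRYSTAL_LIFETIME_YEARS = 300e18


def fahrenheit_to_celsius(deg_f: float) -> float:
    """Convert a temperature from degrees Fahrenheit to degrees Celsius."""
    return (deg_f - 32) * 5 / 9


def lifetime_in_universe_ages(
    lifetime_years: float = CRYSTAL_LIFETIME_YEARS,
    universe_age_years: float = UNIVERSE_AGE_YEARS,
) -> float:
    """Express a lifetime as a multiple of the assumed age of the universe."""
    return lifetime_years / universe_age_years


if __name__ == "__main__":
    # 1800 degrees Fahrenheit, the upper temperature mentioned in the segment.
    print(f"1800 F is about {fahrenheit_to_celsius(1800):.0f} C")
    # Ratio reported in billions of universe ages.
    ratio_billions = lifetime_in_universe_ages() / 1e9
    print(f"About {ratio_billions:.1f} billion times the age of the universe")
```

Under that assumed age, 1800 degrees Fahrenheit works out to a little under 1000 degrees Celsius, and the quoted lifetime comes to a bit more than 21 billion universe ages, which is consistent with what Jay says.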
And these nanostructures can hold data, but they hold it in five dimensions. And this is where it got its name: height, length, width, orientation, and position. I think that's really complicated, right? Just on the face, right? The height, length, width, orientation, and position. Imagine trying to reverse engineer that with the complexity of the human genome encoded in it. It is very complicated. So to help whoever or whatever, robots, to find the crystal, to help them understand what's inside, the researchers added some visual clues on the crystal surface. So they included images of the four essential chemical elements for life: hydrogen, oxygen, carbon, and nitrogen. That's nice. You know, hopefully, whatever thing would find this, they'd be able to figure out that, yeah, we know what the elements are because we're an advanced species. So these are the four that you need in order to, like, do what the crystal is going to give you the information for. They also give a depiction of the DNA double helix, so basically, you're going to create this, and diagrams of a human, man and woman, human men and women. Right. OK, so make one of these using these four things and follow the instructions that are encoded in these little voids that are inside this crystal. They're going to hopefully, whatever future technology is out there, their AI can look at that and figure it out within maybe a few hundred million years. So there's also a molecular breakdown of chromosomes and their structures within the cell, other data that they're going to need. They're meant to guide whoever's reading these in the future to interpret the stored genome. It's complicated, very, very complicated. Of course, there's a catch, because no one knows whether the technology to read this data will exist in the future. Now that's a funny way to put the idea that it's not like the future beings that would read this and use this thing are going to like find our old technology and just insert it. 
And somehow that'll even make sense to them. They would literally have to reverse engineer the whole thing. Now keep this in mind, I'll give you a little bit of perspective. Andrea, tell me a device that you owned, an electronic device you owned 10, 15, 20 years ago.

AJR: More like 20 years ago, I had drives that I could put floppy disks into.

J: Okay, now imagine taking one of those and trying to make it work today.

AJR: I have one and I would like to make it work for some, and I can't.

J: But yeah, but you know, the idea is you own this thing. You know exactly what it is. You have tons of technology all around you. You could read about this thing forever. You could just keep reading about all the different pieces of information that are out there on the web about it, but could you really make it work? And this is all made by humans in your lifespan, right? And we can't... You have an iPad or an iPod. Remember our iPods?

E: Sure.

J: Dig out your old one if you still have it and try to put a file on it. Good luck.

S: Honestly, that's not a good analogy, Jay. The real question is, could engineers figure out a way to access the data on that floppy disk? Probably.

J: Yeah, I would, I would think, oh wait, wait, you're talking of Andrea's floppy disk? Of course. I'm just saying it's a complicated mess from our own technology from 20 years ago. Like I'm just giving you like a...

S: I hear you. But yeah, to put things into perspective, I mean, you're right. They would require very advanced, intelligent, not only species, but individuals within that species who are the equivalent of engineers and scientists, whatever, probably working for years to reverse engineer it. But what's the other option, right? It's going to involve...

J: Oh, I know that, I know. I'm just trying to... Look, Steve, I can't help but almost joke about the idea that something is going to be able to reverse engineer this in the future. But of course, what else can you do? Like, if they wanted to do it, they did it, and I don't see... there really can't be a simpler way. You want the thing to be self-contained, you want it to last, and you want to give them some information on how to kind of figure it out.

C: Yeah, that's the important thing, right? Like, it's like the gold record. Didn't they actually print, like, how to build a record player on it?

S: No, there was a record player built into the program.

C: Oh, but I thought there was also instructions for, like-

S: How to do it. How to use it, yeah.

C: Oh, how to use it. Okay, yeah, yeah.

J: Cara, you realize that aliens did find that record and they sent us a message. You know what it was?

C: What was it?

J: Send More Chuck Berry.

E: Oh, I remember that. Sorry, Nightline. Yeah, 70s. Oh boy.

C: Wow.

J: Sorry, I'm old.

J: So there is the issue of recreating a human from genetic data, right? This is something that scientists aren't capable of doing today. You know, there has been tons of advancements in biology and our understanding of all of the material that it would take to actually do this. It is likely that we will be able to do it, right? It's not like something that's out of our, like we're not saying, oh my God, this is going to be hundreds of years. Like it is going to happen. But we can't do it yet. So we don't really know what it's going to fully take to do it. But they are storing the human genome. Other institutions and companies want to store frozen animal cells in craters on the moon. We have seed vaults. You know, this is something that humans do. We keep coming back to this disaster recovery situation, some of which might be done by humans themselves. Like, hey, we had an extinction and we want to bring back the Hoosy Watser animal, whatever it is, or bring back this plant. That seems very reasonable to me. But thinking of aliens or machines in the future finding this thing alone, that's a Herculean effort just to find the crystal. So anyway, there are a few little doodads I want to tell you about this thing. So they were inspired by the Voyager Golden Records. That was one of the inspirations for doing this project. And the other cool thing is there are applications for this data storage beyond the human genome, right? The technology has a lot of broader applications. I don't know how fast the read-write is on it, but that amount of data storage is phenomenal. That's a phenomenal amount of storage, guys. You could back up incredible amounts of data with that. So I would love to see this technology make its way out to where we could use it today. Again, I really need to know how fast you could read-write on it. But even still, like if you just wanted to do like a big backup, like let's say that Google wants to back up YouTube.

AJR: Right.

J: But I think that this thing would handle it nicely.

AJR: Better than my floppy drive, for sure.

J: Yeah, but we could start with the floppy drive.

AJR: Yeah, start there.

J: As long, but you let us know when you get it working and then we'll start doing that.

AJR: Anyone listening who's an engineer who can help me with this, get in touch because this thing isn't working. I got to get YouTube on my floppies. So someone reach out.

J: So you try to visualize this huge amount of data storage, right? So there was a picture that Bill Gates took. I remember him being, I think he was like hoisted up super high in the air and he had a CD in his hand and they had stacks of paper that went up. I don't know. I mean, it looked like he was up like 60 feet in the air worth of paper. The idea was that all of that data can fit on this wafer-thin piece of plastic, and this was new technology. I remember seeing that picture and getting the visual and going, wow, that's a lot of data. That is nothing compared to this thing. It's nothing. The amount of data storage that this thing can handle, I wish that Bob were here because he's really good at coming up with things like that, but it might be the size of the Earth of paper like, I don't know, I'm just making that up, but it's phenomenally bigger than what could fit on a CD, orders of magnitude more. So anyway I hope we never need to use it. I hope it doesn't have to be used. But the way things are going here with the politics in the U.S., we probably do need this.

AJR: Check in again in late November and let's see.

J: Yeah, I'll see you in November.

AJR: Now, you bring up an interesting point about our human obsession with making sure that we'll last beyond our own lifetimes, right? I mean, humans having kids, but it's got to be this like deeper evolutionary thing, right? Like you said, with the putting animal cells on the moon and storing seeds, like we really do. We are obsessed with it. Is it just evolution? Is it that simple?

C: No.

J: I'd like to, I'd like to think...

C: 100% it's not.

J: I mean, I would think it's probably likely that it's a combination of things. I mean, people want to preserve their cultures and their societies and all this stuff like it's kind of cooked into human psychology, I think.

C: Yeah, but it's also reinforced.

J: Sure. But again, it's the nurture or nature, like it's probably both.

S: I also think it's a little bit of projection because, I mean, imagine how cool would it be if we found a time capsule from an alien civilization that had massive amounts of data on it? You know, we would of course launch a project to try to figure it out, to try to reverse engineer it and try to see what kind of information is in there.

E: Contact.

S: I think we have a sense of history and talking to history in the future, preserving knowledge for later, partly because we wish older civilizations had done it for us.

AJR: Right.

E: Sure.

J: Yeah.

AJR: They were so lazy back then.

E: Right?

News Item #2 - How Reliable are Presidential Forecasts (37:16)

S: All right, Andrea, we're coming back to you in political science. Tell us how reliable presidential forecasts are.

AJR: All right. Well, I would love to tell you this and it's actually going to connect to Jay's news item, and we'll see how in just a moment. This is research from a preprint. So it's not peer reviewed. It's a preprint from some political scientists, one of whom I know personally. So maybe a small conflict of interest. But they are writing about political forecasting and particularly political polling. And I think all you really need to know about their argument, or you can learn a lot about their argument from the file name if you go to download the PDF, the file name is EndForecasting. And so that's kind of going to be the whole punchline. I've got to start using more fun file names is one takeaway. So they are taking on the polling industry and a lot of the claims from pollsters and polling aggregators about how accurate they are and how useful they are. And the other conflict of interest to share is that the big antagonist they have in this research paper is Nate Silver, and I also worked for Nate Silver for a year and a half, so this is like watching my two quantitative parents fight, I guess. And so basically the thrust of this research, and a lot of political scientists are skeptical of political forecasting, including me. But the thrust of this particular paper that I really enjoy is it says, look, forecasting, in their words, captivates the public. It drives media narratives, right? We cannot get away from hearing about polls and horse race coverage and who's ahead and who's behind. I don't even look and seek out polling information very much, though I do cave as we get closer and closer to the election. And I certainly looked at it when I was making a living working on it, but I try not to. But you can't avoid this stuff. And what they say and what I think is something that you all talk about very elegantly on this podcast a lot is what they call, quote, a veneer of scientific legitimacy around polls. And Jay, you kind of hit on this earlier.
And we put the word data next to something and we think therefore it's true and it's correct, and so we say, well, Harris is at 48%, Trump is at 46%, therefore we can say something about what's going to happen a month and a half from now. And there are lots of problems, of course, as far as the scientific legitimacy of polls and in particular of polling aggregators, right? So we don't know for sure that we're working with representative samples. There's an effort to do so. We don't know for sure. There's selection bias and non-response. And if you get a random call from a number you don't recognize, what are the odds you're going to pick it up? If you do pick it up, what are the odds you're going to stay to answer their questions when you realize it's just a survey? And then what are the odds you're actually going to tell them what you really think about politics to some random person? So we're not working with randomized samples in the way we'd like to in science. We don't know a lot about how different survey firms or aggregators, we don't know what's under the hood in these models. You know, Nate Silver, the New York Times, Politico, other outfits that run these aggregators make a living from these aggregators. You know, 538, or now Nate Silver has his own forecast. They aggregate all the polling data that's out there. They weight it according to how good the pollsters have been in the past based on their own metrics. I worked a little bit on collecting data for pollster evaluations back in the day, and I don't know how Nate ultimately took all that data on accuracy and weighted whether a pollster was an A or an A minus or whatever. And so it's proprietary information. So we don't know what goes into the theory and into these models. So we can't really evaluate them that way. We know that we're not reaching all of the electorate, but we also know that we're not reaching all of them in different ways.
Maybe the people we reached in 2016 and left out in 2016 are not the same as 2016. So there's no shortage of really serious empirical research problems, despite many pollsters and polling aggregators working from the best of intentions. Okay, so there are some problems in polling. We all kind of know, and if you paid attention to polling in 2016, you have some probably built-in skepticism or maybe knee-jerk fear when you hear new polling data come out. But people like Nate Silver argue that this is still better. This is sort of the moneyball argument for elections, right? This is still better than punditry. This is still better than commentators and talking heads just assuming and thinking and expounding on what they think is going to happen. Here's where this paper comes in and where I think we start to have a lot of fun and where Jay's article from our news piece is also relevant. So these researchers, who use machine learning to make predictions in politics, say, look, usually in machine learning, if we want to evaluate how good a model is, we have a ton of training and testing data that we train the model on and then we compare it to whether it predicted reality correctly. So if you think of market data, stock data, weather data, you can evaluate these forecasts and sort of say roughly how good they were or how not good they were. The problem with elections is at least presidential elections in the United States are only once every four years. And that means we've only had about 60 in the United States. So that's not a lot. That's a very limited quantity of data that we can compare the outcomes of these polls to. We also haven't been doing the polling that long, right? Now some argue that, well, we have state data and we have local elections. So isn't that a lot more data? The problem is that a lot of state data is correlated. How one state goes tells you a lot about how a lot of other states are going to go.
And the way the United States election works, with swing states and the Electoral College, means that even if we can get state polling correct, it's still not necessarily the case that we're going to be particularly accurate at national polling because, as we all have lived through, teeny tiny, seemingly random changes can totally flip how an entire nation... So state data doesn't really solve the problem. So what these researchers did is they said, okay, we are going to, and it's worth taking a look at the paper, it's open source at the moment, open access at the moment. They said, we're going to simulate how long it would take to actually have enough data to evaluate whether, say, Nate Silver's model is accurate. It's not, oh, did they get the last handful right? Because we can't really statistically separate that from we happen to throw heads three times in a row or whatever, right? So they said, let's simulate and see how long it's going to take. And basically the punchline, and Jay, this is where we're going to need those crystals, is it will take decades more of elections and research to find out if these forecasts that we're all clicking refresh on right now, to find out if these probabilistic forecasts are more accurate than pundits and talking heads. It will take centuries of data to actually statistically evaluate and compare the models to see if these forecasters are actually reliably and helpfully predicting state and national elections. And it will take millennia, they say, to figure out what techniques are actually best, like which of these models that purport to be the best one is actually the best one. And they even say, look, maybe we can do better because we're all still living in the present. We don't have these crystals yet. We do all want to know what's going to happen in November and four years from now and so on.
So one thing they say is, well, you could say, what if we look at forecasters who make predictions about other things? So maybe congressional races that happen more often, maybe sports outcomes. But even that, who's to say that the forces underlying a presidential model are going to be comparable to, say, someone who's accurately predicting if the Bills are going to win or whatever. So even that kind of generous version is not particularly helpful. And I guess the last thing I'll say here, and the reason I really like this, is there is an underlying tension that I should be candid about between kind of generally political scientists and polling, where in political science, most of the time we try to predict elections on what we call fundamentals. So the economy, things like that, and not really what people say they're going to do. It's more like what is happening in the country. And so there's sort of this head-to-head between fundamentals versus polling and kind of more public opinion, real-time data collection. And basically the punchline of all of this is that we don't know and we don't have enough data to know. And the last thing I'll say is, and this kind of reminds me, Steve, of things you've said, where people say, well, why are horoscopes so dangerous? Why are crystals, not Jay's crystals, but like salt and stuff that people buy that's like, what's the harm in spending 50 bucks on a blue rock? Well, there is a lot of harm. And it turns out, and there are experimental studies, and there's some great links in this paper to existing peer-reviewed studies, where we say, look, it turns out that there is a lot of evidence to suggest that polling, as we currently talk about it, makes people feel a false sense of confidence or security about what's going to happen. That can cause depressed turnout. For example, we think that might be some of what's going on in 2016. These studies are more experimental.
So we're in a lab and we say, hey, here are the polling outcomes. Are you going to vote? So it's not the same thing. And you can't really say for sure. But it really can mess with our understanding of what's going to happen. It can mislead the public about how viable certain candidates are and cause them to abandon a candidate that actually could be relatively viable because we're not interpreting probabilities correctly. And it also encourages this kind of horse race coverage that we're all hearing about, as opposed to talking about issues and policies and other things that might matter. So it's not harmless. So their headline is, look, yeah, file name, right? End forecasting. My view on it, if I may, is I think there's a lot of money being made in the polling industry and I don't see it going away. I would like us to be a lot more thoughtful, and we said this at the beginning of the episode today, a lot more thoughtful about what are these results actually able to tell us and what can't they tell us? And that includes things like thinking about probabilities, understanding that if someone is at 58% approval, that doesn't mean they're going to get 58% of the vote, basic things like that, changing how we report on these things, talking about uncertainty a lot more, and I think instilling this kind of doubt. But it's not the same thing as saying, oh, polls are wrong or polls are right. The punchline here is we don't know and we don't have enough data to scientifically know. So it's some information, but to this moment, we don't know if it's better or worse than a coin flip or a pundit.

S: Andrea, are you distinguishing polling data from forecasting? Because polling data—every time I read a poll, they're like, this is a snapshot of what people are saying right now. This does not predict what's going to happen in the future. These are two different things.

AJR: That's true. So the polls are the data that go into the forecast, and that's a fair distinction, yes.

S: Yeah, but you could say like the poll is the poll, and a poll, yeah, I agree that again, the more I read about it, it's like, oh, yeah, the answer rate on this poll was 1%. So it's really telling us almost nothing. That's just telling us who's willing to answer the poll. That's probably overwhelming any other factors in there.

C: But then the forecasts are based upon those.

AJR: The forecasts are based on those, and then they're based on other things that are added in around the forecasters' own assumptions about how different demographics are going to show up and how much it's going to matter. And the problem is you're always looking through the rear view mirror because you're saying, oh, gosh, we really underestimated how Trump voters would turn out in 2016. So now let's, I'm oversimplifying, this isn't quite how it works, but like now let's overestimate how likely they are to turn out. But then it's like, well, who's to say that this election is going to operate the way the past ones did? And so we're kind of jerking around. But we also, and this is what this article is saying, we don't know. In 2016, in Nate Silver's defense, he was more accurate, more conservative on Hillary's likelihood of winning than many other outfits. But we don't know what was going on in that model that needed to change, right, or what we were missing, or if it was absolutely correct and that was the percentage, and we just saw the outcome that was slightly less likely, which is totally reasonable.

S: So I wonder if this system is inherently unpredictable, or if it's just an order of magnitude more difficult than the tools we're using right now. And I wonder, what if again, we sic AI and supercomputer simulations on this, could we do a thousand years of simulations and test our models that way? Or is it just that only real-world data is really going to help?

AJR: I mean, I think there is a version of the world where you could put all of that in. What I genuinely don't know, and again, this kind of goes back to where we started this conversation, is what's going to happen in the underlying data generating processes? Like, if politics continues roughly as it is now, or as it has been for the past, say, eight years, then we could probably simulate a whole bunch of things and we could maybe make some claims with enough computational power and AI. You know, it's sort of the equivalent of someone in the 1960s trying to get right what's going on here. And I don't want to use this term lightly, but it's more a chaotic system than anything else, where teeny tiny little differences can lead to wildly different outcomes. So to me, it seems hard to imagine, but I'm open to it.

S: It also reminds me of psychohistory from the Foundation series. And I mean, Donald Trump is the Mule, right? I mean, he is this random factor that nobody could have predicted that changes the rules. Like you talk about the fundamentals, the fundamentals are meaningless when you have something like this going on, right?

AJR: Yes, yes.

S: You don't know that it really tells us anything much. Not enough, not enough to predict what's going to happen.

AJR: Right. I mean, and that goes back, Steve, to your distinction there between polls and forecasts, which is like, the polls, there's information in the polls. And the best way to look at them is with a lot of skepticism. There's all this selection bias that we just talked about. There's very low response rates. There's all kinds of things. We don't really know the methodology, all this stuff. But if you look at the overall suite of the polls and look at them in aggregate, set aside forecasting, just sort of look at the snapshots that we're getting. The stunning thing is that Trump's approval rating does not seem to budge or Trump's, you know.

S: Yeah.

AJR: "I'm planning on voting for Trump." Doesn't seem to budge no matter what's happening. That just would not happen 16 years, 10 years ago, right? President Howard Dean yelled the wrong way and we never heard from him again.

S: I know it's amazing. Right, lots of theories about why that's the case, but I guess we can't get into that right now.

AJR: But I think it's worth trying to study politics and these sort of squishy or more complex things through these empirical tools that we have, but we also kind of see these spin-offs like polling and forecasting generating this sense of false security or false certainty, and I think that it's worth doing the science, but when you're not careful about it, the science becomes pseudoscience.

S: All right. Thanks, Andrea.

AJR: Sure.

News Item #3 - Myopia Epidemic (52:25)

S: So are you guys aware that we're having a myopia epidemic?

AJR: Is it contagious?

J: What could possibly be causing that?

S: So what percentage of the world do you think has myopia?

J: Twenty five.

C: Me.

AJR: I'm going to say 30. I like Jay's answer.

C: I think it's higher than that. Let me think. All the people that wear glasses, how many of them are like under 45, 50?

E: The aggregate polling on this, Steve, says about 12.3%.

C: I'm going to say it's higher than that.

AJR: I'd like to know the turnout of people with myopia in the next election.

S: It's 35.81% in 2023. It was 24% in 1990. So it's gone up from basically 25% to 35% over the last 30 years.

C: Is it really though, or are we just diagnosing kids younger?

S: So yeah, that's always the question, right? Is it an artifact or is it real? We talked about this with ADHD, I think it was just last week. And it's real. It's an absolutely real increase. It's not an artifact of diagnosis or changing the definition or whatever. It's really happening. What's interesting is that it's continuing to increase and there was a recent paper, which is what prompted this discussion, that estimates that by 2050, we'll be at 40%, although other estimates put it closer to 50%. Imagine half the world being myopic, basically at some time after 2050 is sort of the consensus of opinion here. Now you say, wow, but there's already parts of the world where it's higher. In China, it's already like 70, 80%. So what's funny is that if you go back to the 1940s, 1950s, the typical stereotype of somebody from China was somebody with those small round glasses. That's because there was so much myopia and that was a very common style of glasses that they wore. So it was actually real. You know what I mean? It was a stereotype, but it was based upon reality.

C: But what was the difference, sorry, back then, what was the difference in the number of people in China versus the US?

S: I mean, so in the 1950s, like in the US, it was 2%, 3%, and it was already in the double digits in China. It was already 20%. So it went from 20% to like 80%, but we were at like 2%, 3%, and now we're at 24%, 25%.

E: Why do I feel like screens are going to be a factor here?

C: And I also think we cannot say that it's a... We can say it's a real phenomenon now, but I don't think we can say that the 2% or 3% of Americans who wore glasses for myopia in 1950 were... That we were catching all Americans. Were we screening for vision?

S: Yeah. So that's a good question. When did... Because the thing is, we do screen children in school for myopia, for eye problems, for vision.

C: Now.

S: Yeah. And that goes back at least to the 50s and 60s.

C: Really?

E: I took many eye tests in school.

AJR: I was screened.

S: So yeah, I was screened in the 1960s.

AJR: I was checked for scoliosis.

E: Oh yeah.

C: I remember that test.

E: And they used to do lice checks.

S: So it goes back to some time like that. Certainly goes back to the second half of the 20th century. How far does good data go? I don't know. That's a little bit harder to say, but a lot of the studies that I was looking at start to track it in 1950, so I'm assuming that's when they started getting good data. So even... Certainly in the last 30 years, we have pretty consistent data, and there's a steady, steady trend of increasing prevalence and incidence of nearsightedness, of myopia. So this is a physical problem with the eye, right? I mean, in myopia, the eye is too elongated, and so the image gets focused too far forward, like in front of the retina, which makes it hard to see in the distance, hence nearsighted. So what do you guys think is causing it?

E: Well, it sounds like screens are a factor.

S: Evan thinks it's screens.

J: I would think that that's potentially a huge factor. I mean, I know the reason why screens affect you that way is because you're focusing too close for too long. I don't know. I mean the other thing would be what, diet?

C: Or neurotoxin, not neurotoxin, sorry, but yeah toxins that affect development, I mean.

E: Plastics?

C: Yeah, like we live in a, pre-1950 there was no plastic. We have like so many things in our blood now that didn't exist, like Teflon and all of that.

AJR: I'm gonna go with, I'm with you all on all of those and I'm gonna say maybe we, it's like we stopped thinking of people with glasses as less attractive and so we're all marrying each other with glasses and we're reproducing with myopia now. It's no longer like evolutionarily disadvantageous.

C: Yeah, assortative mating.

AJR: There we go, that's what I want.

S: Interesting. Those are some good thoughts, very creative.

E: However.

C: These are all wrong.

S: The short answer is we don't know.

C: It's all of those things.

S: There are two basic theories that are the most plausible. Evan's the closest. It could be partly screens, and what Jay said is like you're spending a lot of your day focusing close up, but this phenomenon predates screens though, and it doesn't really explain why some countries have a much higher rate than other countries, because it does not track with screen use.

E: Then it would be genetics?

S: No, it's not genetic. It's too fast for this to really be genetic.

C: It's developmental.

S: It's developmental, but what's the factor? There's no signal, there's no hypothesis or notion or evidence that it's anything environmental like...

E: Literacy rates?

AJR: Yeah, I'm with Evan. Literacy, studying...

S: No. What it is is, again, very good hypotheses, but the leading hypothesis is that it's sunlight. It's that kids specifically are spending less time outside.

E: Vitamin D?

S: No.

C: Vitamin D is made from absorption in your skin. So this is direct sunlight to the eyeball?

S: Sunlight causes dopamine to be released in the eye, which also is important for growth. And so in the absence of that, the eye develops abnormally, right? So it is a developmental problem. As the eye grows, it becomes abnormally shaped. So it could be the absence of sunlight, but the other hypothesis is that it's a relative decrease in being in an environment where you have to focus in the distance at infinity, right? If you're inside all the time, you're never focusing at infinity. And so just from use over years, your eye develops with a bias towards focusing close up. So it doesn't necessarily require screens, but screens certainly are part of that phenomenon. It's really just that kids are not spending enough time outside. That's the bottom line.

C: But you keep calling that a hypothesis. Is there any evidence to support that?

S: Yes, there's a lot of evidence. There's a lot of evidence to support that. That's why I said these are the leading hypotheses, because there's evidence to support that. And it comes both ways. It correlates with urbanization. It correlates with time spent inside versus outside. And also, if you, as a matter of policy, give kids more time outside, it reduces their risk of myopia, it reverses the trend. That's critical, because in order to prove a cause and effect, you have to show that if you eliminate the cause, the effect goes away, right? So we have that data, but they-

E: Locked up 100 children outside for a year and studied them and see what they did.

S: See, at this point, there's pretty much no question that outdoors time is the critical element.

C: So like the stereotype that little book nerds all have glasses?

S: Yeah, is actually... What we don't know is the relative contribution of the dopamine from sunlight versus the focusing in the distance. But being outside is the critical element.

AJR: Is it only for children, or should I go outside and stare at the distance?

S: So that's a really good question. It's developmental, so it's definitely true through high school. Not really sure if there's any effect once you're already an adult. So probably not adults. But doesn't mean it's not good to get outside and get some sunshine.

E: Nothing wrong with that.

S: So the good news is the fix is simple. I'm not going to say easy. But it is simple. It is get kids outside, get them out in the sun and away from screens.

E: But off my lawn.

S: Right. But it's not necessarily going to be easy because cultural changes, public health measures like that, are hard. It's hard to get people to change.

C: Do you guys remember, I think we talked about this on the show before, but there's like this viral video. The dad's in the kitchen doing dishes or something and the kid's outside the kitchen window and he's like, can I come in yet? And he's like, no, you're grounded. Stay outside. I just want to come inside.

E: Totally reversed.

C: Like go play. What am I supposed to do? I don't care.

E: I don't want to see you until supper.

C: Yeah. That's amazing.

S: I mean when we were kids, we spent the entire day outside.

E: That was it. Saturday morning, 7 AM. See you for lunch. Thanks. Out. See you at dark.

C: They didn't know where we were.

J: I mean, my kids like recently, like in the last few days after school, they've been asking me to take them to the park. And I'm thrilled. You know, I'm like, you want to be outside? You got it. You can stay there as long as you want. You know, the problem is, we have shifted to a screen culture, and it is so deeply embedded now. Last year, I decided that I was not going to use my phone as much as possible, and I did it for about five or six months. I was not looking at the news, I was mostly off of my phone, and I creeped all the way back to a massive screen addiction again. It just happens, like, I don't even know it's happening, and all of a sudden I'm like, I can't sit without looking at a screen. Like, I have to tell myself, you're okay. You know what I mean?

S: But again, as we've said previously, it's probably a better approach to not try to minimize your screen time but to maximize your not screen time. So like, say, I'm going to do some activity that gets me outside: take up gardening or whatever, go play catch with your kid outside. Just do something that has to be happening outside, especially in the sunlight.

E: Go for a walk.

S: Yeah, go for a walk. Just do something. If you exercise outside versus inside, do something where you're getting more sunlight. There's also lots of evidence that, yeah, we Americans generally are undersunned. You know, we do have relative vitamin D deficiency. And also, contact with green spaces is psychologically healthy. And yeah, so that also means that like, again, this is a big problem of urbanization. And the reason why China probably had such a big problem is because of the rapid urbanization of their population. There are just lots of people crammed into cities. So that's why there's such an epidemic there especially, but this is happening everywhere. So yeah, having green spaces in cities, more parks, and school time should incorporate outside time as much as possible. Classes outside, recess outside. Gotta get those kids outside.

AJR: Steve, what about the Google vision, or what is it, Apple vision, and all the goggles? What if we look at the world through those outside, but project our text messages and the news really far into the future? Far into the distance. Into the distance in the goggles.

E: Artificial horizon kind of thing.

AJR: Does that mean I can keep up my phone addiction or my children's phone addiction and future children's phone addiction and let them not get myopia?

S: So that's actually been studied.

AJR: Perfect.

S: And the answer is mixed. We don't know. So that's the idea. You put somebody in VR because when you- I don't know how much you've used VR goggles.

AJR: Almost none.

S: I've used them extensively. So one thing that's interesting is that I don't have to wear reading glasses when I use VR. But also, when you're focusing in the distance, you're focusing in the distance. It's a real experience, you know what I mean? At least for that part of it, it seems like it should work, but it wouldn't necessarily replace the natural sunlight part of it, unless we simulate that in the VR experience, which I guess is feasible. But maybe, so this is just like using VR. I wonder if we, like, have optimized VR programs that we test, right? To treat myopia, to prevent developmental myopia. And that becomes just like you take your vitamin: you'd spend an hour in VR every day to make sure you don't get myopia. You know what I mean? Like, that just becomes part of adapting to an urbanized life: you have to get your VR time.

C: You guys, myopia's not that bad.

AJR: That was the other thought I was having.

C: You just wear glasses.

S: It's horrible, Cara.

C: It's not.

J: I hate wearing glasses.

AJR: Isn't that what laser surgery is for?

S: It's not that bad, but if we could prevent it, it's also not bad to prevent it. It's all good. You're just telling people to spend more time outside.

E: Outside, I'm all for outside.

C: Of course, but I don't think we have to go to insanely great lengths to, I don't know.

J: No, Cara, we take this all the way.

C: Okay.

AJR: Sure, we can't get rid of diseases that are killing everybody but myopia will come to an end. You know, that Twilight Zone where the guy breaks his reading glasses.

E: It's not fair.

AJR: We can't let that happen.

E: Burgess Meredith.

J: Yeah, I saw that.

S: But there is a systematic review that I'm looking at where it does conclude that it can be effective if done properly, with the right program. VR can work to prevent myopia. Yeah, very interesting.

E: Some insanity, though.

News Item #4 - Biotwang Explained (1:07:01)

S: Evan, tell us about the bio twang. Am I saying that right?

E: Yeah, bio twang. Isn't that a cool word? Alright, but I've got to set this up a little bit, and I'm going to start with three questions. Alright, here's the first question. What is the collective noun for a group of crows?

S: A murder.

E: A murder of crows, correct. And do you know what the collective noun for a group of lions is?

S: Lions? Pride.

E: Yes, and the last one. What's a group of scientists' collective noun?

AJR: A fight.

E: Well, I know. Nobody really knows. I came up with the term. It's called a baffle because scientists are always baffled, it appears. Try searching a news item using the term scientist baffled, and you will come up with news items every single day. I kid you not because I've done it. And whenever something is weird or unusual or unexplained in the context of science, that means scientists are baffled.

S: Evan, do you remember when we, like this is going back 15 years, one of the bits that we did for the show was coming up with a skeptical, I mean a collective word for skeptics?

E: For skeptics, yes.

S: Yeah, I can't remember what we came up with.

E: Oh yeah, I can't remember either. It must not have been great, otherwise it would have stuck.

S: Yeah, it didn't stick.

E: A doubt of skeptics or something.

S: Yeah, that was one of them.

E: That was something like that. You know, very predictable.

S: A superciliousness of skeptics.

E: I don't recall if we tackled, Steve and Jay, this subject when this news first broke in 2014. Underwater audio recordings captured what was described as, well, a bio twang. And this was during an acoustic survey in the Mariana Trench, which is the world's deepest oceanic trench. It's located in the Western Pacific Ocean, about 200 miles, which is 322 kilometers, southwest of Guam and southeast of the Mariana Islands. Stretches to around 1,500 miles, 2,500 kilometers roughly, and it's about 43 miles or 69 kilometers wide.

S: So I know we've covered the bloop.

E: We've covered the bloop. I wasn't sure about the bio twang though. We don't want to confuse these things. We're talking strictly of the bio twang. There's news about it this week. But back in 2014, the researchers were using underwater gliders and they recorded this mysterious sound that had two distinct components to it, a low rumble followed by a high pitched metallic sound. And you can find this on YouTube and other places online, and go listen for yourself. It lasts for about between two and a half, three and a half seconds, and includes five separate parts with a dramatic range of frequencies. The low is 38 hertz, and then it has this sort of metallic finale to it, 8,000 hertz. And this, at the time, had scientists what? Baffled. Yep, what the heck could be causing that kind of sound in that area of the ocean? And apparently there were no other recordings of this noise to compare it against. Was the noise artificial? You know, human-made noise? Or was it something living? A large mammal? Whale or something? Was it a kraken or a leviathan? Maybe it was one of those frozen aliens delivered by Xenu 75 million years ago to Earth on a Boeing DC-8. No, that's Scientology, folks.

S: That's Scientology.

E: That is actual. That's, yeah. So they were baffled for a while. 2016, though, a scientific paper was published in the Journal of the Acoustical Society of America. There's a new one for me. Authored by researchers from the Cooperative Institute for Marine Resources Studies at Oregon State University and the National Oceanic and Atmospheric Administration, in which they reported the low frequency moaning part is typical of baleen whales. And that kind of twangy sound that it makes is really unique. We don't find many new baleen whale calls. OK. Their team analyzed the sound. They found it was similar to what they had called the Star Wars sound, a call produced by the dwarf mink or minke, M-I-N-K-E, dwarf minke whale, a type of baleen whale that lives off the northeast coast of Australia near the Great Barrier Reef. OK, so geographically, that checks out. They said the species is the smallest of the baleen whales and it doesn't spend much time at the surface. OK, so way down below. It has an inconspicuous blow and often lives in areas where high seas make sighting difficult. But they call frequently, making them good candidates for acoustic studies. So that was their suggestion at the time. However, they didn't really have, what, a sighting or something to corroborate this. It's just really a theory that they're putting out there, but it would seem to be reasonable. But they also said there might be some complications with that theory because baleen whale calls are typically heard in conjunction with their winter mating season. But this bio twang has been recorded throughout the year. So, OK, there's an inconsistency. They needed to study this some more. So, yeah, 2016 scientists were maybe less baffled, but still baffled because they couldn't definitively say that this was a whale. More evidence was needed. So fast forward to now, this week's news item, update on the bio twang.
And I think we can now say the scientists are even less baffled than before because the study has revealed the exact origin of the Pacific Ocean's mysterious bio twang noises, emanating from whales called Bryde's whales. That's B-R-Y-D-E apostrophe S, Bryde's whales. And it has a taxonomical name, which I can't pronounce. Balaenoptera edeni.

C: I like it. I will say, Evan, or I might ask the group. Sorry I've waited so long. Is that a New England pronunciation of baleen?

S: No, that's an Evan pronunciation.

C: Oh, okay, I'm just making sure.

E: No, I have my own dialect.

C: Yeah, I was like, maybe it is, I'm just a weird Texan, but I've always heard baleen.

S: I've heard baleen, I've heard baleen.

E: But I said baleen.

S: I'd never heard baleen until Evan just said it.

E: But it works. We've got all three of them.

AJR: I didn't even connect it to baleen, even though it's about whales.

E: I'm going to present a scientific paper to the whale-ologist community and offer it up as a correction. But in any case, these Bryde's whales. They are using the call to locate one another, what they describe as a giant game of Marco Polo. That's what the researchers said. Basically, the whales are playing games with us, and we primates are scratching our collective heads while the whales are making fun of us everywhere. The new study was published a week ago tonight, and it was in the journal Frontiers in Marine Science, where they proved that the Bryde's whales were making the noise thanks to new artificial intelligence tools, which were able to sift through over 200,000 hours of audio recordings containing various ocean sounds. They suspected that the Bryde's whales were behind the biotwang when they spotted, here we go, they actually found 10 of them swimming near the Mariana Islands and they recorded 9 of them making this distinctive noise. So here we go, that's better evidence for us. From, let's see, Ann Allen, the study's lead author. Once is a coincidence, twice is happenstance, nine times though, definitely a Bryde's whale. They conclusively proved that they were the ones making the call. The team matched the occurrence of the noises to the migration patterns of the species as well, and that meant sorting through, wow, years of audio recordings captured by monitoring stations all across the region. The study also found that the biotwang could only be heard in the Northwest Pacific despite Bryde's whales roaming across a much wider area, suggesting that only a specific population of Bryde's whales are the ones making this specific noise. So there you go. Scientists, for the most part, are now no longer baffled in regards to the biotwang.

AJR: I'm going to notice scientists being baffled every day. I mean, you obviously looked into it, but I can't believe how I've missed out on years of being baffled.

E: Political scientists are baffled.

AJR: Oh, we're baffled. I tell you what.

E: All right.

J: Thanks, Evan.

E: You're welcome.

Who's That Noisy? + Announcements (1:15:55)

S: All right, Jay, it's Who's That Noisy time.

J: All right, guys, last week I played this noisy. [plays Noisy] What is that? Any ideas guys?

AJR: Attack of killer mechanical hornets?

C: I don't think we can read that.

E: It's a combination dentist drill and slot machine.