SGU Episode 891

| This episode is in the middle of being transcribed by Hearmepurr (talk) as of 2022-11-05. To help avoid duplication, please do not transcribe this episode while this message is displayed. |

| This episode was transcribed by the Google Web Speech API Demonstration (or another automatic method) and therefore will require careful proof-reading. |

| SGU Episode 891 |
|---|
| August 6th 2022 |
| Skeptical Rogues |
| S: Steven Novella; B: Bob Novella; C: Cara Santa Maria; J: Jay Novella |
| Quote of the Week |
| If anyone can refute me–show me I'm making a mistake or looking at things from the wrong perspective–I'll gladly change. It's the truth I'm after, and the truth never harmed anyone. What harms us is to persist in self-deceit and ignorance. (Marcus Aurelius, Roman emperor) |
| Links |
| Download Podcast; Show Notes; Forum Discussion |

Introduction, passing of Nichelle Nichols

Voice-over: You're listening to the Skeptics' Guide to the Universe, your escape to reality.

[00:12.720 --> 00:17.200] Today is Tuesday, August 2nd, 2022, and this is your host, Steven Novella.

[00:17.200 --> 00:23.000] Joining me this week are Bob Novella, everybody, Cara Santa Maria, howdy, and Jay Novella.

[00:23.000 --> 00:24.000] Hey, guys.

[00:24.000 --> 00:25.800] Evan is off this week.

[00:25.800 --> 00:31.600] Yeah, he is otherwise engaged, some family matter, but he'll be joining us for the next

[00:31.600 --> 00:32.600] episode.

[00:32.600 --> 00:38.080] This next episode we're going to record actually is NECSS, which is, it's happening probably

[00:38.080 --> 00:42.240] right now as you're listening to this, and, or maybe it happened already if you're listening

[00:42.240 --> 00:47.460] to this after Saturday, and that's, that show will be airing in two weeks.

[00:47.460 --> 00:53.560] So guys, the big news this week, sad news, Nichelle Nichols passed away.

[00:53.560 --> 00:57.120] She was 89.

[00:57.120 --> 00:58.120] Good age, good age.

[00:58.120 --> 00:59.120] Yeah, 89.

[00:59.120 --> 01:00.120] Lived a long life.

[01:00.120 --> 01:01.120] Always great.

[01:01.120 --> 01:06.000] So that means that only Kirk, Sulu, and Chekov are left from the bridge crew of the original

[01:06.000 --> 01:07.000] series.

[01:07.000 --> 01:08.000] Yeah.

[01:08.000 --> 01:13.040] You know what I loved about her and that original bridge crew was when I was a kid watching

[01:13.040 --> 01:18.900] the original Star Trek series, I didn't realize that there was anything special about the

[01:18.900 --> 01:22.240] fact that it was a multicultural crew.

[01:22.240 --> 01:23.240] You know what I mean?

[01:23.240 --> 01:25.280] And it wasn't just humans and aliens.

[01:25.280 --> 01:29.400] There was people from different parts of the world, but you know, different, the differences

[01:29.400 --> 01:30.600] were important back then.

[01:30.600 --> 01:35.680] And it was one of the reasons why Gene Roddenberry actually, you know, constructed it that way.

[01:35.680 --> 01:39.760] And she was, she was key to that because not only was she black, but she was a woman.

[01:39.760 --> 01:44.180] Yeah, she was one of the first black women on TV, like in a major series.

[01:44.180 --> 01:45.180] And she had a significant role.

[01:45.180 --> 01:50.000] I know it's very easy to, to, you know, to denigrate say, well, she was basically answering

[01:50.000 --> 01:51.000] the phone, right?

[01:51.000 --> 01:54.360] Sort of the comedian, one line about her.

[01:54.360 --> 01:58.600] But she actually, you know, had, she was part of the bridge crew, man.

[01:58.600 --> 02:00.640] And I remember there was one episode, I just watched it.

[02:00.640 --> 02:05.920] I just happened to watch it because I was, we're going to be reviewing it on AQ6, Balance

[02:05.920 --> 02:09.760] of Terror, you know, where they're fighting the Romulans for the first time.

[02:09.760 --> 02:12.120] And she is called to the helm.

[02:12.120 --> 02:16.040] She has to actually work at the helm because the helmsman had to be called away.

[02:16.040 --> 02:20.360] So she, you know, if you're on the bridge crew, you're, you're able to handle any station

[02:20.360 --> 02:21.960] that you're called upon to.

[02:21.960 --> 02:28.320] So anyway, it was, the point is it wasn't, it wasn't a small position on the Enterprise

[02:28.320 --> 02:33.560] and it was, and her character was a significant character in the show.

[02:33.560 --> 02:38.560] But even she, you know, had doubts about, about that character that she was portraying.

[02:38.560 --> 02:39.560] Yeah.

[02:39.560 --> 02:43.640] You know, the story is she was, she actually gave her resignation letter after season one

[02:43.640 --> 02:47.400] to Gene Roddenberry. And Martin Luther King Jr.,

[02:47.400 --> 02:52.440] she was talking to him at some point, not too soon, not too far after that.

[02:52.440 --> 02:56.240] And he told her like, you absolutely have to stay on the show.

[02:56.240 --> 03:00.800] You don't know how unbelievably important it is as a black woman that you're on this

[03:00.800 --> 03:02.080] particular TV show.

[03:02.080 --> 03:03.080] Yeah.

[03:03.080 --> 03:04.080] She's a role model.

[03:04.080 --> 03:05.080] Yeah.

[03:05.080 --> 03:06.080] And he convinced her to stay.

[03:06.080 --> 03:09.900] And it was, you know, it was really, he felt that it was very important to have that representation

[03:09.900 --> 03:10.900] on TV.

[03:10.900 --> 03:11.900] It really was important.

[03:11.900 --> 03:12.900] It was very important.

[03:12.900 --> 03:13.900] Yeah.

[03:13.900 --> 03:17.820] You know, Whoopi Goldberg was talking about it recently and she said that when she saw

[03:17.820 --> 03:22.120] it growing up, she was running around the house and she was saying, you know, she was

[03:22.120 --> 03:26.640] very excited, very happy because from her point of view, this was the first time that

[03:26.640 --> 03:30.000] she had seen a black woman on TV that wasn't a maid.

[03:30.000 --> 03:33.880] And to her, to her, that was huge, huge.

[03:33.880 --> 03:38.480] And it was, I think it was huge for lots of, lots of people growing up at that time.

[03:38.480 --> 03:43.880] So yeah, it was clearly a very important role at a very important time.

[03:43.880 --> 03:47.940] It's a point that it's kind of the point that Dr. King was making.

[03:47.940 --> 03:52.040] He was saying that if you leave, they could just fill anybody in that role.

[03:52.040 --> 03:55.320] Like the great thing about the role you have is that it's not, there's nothing about it

[03:55.320 --> 03:56.380] that's black.

[03:56.380 --> 03:58.200] There's nothing about it that's female.

[03:58.200 --> 03:59.340] It's just a strong role.

[03:59.340 --> 04:00.920] They could stick an alien in there.

[04:00.920 --> 04:03.500] They could stick anybody to fill your role.

[04:03.500 --> 04:09.540] And so it shows that, you know, black women are people, fully formed people who can have

[04:09.540 --> 04:13.260] skills and who can engage.

[04:13.260 --> 04:16.800] It's not just, like you said, like you're not just the maid.

[04:16.800 --> 04:21.840] And how important for not just young people, not just young black people, not just young

[04:21.840 --> 04:26.720] black women to see that, but white men to see that, you know?

[04:26.720 --> 04:31.960] I mean, Cara, I was a kid, I was a kid watching those Star Trek episodes.

[04:31.960 --> 04:36.520] And it absolutely had an impact on my life in so many different ways.

[04:36.520 --> 04:41.960] But I, you know, I remember thinking about her on that crew and not thinking anything

[04:41.960 --> 04:42.960] odd about it.

[04:42.960 --> 04:43.960] And that's, that's my point.

[04:43.960 --> 04:44.960] Yeah.

[04:44.960 --> 04:45.960] It normalizes it.

[04:45.960 --> 04:48.200] As a young kid, I wasn't looking at her as black or as a woman.

[04:48.200 --> 04:51.720] I was just looking at her as one of the, one of the people on the bridge.

[04:51.720 --> 04:54.200] And I know it had an impact on me in my life.

[04:54.200 --> 04:59.800] Do you guys know that after Star Trek that Nichelle Nichols went to work for NASA recruiting

[04:59.800 --> 05:02.960] women and minorities into the space program?

[05:02.960 --> 05:09.000] And she actually, she recruited Sally Ride, who was the first American female astronaut.

[05:09.000 --> 05:10.000] That is awesome.

[05:10.000 --> 05:11.000] I didn't know that.

[05:11.000 --> 05:15.840] Oh, and let's not forget that in 1968, wow, what a year, 1968.

[05:15.840 --> 05:17.680] Think about everything that happened that year.

[05:17.680 --> 05:21.240] It was the first interracial kiss on television.

[05:21.240 --> 05:26.400] Yeah, that wasn't, in the US it was, but there was a British one that preceded it,

[05:26.400 --> 05:27.400] you know?

[05:27.400 --> 05:28.400] Okay.

[05:28.400 --> 05:29.400] Yeah.

[05:29.400 --> 05:30.880] First US, first interracial kiss on US television.

[05:30.880 --> 05:31.880] Yeah.

[05:31.880 --> 05:32.880] Yeah.

[05:32.880 --> 05:33.880] Yeah, which was significant.

[05:33.880 --> 05:34.880] Absolutely.

[05:34.880 --> 05:35.880] Yeah.

[05:35.880 --> 05:39.580] And I think if I remember, I'm dredging up some old memories here, they wanted to do

[05:39.580 --> 05:48.920] multiple takes because they were very nervous because the people were very strict with what

[05:48.920 --> 05:53.520] you could have and what you can't have on the show in terms of that kind of thing and

[05:53.520 --> 05:55.380] sexual situations and all that.

[05:55.380 --> 06:01.140] So if I remember correctly, William Shatner actually screwed up all the other takes to

[06:01.140 --> 06:05.400] such a degree that they would never be able to use any of them except the one that you

[06:05.400 --> 06:06.400] saw.

[06:06.400 --> 06:07.400] Well, he and her did that.

[06:07.400 --> 06:08.400] They did it together.

[06:08.400 --> 06:09.400] Oh, even better.

[06:09.400 --> 06:10.400] Even better.

[06:10.400 --> 06:11.400] Even better.

[06:11.400 --> 06:12.400] Yeah.

[06:12.400 --> 06:13.400] That's actually accurate, Bob.

[06:13.400 --> 06:16.280] They deliberately screwed up all the alternate takes.

[06:16.280 --> 06:17.280] Good for them.

[06:17.280 --> 06:19.880] Because they knew the system so well, they knew how much time they had.

[06:19.880 --> 06:21.620] They couldn't keep doing this.

[06:21.620 --> 06:22.620] That was it.

[06:22.620 --> 06:23.620] Their time was allotted to do it.

[06:23.620 --> 06:28.680] So they had no choice in the editing room but to put that in or not show the episode,

[06:28.680 --> 06:29.680] you know?

[06:29.680 --> 06:30.680] Mm-hmm.

[06:30.680 --> 06:34.420] And I didn't realize that she put out albums throughout her life.

[06:34.420 --> 06:41.800] She did two albums, and one of them was standards, and then the other one was rock and roll.

[06:41.800 --> 06:48.960] But they were put out while she was filming Star Trek, and they have those themes, like

[06:48.960 --> 06:53.160] Down to Earth and Out of This World are the names of the albums.

[06:53.160 --> 06:57.320] And I can guarantee you that they are far superior to the albums that William Shatner

[06:57.320 --> 06:58.320] put out.

[06:58.320 --> 07:05.080] Bob, actually, I could do a better job.

[07:05.080 --> 07:09.280] If you want to know what we're talking about, go on YouTube and look up William Shatner

[07:09.280 --> 07:13.200] Tambourine Man, and you'll see everything that you need to know.

[07:13.200 --> 07:14.200] Yikes.

[07:14.200 --> 07:15.200] All right.

[07:15.200 --> 07:16.200] Let's move on.

Quickie with Bob: Friction (7:15)

- Friction: Atomic-scale friction between single-asperity contacts unveiled through in situ transmission electron microscopy[1]

[07:16.200 --> 07:17.640] Bob, you're going to start us off with a quickie.

[07:17.640 --> 07:18.640] Thank you, Steve.

[07:18.640 --> 07:19.640] Gird your loins, everyone.

[07:19.640 --> 07:22.160] This is your Quickie with Bob.

[07:22.160 --> 07:26.920] Friction in the news, specifically from the journal Nature Nanotechnology.

[07:26.920 --> 07:32.840] Recently, using an electron microscope, researchers were, for the first time, able to image two

[07:32.840 --> 07:37.240] surfaces coming into contact and sliding across each other with atomic resolution.

[07:37.240 --> 07:43.000] A professor of mechanical engineering and materials science, Guofeng Wang, said, "In

[07:43.000 --> 07:47.160] the study, we were able to actually see the sliding pathway of interface atoms and the

[07:47.160 --> 07:52.600] dynamic strain and stress evolution on the interface that has only previously been shown

[07:52.600 --> 07:53.600] by simulations."

[07:53.600 --> 07:58.560] Now, after seeing this atomic process of friction in the real world, researchers were able to

[07:58.560 --> 08:03.680] go back to the simulation to verify not only what the microscopic visualization showed,

[08:03.680 --> 08:08.760] but also understand more about the specific forces at play at the atomic scale.

[08:08.760 --> 08:13.720] Wang describes one of his main takeaways from this experiment when he said, "What we found

[08:13.720 --> 08:19.040] is that no matter how smooth and clean the surface is, friction still occurs at the atomic

[08:19.040 --> 08:20.040] level."

[08:20.040 --> 08:21.360] It's completely unavoidable.

[08:21.360 --> 08:25.680] However, this knowledge can lead to better lubricants and materials to minimize friction

[08:25.680 --> 08:29.760] and wear as much as possible, extending the life of mechanical systems.

[08:29.760 --> 08:34.240] And also, this new method can now apparently be applied to any material to learn more about

[08:34.240 --> 08:36.600] the role friction and wear plays on it.

[08:36.600 --> 08:40.320] And who knows what this research can do for Astroglide.

[08:40.320 --> 08:43.280] Loins un-girded, this has been your Quickie with Bob.

[08:43.280 --> 08:45.080] I hope it was good for you, too.

[08:45.080 --> 08:46.080] Jesus, Bob.

[08:46.080 --> 08:47.080] Hey, man.

[08:47.080 --> 08:48.080] It's thematic.

[08:48.080 --> 08:54.160] Yeah, it's only because you said loins un-girded right after you said Astroglide.

[08:54.160 --> 08:55.160] All right.

[08:55.160 --> 08:56.160] Oh, yes.

[08:56.160 --> 08:57.160] Thanks, Bob.

[08:57.160 --> 08:58.160] Sure.

News Items

The Neuroscience of Politics (8:59)

[08:58.160 --> 08:59.160] All right.

[08:59.160 --> 09:02.720] I'm going to start off the news items.

[09:02.720 --> 09:06.120] This is one about the neuroscience of politics.

[09:06.120 --> 09:07.120] Oh, geez.

[09:07.120 --> 09:08.120] Yeah.

[09:08.120 --> 09:09.120] Well, this is interesting.

[09:09.120 --> 09:17.080] And so there's a study that came out looking at fMRI scans of different people, subjects

[09:17.080 --> 09:23.000] that took a survey, answered a bunch of questions so that they could be characterized on a Likert

[09:23.000 --> 09:29.440] scale from one to six, from very liberal to very conservative.

[09:29.440 --> 09:33.480] And so they're just using that just one axis, liberal or conservative, which is a massive

[09:33.480 --> 09:36.120] oversimplification of the political landscape.

[09:36.120 --> 09:39.380] But whatever, that's the scale they used.

[09:39.380 --> 09:46.080] And comparing that to different functions in the brain, looking at functional connectivity

[09:46.080 --> 09:52.120] directly, under nine different tasks, one of which is doing nothing.

[09:52.120 --> 09:57.900] And a quote unquote task is just something, it's just a neurological environment that

[09:57.900 --> 09:59.800] you're putting the subject under.

[09:59.800 --> 10:04.040] For example, you could be showing them images of people expressing emotion.

[10:04.040 --> 10:06.960] That would be one quote unquote task, right?

[10:06.960 --> 10:09.200] Another one might be a memory task.

[10:09.200 --> 10:13.720] Another one was trying to get a reward by hitting a button as quickly as you could.

[10:13.720 --> 10:17.960] Now, none of these tasks had anything to do with politics or ideology.

[10:17.960 --> 10:23.720] They were considered politically neutral, but they just wanted to get the brain to light

[10:23.720 --> 10:27.640] up in different ways and see if there are statistical differences between liberal brains

[10:27.640 --> 10:29.800] and conservative brains, right?

[10:29.800 --> 10:31.680] That was the goal of this research.

[10:31.680 --> 10:37.760] This is not the first study to do this, although this is the first one to try to look at functional

[10:37.760 --> 10:44.520] connectivity. Previous research of the neuroanatomical correlates of political ideology, as we would

[10:44.520 --> 10:50.120] say, looked at more of like modules in the brain, like which piece of the brain is lighting

[10:50.120 --> 10:54.720] up versus, you know, this one is looking at different circuits in the brain, lighting

[10:54.720 --> 10:55.720] up.

[10:55.720 --> 10:56.720] You know, the differences are interesting.

[10:56.720 --> 11:02.020] Now, you know, this is a technically very complicated study and I don't have the expertise

[11:02.020 --> 11:06.720] to dissect it technically, like I don't know if they're using the right fMRI technique

[11:06.720 --> 11:12.120] or they used AI to sort of analyze the data and I have no idea if their analysis is valid

[11:12.120 --> 11:13.120] or not.

[11:13.120 --> 11:16.880] So I just wanted to talk conceptually about the research itself, you know, sort of just

[11:16.880 --> 11:22.980] take the results at face value for now, you know, it got through peer review and it will

[11:22.980 --> 11:26.680] go through further analysis and attempts at replication.

[11:26.680 --> 11:32.920] So we'll put that aside, the technical analysis for now and just see, I'm sorry, it was published

[11:32.920 --> 11:37.160] in the Proceedings of the National Academy of Science, right?

[11:37.160 --> 11:38.160] Right?

[11:38.160 --> 11:41.600] So, which is a good journal.

[11:41.600 --> 11:46.120] So let's back up a little bit and look at this research in general.

[11:46.120 --> 11:51.700] Are there brain differences between people with different political ideology?

[11:51.700 --> 11:57.680] So I've already alluded to one of the issues with this research paradigm and that is how

[11:57.680 --> 11:59.920] are you choosing the ideology to look at?

[11:59.920 --> 12:06.360] In this research, they did a one-dimensional liberal to conservative scale, right?

[12:06.360 --> 12:11.440] But we know that politics is about a lot more than that.

[12:11.440 --> 12:18.240] You know, we have a two-party system in the United States and so things tend to sort out,

[12:18.240 --> 12:23.980] you know, it's actually Democrat to Republican, but Democrats are at least several different

[12:23.980 --> 12:29.120] ideologies and Republicans are at least several different ideologies.

[12:29.120 --> 12:35.360] You know, there are sort of coalitions of ideologies and they're actually somewhat in

[12:35.360 --> 12:38.160] flux in the US in the last few years.

[12:38.160 --> 12:39.480] But that's not what they looked at.

[12:39.480 --> 12:40.480] They didn't look at Democrat versus Republican.

[12:40.480 --> 12:41.480] No, they didn't.

[12:41.480 --> 12:42.480] They looked at liberal versus conservative.

[12:42.480 --> 12:46.120] But the point of why, you know, what is, why choose that, you know?

[12:46.120 --> 12:50.160] Well, I think that that's the prevailing model across all political science.

[12:50.160 --> 12:57.400] I know that, but my point is, does that reflect reality or is that a cultural construct that

[12:57.400 --> 13:01.320] may not reflect any kind of neurological reality?

[13:01.320 --> 13:02.320] Oh, I see.

[13:02.320 --> 13:06.680] Yeah, I think the interesting thing is that it's a cultural construct that seems to hold

[13:06.680 --> 13:08.400] in most cultures.

[13:08.400 --> 13:11.600] Yeah, but so what parts of it though?

[13:11.600 --> 13:17.360] Because what, so what's, so for example, are we talking about socially liberal, right?

[13:17.360 --> 13:26.200] Or economically liberal or liberal in terms of foreign policy or what, you know, it breaks

[13:26.200 --> 13:32.060] down multiple different ways and they don't always align, you know?

[13:32.060 --> 13:39.120] And so you can define it in different ways and then you're looking at basically completely

[13:39.120 --> 13:42.320] different phenomena that you're just labeling liberal and labeling conservative.

[13:42.320 --> 13:47.680] I think the issue, I mean, obviously this is, you dug a lot deeper, but I think the

[13:47.680 --> 13:53.840] issue is that if we use that sort of, let's say, fiscal and socially liberal conservative

[13:53.840 --> 13:58.840] quad, you're going to find that there are people who are extremely liberal, which means

[13:58.840 --> 14:03.240] that they are both socially and financially or economically liberal.

[14:03.240 --> 14:06.320] And then you're going to find people who are extremely conservative.

[14:06.320 --> 14:10.400] So they are both financially and socially conservative and they're going to be the most

[14:10.400 --> 14:11.480] severe ends.

[14:11.480 --> 14:17.520] And so if you can take the most severe ends and almost caricature them as an archetype,

[14:17.520 --> 14:20.240] then you might have a better chance to see differences.

[14:20.240 --> 14:29.240] But I think, so they also did look at extremists versus moderates in this study and which to

[14:29.240 --> 14:35.760] me provokes yet another question, which is not answered by the data.

[14:35.760 --> 14:43.860] And that is, so is it, is an extreme liberal someone who is just very liberal or are they

[14:43.860 --> 14:49.680] a liberal who happens to have other cognitive qualities that make them extreme?

[14:49.680 --> 14:51.880] How do you even define extreme liberal?

[14:51.880 --> 14:55.920] Because that definitely, as you mentioned, is culture bound.

[14:55.920 --> 15:00.800] Like what we consider super liberal in America is like moderate in a lot of European countries.

[15:00.800 --> 15:04.520] Well, yeah, it's relative to the culture, but in this case, this is a study of Americans

[15:04.520 --> 15:09.040] and they looked at and they were using a survey.

[15:09.040 --> 15:13.600] You answer these questions and then we grade you on these questions, liberal to conservative.

[15:13.600 --> 15:18.620] You're right, that spectrum may be different in different countries.

[15:18.620 --> 15:21.840] They may use the labels differently too, which we won't get into.

[15:21.840 --> 15:22.960] That's a different thing.

[15:22.960 --> 15:29.720] But my point is like, are there extremists and extremists can end up as an independent

[15:29.720 --> 15:34.740] variable of whether they're liberal or conservative or are they just people who are really conservative

[15:34.740 --> 15:38.860] and people who are really liberal and maybe it's both.

[15:38.860 --> 15:39.860] Maybe it's both.

[15:39.860 --> 15:44.500] Maybe there are people who are, whatever ideology they have, they're going to be extreme, right?

[15:44.500 --> 15:53.040] And if they happen to fall on the conservative side, then they would rank as an extreme conservative.

[15:53.040 --> 15:57.000] That doesn't necessarily mean that they're really more conservative than a moderate conservative.

[15:57.000 --> 16:03.120] They're just conservative and they have a cognitive style that makes them extreme.

[16:03.120 --> 16:06.460] And this is just speculation because again, this study didn't really have any way of

[16:06.460 --> 16:10.960] sorting all this out, but it's just sort of treating it as one dimensional.

[16:10.960 --> 16:13.520] Are they all neuroscientists on the study?

[16:13.520 --> 16:16.540] Are there any psychologists that are in there? Political neuroscience?

[16:16.540 --> 16:18.080] They are political neuroscientists.

[16:18.080 --> 16:19.080] Political neuroscientists.

[16:19.080 --> 16:20.080] Interesting.

[16:20.080 --> 16:21.080] Okay.

[16:21.080 --> 16:24.240] But with all that in mind, let's look a little bit at the data and then you'll also see what

[16:24.240 --> 16:25.920] I mean a little bit.

[16:25.920 --> 16:31.760] So the bottom line is what they found is that all nine states, all nine tasks that they

[16:31.760 --> 16:37.980] gave them showed statistical differences between people who ranked liberal and people who ranked

[16:37.980 --> 16:42.920] conservative on this, on their study, which is interesting.

[16:42.920 --> 16:47.400] Why would, you know, they basically chose nine tasks mainly because they could do that,

[16:47.400 --> 16:48.400] right?

[16:48.400 --> 16:50.120] There are just easy ways to do them for fMRI studies.

[16:50.120 --> 16:54.000] We'll give them a reward task and a memory task and whatever.

[16:54.000 --> 16:58.320] I mean, they weren't picked because they thought they would relate to ideology.

[16:58.320 --> 17:01.400] In fact, they thought that they wouldn't relate to ideology.

[17:01.400 --> 17:02.800] That's why they picked them.

[17:02.800 --> 17:08.840] So why did they all show statistical differences between liberal and conservative?

[17:08.840 --> 17:17.400] The authors suspect that it's because that there are just some fundamental differences

[17:17.400 --> 17:23.800] between the liberal to conservative neurological function, if you will, that just shows up

[17:23.800 --> 17:27.040] in every fMRI you do, even when they're doing nothing.

[17:27.040 --> 17:31.120] You know, it's just, you know, the brains are just functioning differently and it just

[17:31.120 --> 17:34.520] contaminates every state that you look at.

[17:34.520 --> 17:39.440] But did they only look at people who, like on those self-report surveys, who kind of

[17:39.440 --> 17:43.280] fell at a certain threshold of liberality or conservatism?

[17:43.280 --> 17:47.760] No, again, there was a Likert scale, a six-point scale, so you could have been in the middle,

[17:47.760 --> 17:48.760] right?

[17:48.760 --> 17:49.760] Right.

[17:49.760 --> 17:50.760] But they didn't use anybody who's like, meh?

[17:50.760 --> 17:56.000] Well, yeah, it was mild liberal, liberal, extreme liberal, right?

[17:56.000 --> 17:59.200] Mild conservative, conservative, extreme conservative.

[17:59.200 --> 18:01.580] Those are the six points on this scale.

[18:01.580 --> 18:05.660] And they found significant differences between mild liberals and mild conservatives across

[18:05.660 --> 18:06.660] all six?

[18:06.660 --> 18:08.160] Because that's surprising.

[18:08.160 --> 18:13.880] No, I think if they like included all of the liberals and included all of the conservatives,

[18:13.880 --> 18:15.880] they showed differences.

[18:15.880 --> 18:19.440] But they also looked at extremists versus moderates.

[18:19.440 --> 18:23.780] And for that, so I'm going to back up a little bit and just ask you guys a question.

[18:23.780 --> 18:30.520] What do you think is the factor that predicts somebody's political ideology more than any

[18:30.520 --> 18:31.520] other factor?

[18:31.520 --> 18:32.520] Because this could be anything.

[18:32.520 --> 18:35.960] You mean like, for example, like education, something like that?

[18:35.960 --> 18:36.960] Yeah.

[18:36.960 --> 18:37.960] Age.

[18:37.960 --> 18:38.960] Like a demographic factor?

[18:38.960 --> 18:39.960] I'm going to say where they're born.

[18:39.960 --> 18:40.960] Family history.

[18:40.960 --> 18:41.960] Bob's correct.

[18:41.960 --> 18:44.800] You know, pretty much, it's their parents, right?

[18:44.800 --> 18:51.420] So whatever your parents' ideology are, that is the strongest predictor of your ideology.

[18:51.420 --> 18:55.600] It's not where you're born, because if you're a liberal born in a red state, you're still

[18:55.600 --> 18:59.360] a liberal parent, you're still going to be liberal, not red, right?

[18:59.360 --> 19:01.000] Not conservative.

[19:01.000 --> 19:03.280] And then, of course, this cuts both ways.

[19:03.280 --> 19:07.060] This doesn't tell you if it's nature versus nurture, because you could say that they inherited

[19:07.060 --> 19:11.720] the genes from their parents, but they also were raised by their parents to be liberal

[19:11.720 --> 19:13.080] or to be conservative.

[19:13.080 --> 19:14.620] And so you don't know.

[19:14.620 --> 19:19.340] But there are twin studies, you know, you do like twins separated at birth, and that

[19:19.340 --> 19:21.940] shows that it's at least partly genetic.

[19:21.940 --> 19:26.800] It does appear to be at least partly genetic, but not fully, like pretty much everything

[19:26.800 --> 19:27.800] with the brain, right?

[19:27.800 --> 19:33.440] It's a combination of genetic and, you know, hardwiring and also environmental factors.

[19:33.440 --> 19:40.040] Okay, so with that in mind, they said, how much does every, you know, all of these states

[19:40.040 --> 19:44.640] predict whether or not somebody will be on the liberal or the conservative end?

[19:44.640 --> 19:51.800] And also, does it predict who will be extreme versus moderate, like who will, you know,

[19:51.800 --> 19:57.160] counting extreme as the two ends of the spectrum, the very liberal and very conservative?

[19:57.160 --> 20:02.280] So first of all, they found that overall, the functional connectivity patterns were

[20:02.280 --> 20:08.760] as predictive as the ideology of the parents, which is like the gold standard.

[20:08.760 --> 20:12.120] So it was as predictive as parental ideology.

[20:12.120 --> 20:17.600] And for the tasks that they looked at, there were three that correlated the most.

[20:17.600 --> 20:20.000] So there were three standouts.

[20:20.000 --> 20:24.320] One was the empathy task, which was looking at pictures of people who are expressing an

[20:24.320 --> 20:25.320] emotion.

[20:25.320 --> 20:28.800] Right, so it was supposed to be like, how much are you reacting to the emotion that

[20:28.800 --> 20:29.800] you're seeing?

[20:29.800 --> 20:32.800] A.k.a. bleeding-heart liberals.

[20:32.800 --> 20:33.800] Yeah.

[20:33.800 --> 20:35.320] Well, that's not correct.

[20:35.320 --> 20:36.320] Oh, really?

[20:36.320 --> 20:42.120] What that correlated with is being politically moderate or ideologically moderate.

[20:42.120 --> 20:46.560] The reward task, on the other hand, correlated with extremism.

[20:46.560 --> 20:53.200] You know, the reward task was trying to win a prize by hitting the button fast.

[20:53.200 --> 20:59.760] And a retrieval task, which involved, you know, it was a memory retrieval thing.

[20:59.760 --> 21:06.480] And that task was the most different among liberal to conservative spectrum, right?

[21:06.480 --> 21:08.400] So you could see that you could predict.

[21:08.400 --> 21:09.400] In what direction?

[21:09.400 --> 21:13.320] I'm just saying, like, you could, you know, you can predict based upon the way their brains

[21:13.320 --> 21:17.320] looked on that task, if they're liberal or conservative more than other tasks.

[21:17.320 --> 21:21.060] Yeah, but I'm saying, like, what was the pattern?

[21:21.060 --> 21:25.320] It's a statistical thing using AI looking at the patterns on fMRI scans.

[21:25.320 --> 21:28.360] So I'd have to show you pictures of fMRI scans.

[21:28.360 --> 21:33.360] Okay, so they weren't using predefined circuitry that they know is like, okay, this is a reward

[21:33.360 --> 21:38.520] circuitry, or this is a retrieval circuitry, and we want to see who is loading more

[21:38.520 --> 21:39.840] on it, and who's loading less.

[21:39.840 --> 21:42.480] No, they were just saying, what's happening in the brain when we have them do this?

[21:42.480 --> 21:47.560] Oh, and now let's see if they sort out into different patterns.

[21:47.560 --> 21:54.680] Can we use those patterns to predict how they scored on the test, you know, on the liberal

[21:54.680 --> 21:55.680] to conservative survey?

[21:55.680 --> 21:56.680] Interesting.

[21:56.680 --> 21:59.720] So it's almost like it's meaningful insofar as it has predictive power, but it's not really

[21:59.720 --> 22:02.160] meaningful insofar as it tells us anything.

[22:02.160 --> 22:03.160] Right.

[22:03.160 --> 22:04.840] We don't know what it tells us.

[22:04.840 --> 22:09.480] So this is really hypothesis hunting, if you will, because they're just saying, hey, what's

[22:09.480 --> 22:13.040] going on in the brain when we give liberals and conservatives different tasks?

[22:13.040 --> 22:14.760] Oh, I wonder what that means.

[22:14.760 --> 22:18.720] What does it mean that certain patterns in your brain when you're doing a reward task

[22:18.720 --> 22:22.480] predict if you're an extremist or a moderate, you know?

[22:22.480 --> 22:27.560] There's also no way to know if we're just loading on a completely different construct

[22:27.560 --> 22:31.560] that's, like, exactly, a confounding variable, kind of like those old studies where it's

[22:31.560 --> 22:35.520] like, we took smokers and compared them to non-smokers, and we asked them X, Y and Z.

[22:35.520 --> 22:38.840] And it's like, well, but maybe it's because the smokers drink more coffee, or maybe it's

[22:38.840 --> 22:44.360] because of other problems. I'm sure there are confounding factors galore in this kind of study because we

[22:44.360 --> 22:45.360] don't get we don't.

[22:45.360 --> 22:49.680] This is what I was alluding to at the top of this: we don't know that we are

[22:49.680 --> 22:56.120] dealing with fundamental phenomena or just, you know, one or two layers removed from those

[22:56.120 --> 22:59.120] fundamental phenomena.

[22:59.120 --> 23:02.000] What is it about someone that makes them liberal?

[23:02.000 --> 23:05.880] Like liberalness may not be a thing unto itself neurologically.

[23:05.880 --> 23:10.400] It's just a cultural manifestation of more fundamental neurological functions.

[23:10.400 --> 23:13.360] Like you could think of things like empathy, for example.

[23:13.360 --> 23:14.600] And but then what's that?

[23:14.600 --> 23:15.600] What is empathy?

[23:15.600 --> 23:17.960] Is that even a fundamental neurological function?

[23:17.960 --> 23:21.800] Or is that a manifestation of other things that are happening in the brain, other, you

[23:21.800 --> 23:23.840] know, circuits that are firing?

[23:23.840 --> 23:28.880] And so we're trying to dig down, but we are not at the base level yet.

[23:28.880 --> 23:29.880] No.

[23:29.880 --> 23:35.320] And but there's something about the there's like a face validity kind of component to

[23:35.320 --> 23:40.080] this, which has long interested me, and I'm assuming it really interests the authors too,

[23:40.080 --> 23:44.720] that there's something that feels fundamental about ideology.

[23:44.720 --> 23:49.680] Because once you sort of start to develop an awakening into ideology, you know, as a

[23:49.680 --> 23:52.520] child, you don't really know what's going on in the world.

[23:52.520 --> 23:57.400] But once you start to say, this becomes part of my identity, it actually is very fundamental

[23:57.400 --> 23:59.780] to a lot of people's identities.

[23:59.780 --> 24:08.340] It's very rare for people to switch parties, unless there's some sort of personal insult

[24:08.340 --> 24:12.600] to their reasoning, like their party fails them.

[24:12.600 --> 24:13.600] Yeah.

[24:13.600 --> 24:17.960] Or like they were going through a realignment like we're doing now.

[24:17.960 --> 24:18.960] Exactly.

[24:18.960 --> 24:19.960] Yeah.

[24:19.960 --> 24:25.040] So this study does not tell us what the arrow of causation is, right? It's looking

[24:25.040 --> 24:26.200] only for correlation.

[24:26.200 --> 24:30.480] So it doesn't tell us that people are liberal because their brains function this way.

[24:30.480 --> 24:33.120] It could be that their brains function this way because they're liberal.

[24:33.120 --> 24:34.120] Right.

[24:34.120 --> 24:35.120] Because these are pathways.

[24:35.120 --> 24:36.120] These aren't hard.

[24:36.120 --> 24:37.400] Like this is just use and disuse kind of stuff.

[24:37.400 --> 24:38.400] Right.

[24:38.400 --> 24:39.400] Exactly.

[24:39.400 --> 24:40.400] This could just all be learned.

[24:40.400 --> 24:42.820] You know, this could be the patterns of functioning in the brain because you were

[24:42.820 --> 24:47.640] raised this way, rather than predisposed to being liberal or conservative.

[24:47.640 --> 24:48.640] Doesn't answer that.

[24:48.640 --> 24:53.440] I also think it doesn't answer if these things that we're looking at, like the circuits in

[24:53.440 --> 24:59.080] the brain that we're looking at, if they are fundamental to ideology or incidental to ideology.

[24:59.080 --> 25:00.080] We don't know that.

[25:00.080 --> 25:03.560] But there's one other way to sort of look at this data, which is interesting.

[25:03.560 --> 25:07.440] And that is, so what are the parts of the brain that were different?

[25:07.440 --> 25:11.880] Let's just ask that question from liberal to conservative, not necessarily the functional

[25:11.880 --> 25:17.080] circuits or what tasks they were doing, but just what parts of the brain were involved.

[25:17.080 --> 25:21.520] And they were mostly the hippocampus, the amygdala and the frontal lobes.

[25:21.520 --> 25:26.480] So those are all involved with emotional processing.

[25:26.480 --> 25:31.760] And that's very provocative, in my opinion, because that suggests that ideology is really

[25:31.760 --> 25:36.480] tied very strongly to emotional processing.

[25:36.480 --> 25:40.560] It wasn't so much the more rational cognitive parts of the brain, it was the emotional parts

[25:40.560 --> 25:46.240] of the brain that were able to predict liberal to conservative, extreme to moderate.

[25:46.240 --> 25:51.160] So it's really, you know, political ideology may say more about our emotional makeup than

[25:51.160 --> 25:56.780] our cognitive style, which is interesting to think about, which kind of does jibe with

[25:56.780 --> 26:04.560] other, you know, other research that shows that we tend to come to opinions that are

[26:04.560 --> 26:13.060] emotionally salient to us based upon our emotional instincts, and then post hoc rationalize them

[26:13.060 --> 26:19.080] with motivated reasoning, which is why it's so hard to resolve political or ideological

[26:19.080 --> 26:21.920] or religious, you know, disagreements because people aren't reasoning their way to them

[26:21.920 --> 26:22.920] in the first place.

[26:22.920 --> 26:28.400] They're just using motivated reasoning to backfill their emotional gut instinct.

[26:28.400 --> 26:31.160] And that's their worldview, what feels right to them.

[26:31.160 --> 26:35.340] Now, this is the way it is because it feels right to me, and I will make sure I figure

[26:35.340 --> 26:37.240] out a way to make it make sense.

[26:37.240 --> 26:42.560] And it's why sometimes you have to shake your head at the motivated reasoning that the quote

[26:42.560 --> 26:44.040] unquote other side is engaging in.

[26:44.040 --> 26:46.000] But of course, we all do this.

[26:46.000 --> 26:48.280] This is a human condition.

[26:48.280 --> 26:52.400] It also kind of speaks to I mean, I'm curious if you agree, but it speaks to, I think, a

[26:52.400 --> 26:57.880] fundamental construct that's involved in political discourse or political thinking,

[26:57.880 --> 26:59.760] which is moral reasoning.

[26:59.760 --> 27:03.040] And moral reasoning is fundamentally emotive.

[27:03.040 --> 27:07.720] Like it is cognitive and emotive, but you can't strip emotive reasoning away from moral

[27:07.720 --> 27:08.720] philosophy.

[27:08.720 --> 27:09.720] It's part of it.

[27:09.720 --> 27:10.720] Totally.

[27:10.720 --> 27:11.720] Yeah, totally.

[27:11.720 --> 27:12.720] Yeah.

[27:12.720 --> 27:13.720] Like we feel injustice.

[27:13.720 --> 27:14.720] Yes.

[27:14.720 --> 27:15.720] Absolutely.

[27:15.720 --> 27:16.720] And then we rationalize why that's unjust.

[27:16.720 --> 27:17.720] Yeah.

[27:17.720 --> 27:18.720] Because if we feel it first, absolutely.

[27:18.720 --> 27:24.760] If we were totally cognitive and like a kind of extreme example of like a cool, cold, calculated

[27:24.760 --> 27:28.000] cognitive, the humanism would be not there.

[27:28.000 --> 27:29.840] And that's also dangerous.

[27:29.840 --> 27:30.840] Mm hmm.

[27:30.840 --> 27:31.840] Yeah.

[27:31.840 --> 27:34.880] I mean, that's why I kind of like, you know, science fiction shows that explore that through

[27:34.880 --> 27:40.000] characters that have different emotional makeup than humans like Vulcans or androids or whatever,

[27:40.000 --> 27:45.480] because it's like they are they have only rational reasoning, no emotional reasoning.

[27:45.480 --> 27:46.960] And it's just a good thought experiment.

[27:46.960 --> 27:48.840] What would that result in?

[27:48.840 --> 27:55.440] And even to the point of taking what seem like really extreme moral positions, but they

[27:55.440 --> 27:57.240] make perfect rational sense.

[27:57.240 --> 27:58.240] Right.

[27:58.240 --> 27:59.240] Exactly.

[27:59.240 --> 28:00.240] But they don't feel right.

[28:00.240 --> 28:01.240] They don't feel right to us.

[28:01.240 --> 28:02.240] So they got to be wrong.

[28:02.240 --> 28:03.240] All right.

[28:03.240 --> 28:04.240] Let's move on.

[28:04.240 --> 28:05.240] Very fascinating.

[28:05.240 --> 28:06.240] So this is something that I sort of follow.

[28:06.240 --> 28:09.320] And so I'm sure we'll be talking about this again in the future.

[28:09.320 --> 28:13.520] And this is one tiny slice of an obviously very complicated phenomenon.

[28:13.520 --> 28:17.440] No one study is going to give us the answer as to what like a liberal brain is or a conservative

[28:17.440 --> 28:19.860] brain or even if there is such a thing.

[28:19.860 --> 28:21.520] But it is very interesting.

[28:21.520 --> 28:22.520] All right.

Cozy Lava Tubes (28:21)

[28:22.520 --> 28:28.280] Jay, this is cool, actually, literally and figuratively cool.

[28:28.280 --> 28:32.960] Tell us about lava tubes and the temperature of them.

[28:32.960 --> 28:33.960] All right.

[28:33.960 --> 28:36.400] First, I want to start by saying, Bob, just calm down.

[28:36.400 --> 28:37.400] Yep.

[28:37.400 --> 28:40.040] I tried to select this topic for this week.

[28:40.040 --> 28:41.040] I was shut down.

[28:41.040 --> 28:44.360] So I was very surprised when you said Jay and not Bob.

[28:44.360 --> 28:46.080] Bob, I need you to breathe.

[28:46.080 --> 28:47.080] Okay.

[28:47.080 --> 28:48.080] I'm breathing, man.

[28:48.080 --> 28:49.080] Just get your facts right, baby.

[28:49.080 --> 28:50.080] All right.

[28:50.080 --> 28:57.200] So we've talked about lava tubes as potential locations for future moon habitats, haven't

[28:57.200 --> 28:58.200] we?

[28:58.200 --> 28:59.200] Quite a bit.

[28:59.200 --> 29:00.200] Yes.

[29:00.200 --> 29:04.920] Well, a little later in this news item, I'm going to blow your mind about lava tubes.

[29:04.920 --> 29:09.760] But let me fill your brain a little bit with some interesting things that'll make you appreciate

[29:09.760 --> 29:10.940] it even more.

[29:10.940 --> 29:14.860] So I recently talked about, I think it was last week, about the Artemis 1 mission that

[29:14.860 --> 29:16.840] could be launching very soon.

[29:16.840 --> 29:21.560] This mission sends an uncrewed command module in orbit around the moon.

[29:21.560 --> 29:26.400] If everything goes well, the Artemis 2 mission, which will be crewed, can launch as early

[29:26.400 --> 29:27.760] as 2025.

[29:27.760 --> 29:32.720] It's NASA's intention, this is important, it's their intention to send people to the

[29:32.720 --> 29:36.920] surface of the moon, build habitats, and have people live there.

[29:36.920 --> 29:38.120] That's what they want.

[29:38.120 --> 29:40.560] And I couldn't agree with this more.

[29:40.560 --> 29:43.640] This is the best thing that I think they could be doing right now.

[29:43.640 --> 29:47.480] I imagine that this whole effort is going to be similar to how people stay on the space

[29:47.480 --> 29:48.480] station, right?

[29:48.480 --> 29:51.400] You know, they rotate crew on and off.

[29:51.400 --> 29:54.800] So they probably will rotate crew to and from the moon.

[29:54.800 --> 29:58.480] Some of them will be staying for longer periods of time.

[29:58.480 --> 30:01.900] They'll conduct experiments and at some point they'll start building a place for future

[30:01.900 --> 30:03.380] visitors to live.

[30:03.380 --> 30:08.640] There's a ton of details that we all have to consider about people living on the moon,

[30:08.640 --> 30:09.640] especially NASA.

[30:09.640 --> 30:12.760] NASA has been thinking about this for a long time.

[30:12.760 --> 30:13.760] First, what?

[30:13.760 --> 30:16.520] The moon has no atmosphere, almost.

[30:16.520 --> 30:18.480] There's a tiny little bit of atmosphere on the moon.

[30:18.480 --> 30:22.760] It's about as dense as the atmosphere that's around the space station, which is in low

[30:22.760 --> 30:23.760] Earth orbit.

[30:23.760 --> 30:28.080] There is atmosphere there, but it is essentially a vacuum.

[30:28.080 --> 30:29.080] Not a perfect vacuum.

[30:29.080 --> 30:30.520] It's fascinating, too.

[30:30.520 --> 30:31.520] It's tiny, tiny, tiny, tiny.

[30:31.520 --> 30:32.520] Yeah.

[30:32.520 --> 30:37.000] I mean, one example I heard, Jay, if you took the air that's in like a baseball or football

[30:37.000 --> 30:41.600] stadium in the United States, that kind of size, if you take the air that's inside that

[30:41.600 --> 30:45.160] and spread it around the moon, that's the density we're talking about.

[30:45.160 --> 30:46.160] Yeah.

[30:46.160 --> 30:47.160] It's nothing.

[30:47.160 --> 30:48.160] Quite thin, but fascinating, though.

[30:48.160 --> 30:49.160] It's a thing.

[30:49.160 --> 30:50.160] It's a thing.

[30:50.160 --> 30:54.920] So, Bob, as a point of curiosity, an average human can stay conscious for about 20 seconds

[30:54.920 --> 30:55.920] in a vacuum.

[30:55.920 --> 30:56.920] Yeah.

[30:56.920 --> 30:57.920] About.

[30:57.920 --> 31:03.920] And the next thing about the moon that we have to be concerned with is the temperature.

[31:03.920 --> 31:05.800] The moon has extreme temperatures.

[31:05.800 --> 31:11.880] The daytime temperature there is 260 degrees Fahrenheit or 126 degrees Celsius.

[31:11.880 --> 31:19.720] Nighttime, it goes way down to minus 280 degrees Fahrenheit or minus 173 degrees Celsius.

[31:19.720 --> 31:21.520] Super hot, super cold.

[31:21.520 --> 31:27.940] So with no atmosphere or magnetosphere, visitors on the moon will also be exposed to solar

[31:27.940 --> 31:30.020] wind and cosmic rays.

[31:30.020 --> 31:34.500] This means that the moon's habitat has to provide a lot of amenities in order for people

[31:34.500 --> 31:36.480] to stay for long periods of time.

[31:36.480 --> 31:41.840] So all that said, keeping in mind how hostile the surface of the moon is, it's looking like

[31:41.840 --> 31:44.920] lava tubes are even more awesome than we thought.

[31:44.920 --> 31:50.840] NASA figured out that some lava tubes have a consistent inner temperature of 63 degrees

[31:50.840 --> 31:54.160] Fahrenheit or 17 degrees Celsius.

[31:54.160 --> 31:55.920] Do I need to repeat that?

[31:55.920 --> 31:57.320] That's amazing.

[31:57.320 --> 31:58.320] Amazing.

[31:58.320 --> 32:00.360] It's like the perfect temperature.

[32:00.360 --> 32:03.960] It's the perfect freaking temperature, you know, or within 10 degrees of the perfect

[32:03.960 --> 32:07.000] temperature for people to live.

[32:07.000 --> 32:10.440] It's like the perfect fall day, let me put it to you that way.
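
Editorial note: the temperature pairs quoted in this segment can be cross-checked with the standard Fahrenheit to Celsius formula. The snippet below is a minimal sketch rather than anything taken from the NASA source; the function name and the labels are made up for illustration, and the Fahrenheit inputs are simply the figures as spoken.

```python
def fahrenheit_to_celsius(deg_f: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius: C = (F - 32) * 5/9."""
    return (deg_f - 32.0) * 5.0 / 9.0


# Fahrenheit figures as quoted in the segment (labels are illustrative only).
quoted_fahrenheit = {
    "lunar daytime surface": 260.0,
    "lunar nighttime surface": -280.0,
    "shaded pit interior": 63.0,
}

for label, deg_f in quoted_fahrenheit.items():
    deg_c = fahrenheit_to_celsius(deg_f)
    print(f"{label}: {deg_f:.0f} F is about {deg_c:.1f} C")
```

The conversion gives roughly 126.7, -173.3 and 17.2 degrees Celsius, so the spoken Celsius values (126, minus 173 and 17) agree with the Fahrenheit ones to within a degree.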

[32:10.440 --> 32:19.360] These lava tubes can be as big as 1,600 to 3,000 feet or 500 to 900 meters in diameter,

[32:19.360 --> 32:23.240] which is very, very large, which is fascinating as well.

[32:23.240 --> 32:26.580] There's a reason why lava tubes are large on the moon.

[32:26.580 --> 32:28.040] It's because there's less gravity, right?

[32:28.040 --> 32:31.360] So the more gravity a planet has, the smaller the lava tubes get.

[32:31.360 --> 32:35.680] Well, the moon doesn't have a lot of gravity, so the lava tubes got to be really big.

[32:35.680 --> 32:41.960] Now lava tubes can also, if we build habitats in them, they also can help block harmful

[32:41.960 --> 32:46.760] effects of radiation and micrometeor impact, which happens quite often on the moon.

[32:46.760 --> 32:50.240] It might even be possible to pressurize a lava tube.

[32:50.240 --> 32:55.840] Even if like we can't pressurize a lava tube, for example, there's still a massive benefit

[32:55.840 --> 32:58.880] to building a habitat inside of a lava tube itself.

[32:58.880 --> 33:02.960] So first of all, who would have thought that lava tubes have a cozy temperature?

[33:02.960 --> 33:06.040] That's the thing that I've been rattling around in my head the last few days.

[33:06.040 --> 33:11.600] I just simply can't believe that these things are a perfect temperature for humans to live

[33:11.600 --> 33:12.600] at.

[33:12.600 --> 33:18.200] Now NASA figured this out by analyzing data from the Diviner Lunar Radiometer that's onboard

[33:18.200 --> 33:20.480] the Lunar Reconnaissance Orbiter.

[33:20.480 --> 33:25.960] The data shows that the consistent lunar cycle, which is 15 straight days of light and then

[33:25.960 --> 33:30.880] 15 days of dark, the Diviner instrument measured the temperature of the lunar surface for over

[33:30.880 --> 33:32.120] 11 years.

[33:32.120 --> 33:37.640] And when the sunlight is hitting the surface, the temperature, like I said before, it skyrockets

[33:37.640 --> 33:38.640] way up.

[33:38.640 --> 33:43.040] And then when it gets dark, the temperature plummets very quickly way, way down to a very,

[33:43.040 --> 33:44.040] very low temperature.

[33:44.040 --> 33:47.840] So there's things that are called pits that are on the moon, and most of these were likely

[33:47.840 --> 33:49.800] created by meteor strikes, right?

[33:49.800 --> 33:56.960] Now 16 of these pits so far that have been discovered likely dropped down into a lava

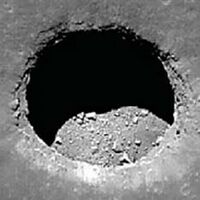

[33:56.960 --> 33:57.960] tube, right?

[33:57.960 --> 33:59.840] So there's a meteor strike.

[33:59.840 --> 34:01.640] It creates a hole.

[34:01.640 --> 34:05.600] That hole cracks into a lava tube that was below the surface, right?

[34:05.600 --> 34:07.040] Can you visualize that?

[34:07.040 --> 34:14.720] Sixteen of them are probably collapsed into a lava tube, but there's no reason to think

[34:14.720 --> 34:19.920] that they're from meteor strikes, it's just that the ground over the lava tube collapsed

[34:19.920 --> 34:21.080] into the lava tube.

[34:21.080 --> 34:23.560] Oh, it sounded to me like those were meteor strikes.

[34:23.560 --> 34:27.040] No, the other ones, the other pits are caused by meteor strikes.

[34:27.040 --> 34:35.320] All right, but the important fact here is that they collapsed down into a lava tube

[34:35.320 --> 34:36.320] for some reason, right?

[34:36.320 --> 34:37.320] Yeah.

[34:37.320 --> 34:38.320] So you have a hole.

[34:38.320 --> 34:42.960] The ceiling of the lava tube collapsed, you know, fell in because it was unstable, whatever.

[34:42.960 --> 34:48.400] It could have been from a nearby impact, you know, shook it and cracked the rocks, whatever.

[34:48.400 --> 34:51.040] Just something happened and it eventually collapsed down.

[34:51.040 --> 34:53.720] Now, the pits have something, though, that's important.

[34:53.720 --> 34:57.920] They have a protective overhang that blocks some of the sunlight, right?

[34:57.920 --> 35:00.480] That's critical, it seems, for this cozy temperature.

[35:00.480 --> 35:01.640] That seems very critical.

[35:01.640 --> 35:06.900] Yeah, this, I guess, Bob, without that rocky overhang that's, you know, partly covering

[35:06.900 --> 35:12.040] up the hole that was made, things wouldn't behave the exact right way in order to create

[35:12.040 --> 35:14.000] this nice, even temperature.

[35:14.000 --> 35:15.320] But so it does a couple of things.

[35:15.320 --> 35:20.560] It blocks light from coming in and it also inhibits retained heat from leaving too fast.

[35:20.560 --> 35:24.400] Now, this is probably why the temperature stays at such a nice temperature.

[35:24.400 --> 35:30.160] This is called blackbody equilibrium, by the way, with a constant temperature of 17 degrees

[35:30.160 --> 35:32.740] Celsius or 63 degrees Fahrenheit.

[35:32.740 --> 35:38.300] There's less than, and this is mind blowing, there's less than a one degree Celsius variation

[35:38.300 --> 35:40.640] throughout the 30-day lunar cycle.

[35:40.640 --> 35:41.720] That's amazing.

[35:41.720 --> 35:43.280] How efficient that is.

[35:43.280 --> 35:48.840] So if we get lucky, one of these pits will indeed connect to a preexisting lava tube.

[35:48.840 --> 35:51.600] And if we find that, we're in business.

[35:51.600 --> 35:58.320] Yeah, they also, their simulations also show that that one degree variance, that 63 degrees

[35:58.320 --> 36:03.720] or 17 degrees Celsius with one degree Celsius variance, probably holds true throughout the

[36:03.720 --> 36:05.520] entire lava tube.

[36:05.520 --> 36:07.280] Yeah, that's what I was wondering.

[36:07.280 --> 36:14.560] So now we have a lava tube that could be pretty long, could be very long, and it has a pretty

[36:14.560 --> 36:15.560] large diameter.

[36:15.560 --> 36:19.720] Huge, yeah, because low gravity can make them grow much bigger than anything found on

[36:19.720 --> 36:20.720] the Earth.

[36:20.720 --> 36:25.160] We're absolutely going to investigate these lava tubes and these pits and see what we

[36:25.160 --> 36:26.160] find.

[36:26.160 --> 36:31.960] And if things happen correctly the way that we want them to, then we most definitely will

[36:31.960 --> 36:34.680] be building some type of habitat inside of one of these.

[36:34.680 --> 36:35.680] It's too good.

[36:35.680 --> 36:36.680] It's just too many benefits.

[36:36.680 --> 36:41.600] It really seems like a no-brainer in a lot of ways because the surface of the moon is

[36:41.600 --> 36:44.920] far deadlier than people generally can appreciate.

[36:44.920 --> 36:48.920] Jay, you mentioned the micrometeoroids, absolutely.

[36:48.920 --> 36:53.160] These things can come in and if you get hit, you will be taken out.

[36:53.160 --> 36:57.980] I don't care what you're wearing, but not only that, even if it hits near you, the debris

[36:57.980 --> 37:01.400] that's kicked up can cause damage, can ruin habitats.

[37:01.400 --> 37:02.880] So you've got that.

[37:02.880 --> 37:07.880] You mentioned the radiation, cosmic radiation, solar radiation, and also the radiation that's

[37:07.880 --> 37:13.000] created at your feet by the other radiation that's hitting the ground by your feet can

[37:13.000 --> 37:15.240] also do more damage.

[37:15.240 --> 37:19.280] And the other thing, Jay, you didn't quite mention, moon dust is horrible.

[37:19.280 --> 37:25.360] The astronauts hated, hated it more than Darth Vader when he was a kid, he was a punk.

[37:25.360 --> 37:26.360] And a kid.

[37:26.360 --> 37:27.360] And a kid.

[37:27.360 --> 37:32.960] And they hated it more than he hated sand because that stuff, think about it, you've

[37:32.960 --> 37:33.960] got-

[37:33.960 --> 37:34.960] It's abrasive, I know, it's sharp.

[37:34.960 --> 37:35.960] It's sharp edges.

[37:35.960 --> 37:36.960] There's no weathering.

[37:36.960 --> 37:37.960] It's everywhere.

[37:37.960 --> 37:38.960] There's no atmosphere.

[37:38.960 --> 37:39.960] There's no water.

[37:39.960 --> 37:40.960] It's very sharp.

[37:40.960 --> 37:41.960] You breathe that in, not good.

[37:41.960 --> 37:42.960] And it also gets everywhere.

[37:42.960 --> 37:45.920] And we got to get, you know, people are going to want to get away.

[37:45.920 --> 37:47.160] You cannot stay there.

[37:47.160 --> 37:50.580] Yeah, you can stay there for three days like our astronauts did.

[37:50.580 --> 37:51.580] That's fine.

[37:51.580 --> 37:54.020] But if you're going to stay there for longer than that, you got to get out of that.

[37:54.020 --> 37:57.080] That is a horrible place to be for an extended period of time.

[37:57.080 --> 37:58.840] And it's like right there waiting for us.

[37:58.840 --> 38:00.880] Now it's even more comfortable than we thought.

[38:00.880 --> 38:04.380] You know, it's just a much better place, it's much safer.

[38:04.380 --> 38:09.520] To me, I mean, you're going to spend the resources and the money to dig deep holes and bury yourself

[38:09.520 --> 38:11.080] under the regolith that way.

[38:11.080 --> 38:15.320] I mean, when there's places just waiting for you that are better, gargantuan.

[38:15.320 --> 38:17.960] Now Bob, how about a pressurized lava tube?

[38:17.960 --> 38:18.960] Sure.

[38:18.960 --> 38:23.440] Imagine if there's one that's deep enough that it can hold pressure.

[38:23.440 --> 38:28.720] All you'd really need to do is build two end caps, airlocks, right, that would contain

[38:28.720 --> 38:30.520] the airlocks.

[38:30.520 --> 38:38.040] And you might have to smooth out the interior surface and maybe even coat it to reduce any

[38:38.040 --> 38:39.040] leaking.

[38:39.040 --> 38:43.520] You pressurize that thing and you've just kind of got a huge underground city.

[38:43.520 --> 38:44.520] Right.

[38:44.520 --> 38:48.460] And also, it's not like what people think that you think, oh, you get a little crack

[38:48.460 --> 38:53.920] in this blocked area so that the air maintains pressure.

[38:53.920 --> 38:57.320] You get a little hole or a crack and the air is going to go rushing out and people are

[38:57.320 --> 38:58.320] going to get sucked out.

[38:58.320 --> 39:03.840] No, you would actually have hours and hours and hours or maybe even days before this would

[39:03.840 --> 39:05.640] reach a critical threshold.

[39:05.640 --> 39:11.600] You have time to fix these, any problems, any cracks or holes that might appear.

[39:11.600 --> 39:12.600] You have time.

[39:12.600 --> 39:13.600] It's not like red alert.

[39:13.600 --> 39:16.200] It's more like a soft yellow alert.
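
Editorial note: the claim that a breach would take hours or days to matter can be sanity-checked with a rough leak-down estimate. The sketch below assumes air as an ideal gas held at a constant 17 degrees Celsius, venting to vacuum through a single hole with choked (sonic) flow, which makes the pressure decay exponentially. The sealed section size (500 m diameter, 1 km long), the hole areas and the discharge coefficient of 1.0 are hypothetical values chosen for illustration, not figures from the episode or from NASA.

```python
import math


def leak_time_constant(volume_m3, hole_area_m2, temp_k=290.15,
                       gamma=1.4, r_specific=287.0, discharge_coeff=1.0):
    """E-folding time in seconds for pressure in an isothermal volume
    venting to vacuum through a choked orifice (ideal gas assumed)."""
    # Choked-flow factor for an ideal gas: (2/(g+1))^((g+1)/(2(g-1))).
    choke = (2.0 / (gamma + 1.0)) ** ((gamma + 1.0) / (2.0 * (gamma - 1.0)))
    # Effective volumetric outflow speed through the hole, in m/s.
    outflow_speed = discharge_coeff * choke * math.sqrt(gamma * r_specific * temp_k)
    return volume_m3 / (hole_area_m2 * outflow_speed)


def time_to_pressure_fraction(fraction, volume_m3, hole_area_m2):
    """Seconds until pressure falls to `fraction` of its starting value."""
    return leak_time_constant(volume_m3, hole_area_m2) * math.log(1.0 / fraction)


# Hypothetical sealed section: cylinder 500 m in diameter and 1 km long.
tube_volume = math.pi * 250.0 ** 2 * 1000.0

for hole_area in (1e-4, 1e-2, 1.0):  # 1 cm^2, 100 cm^2, 1 m^2
    hours = time_to_pressure_fraction(0.9, tube_volume, hole_area) / 3600.0
    print(f"hole of {hole_area:g} m^2: about {hours:,.0f} hours to lose 10% of pressure")
```

Under these assumptions even a one-square-metre hole takes on the order of a day to bleed off 10% of the pressure, and smaller cracks take proportionally longer, which is consistent with the "soft yellow alert" description. A smaller sealed volume would shorten these times in direct proportion.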

[39:16.200 --> 39:17.960] I read a good science fiction story.

[39:17.960 --> 39:21.800] I forget which one, but there was this was aboard a ship, but the principle could apply.

[39:21.800 --> 39:27.080] You basically have these floating balloons that are filled with a sticky substance.

[39:27.080 --> 39:28.640] I have listened to that story as well.

[39:28.640 --> 39:29.640] Yeah.

[39:29.640 --> 39:30.640] Yeah.

[39:30.640 --> 39:33.480] If there's a leak, the balloons would float to the leak again.

[39:33.480 --> 39:38.040] And when they get into the crack, they break and automatically fill it with the sticky

[39:38.040 --> 39:40.040] substance that seals the crack.

[39:40.040 --> 39:44.680] So you could have this just these balloons floating around in the lava tube that would

[39:44.680 --> 39:50.560] just automatically passively seal at least, you know, small size cracks, if not bigger

[39:50.560 --> 39:51.560] ones.

[39:51.560 --> 39:52.560] That's pretty cool.

[39:52.560 --> 39:56.440] But you'd probably want to have some airtight habitats inside there as a backup.

[39:56.440 --> 40:01.560] I would want two layers, but absolutely, you know, maybe you're living in the habitats,

[40:01.560 --> 40:07.760] but you can have a farm that is just in the, you know, the regolith just in the lava tube

[40:07.760 --> 40:10.220] with, you know, with artificial lighting.

[40:10.220 --> 40:15.280] You could put a frigging nuclear, you know, power station down there.

[40:15.280 --> 40:16.280] Yeah, baby.

[40:16.280 --> 40:17.280] Yeah.

[40:17.280 --> 40:21.960] I think if we're going to build any permanent or long term large, you know, bases or settlements

[40:21.960 --> 40:24.200] on the moon, they're going to be in lava tubes.

[40:24.200 --> 40:25.200] I mean, it just seems...

[40:25.200 --> 40:26.200] Definitely.

[40:26.200 --> 40:27.200] It's so provocative, right?

[40:27.200 --> 40:29.760] Though, it just, it's like the story's writing itself.

[40:29.760 --> 40:33.280] I'm just envisioning all the cool things that we could be doing in there.

[40:33.280 --> 40:37.960] You know, imagine you could go to the moon, eventually, you know, there might be a living

[40:37.960 --> 40:42.400] space, you know, where people can go to the moon and vacation there for a week.

[40:42.400 --> 40:43.600] You know what I mean?

[40:43.600 --> 40:44.600] That's incredible.

[40:44.600 --> 40:50.080] Well, I know Cara is probably asking herself right now, why would we even go to the moon?

[40:50.080 --> 40:51.720] Like, why have...why send people there?

[40:51.720 --> 40:54.320] Why not just send robots there to do whatever we want?

[40:54.320 --> 40:58.360] I mean, there are definitely, you know, you could make an argument for we should do whatever

[40:58.360 --> 41:03.840] we need to do on the moon with robots, but there are lots of things to do on the moon

[41:03.840 --> 41:10.000] like research, industry, mining.

[41:10.000 --> 41:15.840] You know, if we end up using He-3 for our fusion reactors, then the best source of that is

[41:15.840 --> 41:19.900] the lunar surface and as a platform for deep space.

[41:19.900 --> 41:23.720] So if we want to get to the rest of, you know, the rest of the solar system, the moon, we're

[41:23.720 --> 41:25.120] going to go through the moon.

[41:25.120 --> 41:26.120] Yeah.

[41:26.120 --> 41:28.560] My question isn't why, it's should we?

[41:28.560 --> 41:30.120] It's not why would we, it's should we?

[41:30.120 --> 41:31.880] Well, if there are reasons to go, then...

[41:31.880 --> 41:33.960] Are those reasons good enough to go?

[41:33.960 --> 41:35.960] Yeah, which they are.

[41:35.960 --> 41:38.000] I'll agree with some of them, but not all of them.

[41:38.000 --> 41:42.540] But I think that also, you know, it's good to have humanity not on one planet in case

[41:42.540 --> 41:43.540] something happens.

[41:43.540 --> 41:49.000] Yeah, there's always that, which reminds me, of course, of an image, a still image that

[41:49.000 --> 41:53.600] is kind of unforgettable where you see an astronaut on the moon looking at the earth

[41:53.600 --> 42:01.780] and you see the earth has just been basically run through by like a mini planet.

[42:01.780 --> 42:06.080] So the earth has basically been utterly destroyed and this guy's looking at it happen, like,

[42:06.080 --> 42:07.080] whoops.

[42:07.080 --> 42:08.080] Whoa.

[42:08.080 --> 42:09.080] I wasn't there.

[42:09.080 --> 42:12.420] Well, everyone, we're going to take a quick break from our show to talk about our sponsor

[42:12.420 --> 42:13.920] this week, BetterHelp.

[42:13.920 --> 42:16.240] And let's not sugarcoat it, everyone.

[42:16.240 --> 42:18.720] It's a tough time out there and a lot of people are struggling.

[42:18.720 --> 42:25.520] And if you are struggling and you have never decided to take the plunge and talk to somebody,

[42:25.520 --> 42:27.320] maybe now is the time.

[42:27.320 --> 42:30.740] It's so important to prioritize our mental health.

[42:30.740 --> 42:35.240] If we put that first, everything else really can follow and BetterHelp can help you with

[42:35.240 --> 42:36.240] that.

[42:36.240 --> 42:38.640] You know, I myself work as a therapist and I also go to therapy.

[42:38.640 --> 42:43.440] And I can tell you that online therapy has been really, really beneficial for a lot of

[42:43.440 --> 42:46.920] folks where it's, you know, it fits better within your day.

[42:46.920 --> 42:50.880] You have limitations to be able to get in the car and drive somewhere.

[42:50.880 --> 42:54.560] Being able to talk to somebody online can be really a lifesaver.

[42:54.560 --> 42:57.160] And it's the model that I'm now using all the time.

[42:57.160 --> 43:02.560] Yeah, Cara, you could do it on your phone or, you know, your iPad if you want to, any

[43:02.560 --> 43:04.480] way that you connect with the video.

[43:04.480 --> 43:08.520] You can even live chat with therapy sessions so you don't have to see anyone on camera

[43:08.520 --> 43:09.520] if you don't want to.

[43:09.520 --> 43:14.440] And the other great thing is you could be matched with a therapist in under 48 hours.

[43:14.440 --> 43:20.040] Our listeners get 10% off their first month at BetterHelp.com slash SGU.

[43:20.040 --> 43:23.640] That's Better H-E-L-P dot com slash SGU.

[43:23.640 --> 43:26.400] All right, guys, let's get back to the show.

Video Games and Well-being (43:27)

[43:26.400 --> 43:33.160] Okay, Cara, tell us about video games and well-being, this endless debate that we seem

[43:33.160 --> 43:34.160] to be having.

[43:34.160 --> 43:35.160] Yeah.

[43:35.160 --> 43:38.120] So new study, really interesting, very, very large study.

[43:38.120 --> 43:40.960] So I'm just going to ask you guys point blank.

[43:40.960 --> 43:42.080] What do you think?

[43:42.080 --> 43:46.080] Does playing video games have a detrimental impact on well-being?

[43:46.080 --> 43:47.080] No.

[43:47.080 --> 43:52.320] I would say generally no, unless you abuse it, like anything else.

[43:52.320 --> 43:53.320] Yeah.

[43:53.320 --> 43:54.320] Okay.

[43:54.320 --> 43:55.320] But not especially.

[43:55.320 --> 43:56.320] What do you think?

[43:56.320 --> 43:58.760] Do video games have a positive impact on well-being?

[43:58.760 --> 43:59.760] They can.

[43:59.760 --> 44:00.760] I think so.

[44:00.760 --> 44:01.760] Yeah, that's exactly what I would say.

[44:01.760 --> 44:02.760] I would guess yes.

[44:02.760 --> 44:03.760] It can.

[44:03.760 --> 44:05.960] Probably not generically, but I think it can in some contexts.

[44:05.960 --> 44:06.960] Right.

[44:06.960 --> 44:13.160] So this study looked at probably it was more on the generic side than on the specific context

[44:13.160 --> 44:16.400] side because it was a really, really large study.

[44:16.400 --> 44:22.800] Ultimately, they looked at 38,935 players' data.

[44:22.800 --> 44:25.760] And it started way bigger than that, like hundreds of thousands.

[44:25.760 --> 44:29.080] But of course, with attrition and people not getting back and dropping out of the study,

[44:29.080 --> 44:33.840] they ended with 38,935 solid participants in this study.

[44:33.840 --> 44:36.160] So that's a big, big data set.

[44:36.160 --> 44:41.900] Their basic takeaway was there's pretty much no causal connection between gameplay and

[44:41.900 --> 44:42.900] well-being at all.

[44:42.900 --> 44:43.900] It doesn't improve.

[44:43.900 --> 44:45.640] It's not detrimental.

[44:45.640 --> 44:49.200] It has no effect at all on well-being for the most part.

[44:49.200 --> 44:51.200] Of course, we want to break that down a little bit.

[44:51.200 --> 44:52.200] Yeah, yeah, yeah.

[44:52.200 --> 44:54.880] So they looked at something else, which was kind of interesting, which we'll get to in

[44:54.880 --> 45:00.400] a second, which is the motivation for playing and how that motivation might be a sort of

[45:00.400 --> 45:02.760] underlying variable.

[45:02.760 --> 45:05.000] But first, let's talk about what they actually did.

[45:05.000 --> 45:06.740] So it was pretty cool.

[45:06.740 --> 45:13.800] These researchers were able to partner with, I think it was seven different gaming publishers,

[45:13.800 --> 45:15.360] gaming companies.

[45:15.360 --> 45:23.880] And in doing so, they were able to get direct, objective data about frequency of play because

[45:23.880 --> 45:28.960] they found that most of the, you know, obviously the reason for this study is exactly what

[45:28.960 --> 45:32.260] you asked at the beginning, Steve, like this endless debate.

[45:32.260 --> 45:37.620] And what we've seen is that there's a fair amount of public policy, like legislation,

[45:37.620 --> 45:44.240] and not just here in the US, but across the globe, that directly concerns the fear that

[45:44.240 --> 45:47.000] playing video games is detrimental to health.

[45:47.000 --> 45:48.000] But it's not evidence-based.

[45:48.000 --> 45:54.480] Like, there's, the researchers cited that in China, there is like a limit to the number

[45:54.480 --> 46:00.240] of hours people are allowed to play video games a day for fear that if somebody plays

[46:00.240 --> 46:02.540] longer than that, it can be detrimental.

[46:02.540 --> 46:06.200] And they were like, okay, if we're making like policy decisions based on this, we should

[46:06.200 --> 46:12.320] probably get to the bottom of whether or not this is even true because the data is complex.

[46:12.320 --> 46:16.600] So they were like, a lot of the data, when you look at previous studies, is subjective

[46:16.600 --> 46:17.600] in nature.

[46:17.600 --> 46:19.360] I should say it's self-report.

[46:19.360 --> 46:23.280] So not only are individuals saying, this is how I feel, but they're also saying, oh, I

[46:23.280 --> 46:27.200] kept a journal, and yeah, look, I played seven hours yesterday, or oh, I play an average

[46:27.200 --> 46:28.200] of two hours a week.

[46:28.200 --> 46:30.600] And it's like, okay, we just got to take your word for it.

[46:30.600 --> 46:36.480] So what they decided to do is figure out how to partner with these different companies.

[46:36.480 --> 46:42.320] So they partnered with Nintendo and EA and CCP Games and Microsoft and Square Enix and

[46:42.320 --> 46:43.320] Sony.

[46:43.320 --> 46:46.820] And so they looked at a handful of games.

[46:46.820 --> 46:50.240] They were Animal Crossing: New Horizons,

[46:50.240 --> 46:56.480] that was Nintendo; Apex Legends, which was EA; EVE Online, which is CCP Games; Forza

[46:56.480 --> 47:01.780] Horizon 4, which is a Microsoft game; Gran Turismo Sport, which is Sony; Outriders,

[47:01.780 --> 47:07.620] which is Square Enix; and The Crew 2, which is that last one, Ubisoft.

[47:07.620 --> 47:12.700] And they had players from, I think they wanted to make sure that they were English-speaking

[47:12.700 --> 47:14.700] so that they could complete all of the surveys.

[47:14.700 --> 47:18.400] But they had players from all over the world, English-speaking world, Australia, Canada,

[47:18.400 --> 47:22.760] India, Ireland, New Zealand, South Africa, UK, US.

[47:22.760 --> 47:27.380] And they basically said, hey, if you play this game regularly, you can participate in

[47:27.380 --> 47:28.820] this research study.

[47:28.820 --> 47:35.240] They defined regularly as you've played, let's see, in the past two weeks to two months.

[47:35.240 --> 47:40.280] And then they were able to objectively record based on these players who participated the

[47:40.280 --> 47:43.400] hours that they logged on these games.

[47:43.400 --> 47:48.360] And then they were cross-referencing that or they were actually doing their statistical

[47:48.360 --> 47:58.000] analysis comparing those numbers to the different self-report surveys of the game.

[47:58.000 --> 48:02.140] And they used multiple different self-report surveys.

[48:02.140 --> 48:03.640] So let me find them here.

[48:03.640 --> 48:08.960] So they use something called the SPANE, which is the Scale of Positive and Negative Experience.

[48:08.960 --> 48:13.520] It's a Likert scale, one to seven, where people basically just say how frequently they felt

[48:13.520 --> 48:16.180] a certain way in the past two weeks.

[48:16.180 --> 48:21.140] So like, how often did you feel this positive experience or this negative feeling?

[48:21.140 --> 48:25.460] So from very rarely to always or never to always.

[48:25.460 --> 48:30.960] They also used the Cantril Self-Anchoring Scale, and that asks participants to imagine

[48:30.960 --> 48:33.780] a ladder with steps from zero to 10.

[48:33.780 --> 48:35.880] The top of the ladder is the best possible life for you.

[48:35.880 --> 48:37.800] The bottom is the worst possible life.

[48:37.800 --> 48:41.800] Which step were you on in the last two weeks?

[48:41.800 --> 48:47.360] And then they did some very, very complicated statistical analysis where they basically

[48:47.360 --> 48:53.240] were comparing how often people were playing, like the time that they spent playing, and

[48:53.240 --> 48:57.160] also the changes in the time, like did they play more or less over the time that they

[48:57.160 --> 48:58.160] measured them?

[48:58.160 --> 49:04.400] Because I think they had three different measurement points, the sort of before, during, and after.

[49:04.400 --> 49:05.860] And they were slightly different.

[49:05.860 --> 49:10.640] This is one of the problems with doing this kind of study where they're using the publishers

[49:10.640 --> 49:15.240] to help provide the data because, of course, the collections were slightly different between

[49:15.240 --> 49:16.240] them.

[49:16.240 --> 49:20.200] But they were able to sort of normalize everything and look at these changes over time.

[49:20.200 --> 49:28.000] And that's how they were able to statistically try to develop a measure of causality.

[49:28.000 --> 49:29.000] So it's not that they were-

[49:29.000 --> 49:30.000] But no controls though?

[49:30.000 --> 49:33.200] They didn't study anyone with, well, I mean, how would they do it, like people that aren't

[49:33.200 --> 49:34.200] playing games?

[49:34.200 --> 49:37.840] I don't think they had a control group at all of non-game players.

[49:37.840 --> 49:44.040] But I don't think it would be that hard to just look at the norms data tables of responses

[49:44.040 --> 49:49.600] to the ladder and the SPANE, the Cantril Self-Anchoring Scale and the SPANE.

[49:49.600 --> 49:50.880] They're all going to have norms tables.