Revision as of 12:40, 28 October 2022

| This transcript is not finished. Please help us finish it! Add a Transcribing template to the top of this transcript before you start so that we don't duplicate your efforts. |


| SGU Episode 897 |

|---|

| September 17th 2022 |

|

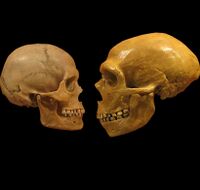

By comparison, Neanderthals needed more brain to control their larger bodies. |

| Skeptical Rogues |

| S: Steven Novella |

B: Bob Novella |

C: Cara Santa Maria |

J: Jay Novella |

E: Evan Bernstein |

| Quote of the Week |

If I want to know how we learn and remember and represent the world, I will go to psychology and neuroscience. |

Patricia Churchland, Canadian-American analytic philosopher |


Introduction, Black Mirror reflections

Voice-over: You're listening to the Skeptics' Guide to the Universe, your escape to reality.

[00:12.600 --> 00:17.480] Today is Wednesday, September 14th, 2022, and this is your host, Steven Novella.

[00:17.480 --> 00:19.280] Joining me this week are Bob Novella.

[00:19.280 --> 00:20.280] Hey, everybody.

[00:20.280 --> 00:21.280] Cara Santa Maria.

[00:21.280 --> 00:22.280] Howdy.

[00:22.280 --> 00:23.280] Jay Novella.

[00:23.280 --> 00:24.280] Hey, guys.

[00:24.280 --> 00:25.280] And Evan Bernstein.

[00:25.280 --> 00:26.280] Good evening, everyone.

[00:26.280 --> 00:30.800] You know what, guys, I'm rewatching Black Mirror because I haven't seen most of those

[00:30.800 --> 00:32.600] episodes since they originally aired.

[00:32.600 --> 00:33.600] Really?

[00:33.600 --> 00:36.080] You mean you started at season one, episode one all over again?

[00:36.080 --> 00:37.080] Yeah.

[00:37.080 --> 00:38.160] I'm just going through in order.

[00:38.160 --> 00:43.840] And I forgot most of the details of the episodes, you know?

[00:43.840 --> 00:48.640] I sort of remember what the episode was about, but don't remember the details.

[00:48.640 --> 00:50.920] So it's almost like watching it again.

[00:50.920 --> 00:51.920] So good.

[00:51.920 --> 00:54.120] It is a brilliant TV series.

[00:54.120 --> 00:55.720] So you just watched the first season?

[00:55.720 --> 00:56.720] No.

[00:56.720 --> 00:57.720] I think I'm in the third season now.

[00:57.720 --> 01:00.800] I mean, there's not that many episodes, like four episodes a season, so I'm burning my

[01:00.800 --> 01:01.800] way through.

[01:01.800 --> 01:02.800] Yeah.

[01:02.800 --> 01:03.800] There's some good stuff in there, man.

[01:03.800 --> 01:04.800] Yeah.

[01:04.800 --> 01:05.800] Sure.

[01:05.800 --> 01:06.800] Very, very good.

[01:06.800 --> 01:07.800] Very good futurism, actually.

[01:07.800 --> 01:08.800] Quite good.

[01:08.800 --> 01:09.800] Even though they're mostly like cautionary tales.

[01:09.800 --> 01:10.800] Oh.

Cheating at Tournament Chess (1:09)

[01:10.800 --> 01:11.800] So speaking of cautionary tales.

[01:11.800 --> 01:12.800] Yeah.

[01:12.800 --> 01:16.040] If you're going to enter a chess tournament, okay?

[01:16.040 --> 01:17.040] Don't cheat.

[01:17.040 --> 01:18.040] Now, what the heck?

[01:18.040 --> 01:19.040] Where did that come from?

[01:19.040 --> 01:21.760] Why are you bringing that up, Evan?

[01:21.760 --> 01:24.320] Because of this particular news item I ran across today.

[01:24.320 --> 01:30.240] Of course, I'm a gamer, I've been a chess player, I've been in tournaments.

[01:30.240 --> 01:32.280] So chess is something that's near and dear to me.

[01:32.280 --> 01:37.520] So when chess pops up in the news, I do pause and I read about it.

[01:37.520 --> 01:42.240] And in this particular case, this headline, it's the New York Post, so take that for

[01:42.240 --> 01:47.920] what it is, but it reads, "Huge chess world upset of Grandmaster sparks wild claims of

[01:47.920 --> 01:52.160] cheating with vibrating sex toy."

[01:52.160 --> 01:53.160] What a title.

[01:53.160 --> 01:54.160] I love it.

[01:54.160 --> 01:58.240] So if that's not clickbait, I don't know what is.

[01:58.240 --> 01:59.680] But here's the thing.

[01:59.680 --> 02:04.740] Magnus Carlsen is currently the world chess champion. He's like a five-time world

[02:04.740 --> 02:05.740] chess champion.

[02:05.740 --> 02:12.400] He's on a long streak of wins, I believe he had 59 wins coming into a particular tournament

[02:12.400 --> 02:16.760] in which he was matched up in the first round against the lowest rated player, which obviously

[02:16.760 --> 02:17.760] makes sense.

[02:17.760 --> 02:21.160] Highest versus lowest and you meet in the middle and that's usually how the first round

[02:21.160 --> 02:22.600] works.

[02:22.600 --> 02:23.600] And he was upset.

[02:23.600 --> 02:24.600] He was beaten.

[02:24.600 --> 02:32.520] He was beaten by somebody who's effectively relatively new to the professional chess circuit

[02:32.520 --> 02:35.240] and tournaments and other things.

[02:35.240 --> 02:40.600] And it's causing obviously a controversy, a big one in the world of chess.

[02:40.600 --> 02:47.040] You see, because the person who beat him, his name is Hans Niemann, he admitted to cheating

[02:47.040 --> 02:49.240] in online tournaments when he was younger.

[02:49.240 --> 02:51.640] Oh boy, not good for him.

[02:51.640 --> 02:52.800] Yeah.

[02:52.800 --> 02:59.540] And so he has this cloud of accusations hovering over him that there is really no plausible

[02:59.540 --> 03:04.200] way in the world of chess that the lowest rated player can beat the highest rated who

[03:04.200 --> 03:09.800] happens to be the current grandmaster, world grandmaster, five-time world champion in the

[03:09.800 --> 03:12.120] first round of a tournament like this.

[03:12.120 --> 03:18.060] Apparently it's so statistically nearly impossible that it likely would not have happened unless

[03:18.060 --> 03:21.600] there was some kind of cheating and you add on top of that the fact that this person has

[03:21.600 --> 03:26.840] admitted to cheating before.
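One rough way to put a number on "statistically nearly impossible" is the standard Elo expected-score formula, which chess ratings are built on. The sketch below is an illustration only and is not something discussed in the episode: the function name and the rating gaps are hypothetical, and the expected score counts a draw as half a point, so it is not a pure win probability.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score for player A against player B (win = 1, draw = 0.5, loss = 0)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Hypothetical rating gaps, chosen only for illustration (not the players' real ratings).
for gap in (100, 200, 400):
    underdog = elo_expected_score(2700 - gap, 2700)
    print(f"gap of {gap} points: underdog expected score {underdog:.3f}")
```

Under this model even a 400-point underdog is expected to score about 1 point in 11 games, which is unlikely in any single game but far from impossible.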

[03:26.840 --> 03:33.620] He's being questioned by certainly lots of professional organizations about it, this

[03:33.620 --> 03:35.160] kid Niemann.

[03:35.160 --> 03:41.760] He has also been banned from chess.com, the world's number one chess website because of

[03:41.760 --> 03:42.760] the accusations.

[03:42.760 --> 03:43.760] I'm sorry, is it chess.org or chess.com?

[03:43.760 --> 03:44.760] I thought it was chess.com.

[03:44.760 --> 03:45.760] Evan.

[03:45.760 --> 03:50.640] And he's been banned from them because of these cheating accusations, yep.

[03:50.640 --> 03:54.760] The part that I don't get is you can make the accusation.

[03:54.760 --> 04:00.760] Well, first of all, I'm very triggery about someone like, I didn't win so therefore it

[04:00.760 --> 04:04.240] must be cheating, right, because we're seeing that.

[04:04.240 --> 04:05.240] Yes.

[04:05.240 --> 04:09.040] Number two, they either caught the guy or they didn't catch the guy.

[04:09.040 --> 04:10.040] You can't say afterwards.

[04:10.040 --> 04:11.040] They didn't catch him.

[04:11.040 --> 04:12.720] They did not catch him.

[04:12.720 --> 04:14.240] Let's say he had a device on him.

[04:14.240 --> 04:16.000] Let's say he was cheating, right?

[04:16.000 --> 04:17.000] Yes.

[04:17.000 --> 04:19.040] They don't catch him during the competition.

[04:19.040 --> 04:25.040] He gets up, he walks out, he gets rid of anything that could incriminate him.

[04:25.040 --> 04:28.400] So now they're making an accusation that is virtually unprovable.

[04:28.400 --> 04:34.440] So what I read, first of all, Carlsen, the champion who lost, did not directly accuse

[04:34.440 --> 04:37.040] him of cheating, but he implied it.

[04:37.040 --> 04:42.520] He quote unquote all but accused him, but he didn't straight up say he cheated.

[04:42.520 --> 04:46.200] And you're right, Jay, from what I'm reading, we're not experts, but this is an interesting

[04:46.200 --> 04:51.480] story is that it's all based on plausibility and game analysis.

[04:51.480 --> 04:54.720] It's based upon like what's more likely to be true.

[04:54.720 --> 04:57.160] There's no direct evidence that he cheated.

[04:57.160 --> 04:58.160] Yeah.

[04:58.160 --> 05:02.720] Speaking of game analysis, though, I just read that both, if you look at gameplay, both

[05:02.720 --> 05:08.000] sides were making mistakes and the author was claiming that, you know, something that

[05:08.000 --> 05:13.080] would make you think that maybe he really wasn't cheating if he was also making mistakes,

[05:13.080 --> 05:17.440] which isn't necessarily true because you could just cheat not for every move, but for just

[05:17.440 --> 05:20.560] some of the critical moves, you know, so you could still make mistakes.

[05:20.560 --> 05:21.560] So yeah.

[05:21.560 --> 05:27.080] So the initial analysis was like when people were watching the game live, like if you were

[05:27.080 --> 05:31.800] listening to the commentary from what I'm reading again, it said that Carlsen kind of

[05:31.800 --> 05:32.800] underestimated.

[05:32.800 --> 05:35.360] He was like, this is the first round, this is a low strength player.

[05:35.360 --> 05:41.520] He kind of rushed and that he messed up, like he did not play well early in the game, but

[05:41.520 --> 05:45.080] that he should have still been able to play him to a draw.

[05:45.080 --> 05:50.760] But then he made a bad move late in the game that Niemann exploited and won.

[05:50.760 --> 05:55.760] So it just it looked like he choked because he underestimated based on what you just said,

[05:55.760 --> 05:56.760] man.

[05:56.760 --> 06:02.040] However, once Carlsen brought up the possibility that the guy cheated and people analyzed

[06:02.040 --> 06:09.720] the game in detail, some people are saying that Niemann made a clutch, brilliant move

[06:09.720 --> 06:16.680] really quickly and that that might imply that, you know, he that he cheated, that he was,

[06:16.680 --> 06:18.480] you know, that there was some sort of guidance.

[06:18.480 --> 06:19.480] Yeah.

[06:19.480 --> 06:20.480] But of course, we don't.

[06:20.480 --> 06:23.840] This is all, you know, speculation, speculation and probability.

[06:23.840 --> 06:25.600] It's possible that it was just an upset.

[06:25.600 --> 06:29.280] The thing is, unusual outcomes are going to occur from time to time.

[06:29.280 --> 06:33.100] And when they do, you can point to that's an anomaly and therefore there must be something

[06:33.100 --> 06:34.100] going on.

[06:34.100 --> 06:36.540] But anomalies should happen pretty regularly.

[06:36.540 --> 06:37.920] And there are upsets in chess.

[06:37.920 --> 06:38.920] It does happen.

[06:38.920 --> 06:39.920] You know.

[06:39.920 --> 06:40.920] Oh, in all sports.

[06:40.920 --> 06:41.920] Sure.

[06:41.920 --> 06:42.920] Sure.

[06:42.920 --> 06:44.880] So it's not enough to say, oh, this guy should not have won.

[06:44.880 --> 06:49.920] They would need to show evidence that he actually cheated, although it

[06:49.920 --> 06:57.800] is interesting, too, this idea that we can, quote unquote, prove cheating to a high degree of

[06:57.800 --> 07:00.780] probability by analyzing the game.

[07:00.780 --> 07:03.960] So let me give you an example from a game if you guys remember this.

[07:03.960 --> 07:07.140] But I can't remember the specific video game, which a lot of our listeners know.

[07:07.140 --> 07:10.720] But somebody, you know, how they do like a you try to run through the game as fast as

[07:10.720 --> 07:11.720] possible.

[07:11.720 --> 07:12.720] Yes.

[07:12.720 --> 07:13.720] I've seen some.

[07:13.720 --> 07:17.320] Somebody did that in one of the games on the portal, whatever it was, one of the some

[07:17.320 --> 07:21.400] game where you could play through the beginning to end and broke all records.

[07:21.400 --> 07:23.720] And I think it was from Minecraft.

[07:23.720 --> 07:28.120] I think he did a Minecraft run through like faster than anybody else.

[07:28.120 --> 07:34.820] And somebody calculated the odds of him getting the drops that he got in the game.

[07:34.820 --> 07:36.320] And it was like astronomical.

[07:36.320 --> 07:39.000] It just defied all probability.

[07:39.000 --> 07:41.120] So he said he must have been hacking somehow.

[07:41.120 --> 07:47.520] He was cheating that it wasn't just based on drops, not speed, but but drops.

[07:47.520 --> 07:49.520] And when you say drops for people who aren't familiar with Minecraft.

[07:49.520 --> 07:54.200] So in other words, like you kill a bad guy and he drops treasure and that that drop is

[07:54.200 --> 07:58.480] random and there's a very hard probability.

[07:58.480 --> 07:59.480] It's coded into the game.

[07:59.480 --> 08:03.840] Like there's a one percent chance that you'll get this drop, you know, in a perfect thing.

[08:03.840 --> 08:04.840] Yeah.

[08:04.840 --> 08:10.880] So if you calculate the odds of him getting the favorable drops that he got, it defies

[08:10.880 --> 08:11.880] all.

[08:11.880 --> 08:12.880] It's like winning a lottery.

[08:12.880 --> 08:15.760] You know, it was like, but somebody always wins the lottery.

[08:15.760 --> 08:17.520] Well, that's that's kind of the point.

[08:17.520 --> 08:18.520] It's different.

[08:18.520 --> 08:19.520] No, but it's different.

[08:19.520 --> 08:20.520] It's numbers are different.

[08:20.520 --> 08:21.520] Yeah.

[08:21.520 --> 08:23.480] You have 10 million people playing that game.

[08:23.480 --> 08:24.480] Yeah.

[08:24.480 --> 08:29.640] But but so many but so many attempts at it if it's a large enough number, shouldn't there

[08:29.640 --> 08:30.640] be?

[08:30.640 --> 08:31.720] But it wasn't even close.

[08:31.720 --> 08:33.640] Not that many people do this right.

[08:33.640 --> 08:38.040] Do this like fast running, you know, run through of Minecraft.

[08:38.040 --> 08:42.320] The probability that somebody doing this, let's say there are thousands of people doing

[08:42.320 --> 08:43.320] it, whatever.

[08:43.320 --> 08:48.200] It still is like, you know, trillions to one against, like orders of magnitude off. Trillions

[08:48.200 --> 08:49.400] is a tough number to overcome.

[08:49.400 --> 08:50.400] It's just yeah.

[08:50.400 --> 08:51.400] Yeah.

[08:51.400 --> 08:54.840] It just should not have happened by, right, by chance. That doesn't mean it's impossible.

[08:54.840 --> 08:59.120] We're just saying probabilistically it's a huge red flag.
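The "somebody always wins the lottery" exchange above can be made concrete with a short calculation. This is a minimal sketch under stated assumptions, not the analysis that was actually done on the speedrun: the function names, the 100 attempts and 10 successes, and the count of one million runs are all hypothetical; only the one percent drop chance echoes the example given in the discussion.

```python
import math
from math import comb


def binomial_tail(n: int, k: int, p: float) -> float:
    """Probability of at least k successes in n independent trials, each with chance p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


def chance_anyone_gets_it(p_single: float, attempts: int) -> float:
    """Probability that at least one of `attempts` independent runs is that lucky.

    Uses log1p/expm1 so that very small probabilities are not rounded to zero.
    """
    if p_single >= 1.0:
        return 1.0
    return -math.expm1(attempts * math.log1p(-p_single))


# Hypothetical numbers: a 1% drop chance, 100 tries, and at least 10 favorable drops.
p_run = binomial_tail(n=100, k=10, p=0.01)
# Hypothetical population: one million runs by all players combined.
p_any = chance_anyone_gets_it(p_run, attempts=1_000_000)
print(f"one run: {p_run:.3e}, at least one of a million runs: {p_any:.3e}")
```

The second function is the lottery point: a rare outcome becomes likely only when the number of attempts is comparable to one over its probability, which is why odds of trillions to one stay a red flag even with thousands of players.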

[08:59.120 --> 09:02.660] It's I think a little bit harder to say that with chess because it's not hard probabilities

[09:02.660 --> 09:03.660] that you can calculate.

[09:03.660 --> 09:07.560] It's just like maybe the guy choked and maybe the other guy got lucky or he made a he made

[09:07.560 --> 09:08.600] a move.

[09:08.600 --> 09:11.040] In retrospect, it was a brilliant move, but he could have just got lucky.

[09:11.040 --> 09:13.000] I mean, you know, could have just been.

[09:13.000 --> 09:14.000] Yeah.

[09:14.000 --> 09:15.000] Yeah.

[09:15.000 --> 09:18.860] The big thing for me, the big thing for me was Steve was when you said that this guy made

[09:18.860 --> 09:20.480] some bad moves.

[09:20.480 --> 09:21.480] He did.

[09:21.480 --> 09:24.860] A bunch of uncharacteristically bad moves.

[09:24.860 --> 09:29.600] And to me, that really kind of sways it back into this guy's corner, I think, because if

[09:29.600 --> 09:35.040] he if the champ still played a brilliant game and the guy still took him out, then that

[09:35.040 --> 09:38.520] would be, you know, it would be different, a little bit different.

[09:38.520 --> 09:39.520] Right.

[09:39.520 --> 09:40.520] Now, in terms of the cheating.

[09:40.520 --> 09:43.520] I mean, you know, this is why you don't cheat, man, because then your reputation's in the

[09:43.520 --> 09:44.520] shitter.

[09:44.520 --> 09:45.520] Yeah.

[09:45.520 --> 09:46.520] That's right.

[09:46.520 --> 09:48.080] Then if you do get lucky, no one's going to believe you.

[09:48.080 --> 09:50.280] But he said and even said, listen, he admitted it.

[09:50.280 --> 09:54.760] I admitted that I cheated once when I was 12 years old and when I was six twelve years

[09:54.760 --> 09:55.760] old.

[09:55.760 --> 10:03.160] And then when he was 16, he's now 19 years old, but he says, oh, I know he's sorry about

[10:03.160 --> 10:04.160] those.

[10:04.160 --> 10:05.160] He's reformed, whatever.

[10:05.160 --> 10:06.880] He cheats about every three years.

[10:06.880 --> 10:11.000] That's what you're saying.

[10:11.000 --> 10:12.520] You can kind of take that for what it's worth.

[10:12.520 --> 10:16.820] I mean, if you were like 30, I would say, OK, it was like he was a child and I was.

[10:16.820 --> 10:18.560] But he's 19.

[10:18.560 --> 10:25.560] It's still 16 to 19 is a huge deal, but it's not so much time that we could say he's out

[10:25.560 --> 10:29.880] of the woods in terms of still right bearing the burden of having a reputation of being

[10:29.880 --> 10:30.880] a cheater.

[10:30.880 --> 10:33.920] But it's interesting like you could make a case any way you want with something like

[10:33.920 --> 10:34.920] this.

[10:34.920 --> 10:35.920] You know, it's all about you.

[10:35.920 --> 10:37.920] You're missing like a part of this, Steve.

[10:37.920 --> 10:41.480] Evan, did I hear you correctly?

[10:41.480 --> 10:44.560] Did you say that they accused him of cheating with a sex toy?

[10:44.560 --> 10:48.160] Well, that's well, yeah, that where does that detail come from?

[10:48.160 --> 10:54.740] I'm not one hundred percent sure where that I think they're saying how could he have possibly

[10:54.740 --> 10:56.880] cheated using a piece of technology?

[10:56.880 --> 10:58.200] And this was one scenario.

[10:58.200 --> 11:03.640] And because it is, you know, because of the nature, the sexual nature of it, it obviously

[11:03.640 --> 11:06.520] gets a lot of attention more so than perhaps other.

[11:06.520 --> 11:12.160] But what's the what sex toy did this guy have that was helping him play chess?

[11:12.160 --> 11:18.040] Well, according to the accusation, it's something, you know, you anally insert and you vibrate

[11:18.040 --> 11:19.040] more.

[11:19.040 --> 11:20.480] And it vibrates and it vibrates.

[11:20.480 --> 11:22.720] Somebody would have had to have been controlling it remotely.

[11:22.720 --> 11:29.320] Well, yeah, you can other other another person or a computer or something else can control

[11:29.320 --> 11:30.320] the vibration.

[11:30.320 --> 11:32.800] Oh, and use it as a means of communication.

[11:32.800 --> 11:36.920] That's it's basically a way to send him information remotely.

[11:36.920 --> 11:37.920] Yeah, right.

[11:37.920 --> 11:38.920] Yeah.

[11:38.920 --> 11:39.920] But that's correct.

[11:39.920 --> 11:40.920] Yeah.

[11:40.920 --> 11:46.000] And that is a known thing in cheating, when somebody places

[11:46.000 --> 11:51.160] a device upon their body and it gives them a shock or a vibrational pulse or something

[11:51.160 --> 11:55.600] that that is very well established that people have done that in the past.

[11:55.600 --> 11:58.940] But do you think the guy was sitting there playing chess and every like five minutes

[11:58.940 --> 12:09.060] he'd be like, oh, well, this is what that sounds awfully like an argument from lack

[12:09.060 --> 12:10.060] of evidence.

[12:10.060 --> 12:11.060] Right.

[12:11.060 --> 12:12.720] It's like there's no evidence that he cheated.

[12:12.720 --> 12:18.280] That means he's a really good cheater because he had something in his butt, you know,

[12:18.280 --> 12:20.960] it's just that's not a very compelling argument.

[12:20.960 --> 12:22.360] But it is technically feasible.

[12:22.360 --> 12:25.160] You can communicate with very little information.

[12:25.160 --> 12:30.080] I think it's like three characters, three or four characters for any given chess move.

[12:30.080 --> 12:32.920] So it wouldn't take so that that can be done.
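To illustrate how little information a chess move carries, here is a small sketch of one possible encoding. It is purely hypothetical and is not a description of anything alleged in the story: it assumes moves are written in four-character coordinate form (from-square then to-square, such as `e2e4`), ignores promotions, and the helper names `encode_move` and `decode_move` are made up for this example.

```python
FILES = "abcdefgh"


def encode_move(move: str) -> list[int]:
    """Turn a coordinate move such as 'e2e4' into four counts, each from 1 to 8."""
    if len(move) != 4:
        raise ValueError("expected four characters, e.g. 'e2e4'")
    from_file, from_rank, to_file, to_rank = move
    return [
        FILES.index(from_file) + 1,  # file letter a-h becomes 1-8
        int(from_rank),
        FILES.index(to_file) + 1,
        int(to_rank),
    ]


def decode_move(counts: list[int]) -> str:
    """Inverse of encode_move: four counts back to a move like 'e2e4'."""
    from_file, from_rank, to_file, to_rank = counts
    return f"{FILES[from_file - 1]}{from_rank}{FILES[to_file - 1]}{to_rank}"


print(encode_move("e2e4"))
print(decode_move(encode_move("g1f3")))
```

With this scheme any move fits in four small numbers, at most 32 pulses in total, which supports the point that the channel would need very little bandwidth.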

[12:32.920 --> 12:33.920] But yeah.

[12:33.920 --> 12:34.920] Yeah, you're right.

[12:34.920 --> 12:35.920] I mean, yeah.

[12:35.920 --> 12:36.920] Well, right.

[12:36.920 --> 12:37.920] Every piece occupies.

[12:37.920 --> 12:38.920] Yeah, that's right.

[12:38.920 --> 12:39.920] Every piece has a designation, a letter number combination.

[12:39.920 --> 12:44.180] So very, very easy, like you said, but let's follow this has those codes.

[12:44.180 --> 12:45.360] Let's follow this.

[12:45.360 --> 12:52.080] So he had to have a co-conspirator here that was like in the audience pressing.

[12:52.080 --> 12:53.160] Was it televised?

[12:53.160 --> 12:58.000] The button like he'd have to have somebody like looking up the information and then radioing

[12:58.000 --> 12:59.000] it to his butt.

[12:59.000 --> 13:00.000] Right.

[13:00.000 --> 13:01.000] Yeah.

[13:01.000 --> 13:02.800] So I have to check and I haven't looked for the video.

[13:02.800 --> 13:09.360] I think it was somehow being televised or was able to be watched in real time.

[13:09.360 --> 13:15.200] And so, yeah, there would be some sort of in the audience would be too too risky.

[13:15.200 --> 13:20.080] Co-conspirator or with them or or a or something that's or a I don't know if there are automated

[13:20.080 --> 13:25.360] programs that read the chessboard or it's somehow programmed in or somebody online is

[13:25.360 --> 13:30.240] putting in the moves and then that is being relayed into whatever device supposedly this

[13:30.240 --> 13:31.920] thing is can transmit.

[13:31.920 --> 13:33.480] You know, I get you're right.

[13:33.480 --> 13:38.480] It's total speculation and unprovable at this point.

[13:38.480 --> 13:45.160] And you know, it does smack of kind of sour grapes overall, if you ask me, you know, queen

[13:45.160 --> 13:54.720] to to two D. Oh, but yes, I mean, Carlsen is denying that he accused him of cheating

[13:54.720 --> 14:00.200] because that I think he knows that is bad for him now, unless you have proof.

[14:00.200 --> 14:01.200] Yeah.

[14:01.200 --> 14:03.280] You don't accuse the other guy of cheating.

[14:03.280 --> 14:05.560] Have them play five more games.

[14:05.560 --> 14:08.240] Let's see how this guy does that.

[14:08.240 --> 14:09.360] That proves nothing.

[14:09.360 --> 14:10.360] It proves nothing.

[14:10.360 --> 14:11.360] Yeah.

[14:11.360 --> 14:12.360] Why?

[14:12.360 --> 14:15.960] Because we know that Carlsen will lose.

[14:15.960 --> 14:16.960] Yeah.

[14:16.960 --> 14:17.960] Right.

[14:17.960 --> 14:20.680] Because we know that the champion is better than the lowest-ranking guy.

[14:20.680 --> 14:23.000] It's just that did he underestimate him and choke?

[14:23.000 --> 14:24.000] Right.

[14:24.000 --> 14:27.080] That's the question. Did the other guy get lucky? You know, that's the question.

[14:27.080 --> 14:31.440] And then nothing will answer that because it's done because the guy's clearly not going

[14:31.440 --> 14:32.920] to underestimate him a second time.

[14:32.920 --> 14:34.440] He's going to bring his freaking A game.

[14:34.440 --> 14:35.440] Yeah.

[14:35.440 --> 14:37.400] I played one Grandmaster in my life.

[14:37.400 --> 14:38.400] Really?

[14:38.400 --> 14:39.400] Yes.

[14:39.400 --> 14:40.400] How badly did he wipe you?

[14:40.400 --> 14:42.960] He destroyed me in like nine moves.

[14:42.960 --> 14:44.440] It was pretty much done.

[14:44.440 --> 14:45.440] Nine's not bad.

[14:45.440 --> 14:46.440] You held out for nine moves.

[14:46.440 --> 14:47.440] It was.

[14:47.440 --> 14:48.440] It was.

[14:48.440 --> 14:49.440] Yeah.

[14:49.440 --> 14:50.440] It was humbling.

[14:50.440 --> 14:51.440] It was just fun.

[14:51.440 --> 14:53.240] It was a friend of mine from high school.

[14:53.240 --> 14:54.240] His father.

[14:54.240 --> 14:55.240] Yeah.

[14:55.240 --> 14:56.240] Was technically a Grandmaster.

[14:56.240 --> 14:57.240] He played for 13.

[14:57.240 --> 14:58.240] I'd just like to be one of those guys.

[14:58.240 --> 15:01.240] You don't like to have the Grandmaster play 20 people at once.

[15:01.240 --> 15:02.240] Yeah.

[15:02.240 --> 15:03.240] Oh, gosh.

[15:03.240 --> 15:04.240] Defeats being one of those people.

[15:04.240 --> 15:08.280] You're taking up one twentieth of his attention and he still wiped the board with you.

[15:08.280 --> 15:09.280] It's humbling.

[15:09.280 --> 15:10.280] Yeah.

[15:10.280 --> 15:11.280] So many moves.

[15:11.280 --> 15:12.280] Expertise.

[15:12.280 --> 15:13.280] Oh, gosh.

[15:13.280 --> 15:14.280] Yes.

[15:14.280 --> 15:15.280] And they're thinking so many moves ahead.

[15:15.280 --> 15:16.280] Yes.

[15:16.280 --> 15:17.280] Yeah.

[15:17.280 --> 15:21.640] The Korovinsky move from 1947 when he played Stratsky in this game and, you know, really

[15:21.640 --> 15:22.640] it comes down to that.

[15:22.640 --> 15:27.080] It's like they analyze, they can memorize all the moves of a particular

[15:27.080 --> 15:31.960] game from a particular tournament from a particular, you know, year 90 that was played 90 years

[15:31.960 --> 15:32.960] ago.

[15:32.960 --> 15:33.960] It's impressive.

[15:33.960 --> 15:37.720] What's interesting from a skeptical point of view is that so many people now are trying

[15:37.720 --> 15:45.320] to infer whether or not he cheated based upon circumstantial and tangential evidence and

[15:45.320 --> 15:49.760] the logical fallacies are flying, you know, the motivated reasoning is flying.

[15:49.760 --> 15:56.900] So it's interesting to watch that from the sidelines having zero stake in the game.

[15:56.900 --> 15:58.480] But it's interesting.

[15:58.480 --> 16:02.280] And if any objective evidence emerges, we'll let you know, because that would be

[16:02.280 --> 16:03.960] then you have the hindsight.

[16:03.960 --> 16:04.960] Right.

[16:04.960 --> 16:07.960] And we'll look at all those statements and inferences with hindsight.

[16:07.960 --> 16:08.960] All right.

[16:08.960 --> 16:10.240] We're going to start off.

Is It Real: Ear Snake (16:08)

[16:10.240 --> 16:11.800] Evan, you sent this around.

[16:11.800 --> 16:12.800] This is a segment.

[16:12.800 --> 16:14.240] I think we've done this once or twice before.

[16:14.240 --> 16:15.240] Is it real?

[16:15.240 --> 16:16.240] Right.

[16:16.240 --> 16:17.240] Is the segment.

[16:17.240 --> 16:18.240] Is it real?

[16:18.240 --> 16:20.720] Have you guys all seen the YouTube video of the ear snake?

[16:20.720 --> 16:21.720] Oh, yeah.

[16:21.720 --> 16:26.000] I you know, I was going to watch it and then I realized I don't want to see whether it's

[16:26.000 --> 16:27.000] fake or not.

[16:27.000 --> 16:28.000] I don't want to see.

[16:28.000 --> 16:29.000] Oh, my God.

[16:29.000 --> 16:31.200] A snake come out of somebody's ear.

[16:31.200 --> 16:32.380] It's a high creep factor.

[16:32.380 --> 16:33.380] It's like it's.

[16:33.380 --> 16:34.380] Oh, yes.

[16:34.380 --> 16:35.380] I want to see it.

[16:35.380 --> 16:37.600] Well, it's right now.

[16:37.600 --> 16:40.680] Snakes is a natural fear, Steve, or the brain.

[16:40.680 --> 16:42.880] We have a disposition towards fear of snakes.

[16:42.880 --> 16:43.880] Oh, yeah.

[16:43.880 --> 16:44.880] I mean, generally.

[16:44.880 --> 16:47.800] So right there, you know, is the cringe.

[16:47.800 --> 16:48.800] You don't see it come out, Jay.

[16:48.800 --> 16:52.760] It's just basically hanging out in the ear, opening and closing its mouth.

[16:52.760 --> 16:55.800] I don't like its head is facing outward.

[16:55.800 --> 16:56.800] Yeah.

[16:56.800 --> 16:57.800] Right.

[16:57.800 --> 17:03.320] So it's like a portion of a longer video, which is, you know, cut

[17:03.320 --> 17:09.560] strategically to only show that there's a head of a snake protruding from a woman's

[17:09.560 --> 17:14.800] ear and someone with gloves and tweezers is kind of poking it and provoking it into making

[17:14.800 --> 17:15.800] these mouth gestures.

[17:15.800 --> 17:16.800] Oh, my God.

[17:16.800 --> 17:17.800] Right.

[17:17.800 --> 17:18.800] And they're so they're so funny.

[17:18.800 --> 17:19.800] How did it turn inside?

[17:19.800 --> 17:20.800] Did it enter from another ear?

[17:20.800 --> 17:21.800] I know.

[17:21.800 --> 17:22.800] Oh, gosh.

[17:22.800 --> 17:23.800] It's crazy.

[17:23.800 --> 17:30.800] So as a neurologist, I could tell you this is 100 percent fake.

[17:30.800 --> 17:33.080] There's just no place for the snake to be.

[17:33.080 --> 17:37.800] You would be dead if there was a body attached to that snake head.

[17:37.800 --> 17:42.840] The only place for it to be is in your brain. You'd be freaking dead if that

[17:42.840 --> 17:43.840] were real.

[17:43.840 --> 17:48.460] If that were coming out of a corpse, OK, then there would be some plausibility there.

[17:48.460 --> 17:54.360] And the other thing is, the doctor is clearly not trying to remove it.

[17:54.360 --> 17:56.960] If you were trying to remove it, you would freaking remove it.

[17:56.960 --> 17:59.200] He's just poking it to make it smoking at it.

[17:59.200 --> 18:00.200] Yeah.

[18:00.200 --> 18:03.640] Like, there's no species of snake that's just a head, right?

[18:03.640 --> 18:09.480] Like, that would be the only plausible thing is if there was just a living head of a snake

[18:09.480 --> 18:10.480] there.

[18:10.480 --> 18:11.480] Right.

[18:11.480 --> 18:12.480] My guess is there's two options.

[18:12.480 --> 18:13.480] Yeah.

[18:13.480 --> 18:16.600] Either CG, which doesn't look CG, but I mean, it's possible.

[18:16.600 --> 18:17.600] No, it doesn't.

[18:17.600 --> 18:18.600] It could be.

[18:18.600 --> 18:19.600] It could be.

[18:19.600 --> 18:20.600] It could be.

[18:20.600 --> 18:21.600] It could be animatronic.

[18:21.600 --> 18:23.800] That's damn good animatronics.

[18:23.800 --> 18:26.240] Do you consider, Bob, that it was a ghost snake?

[18:26.240 --> 18:27.240] You know, it would be ethereal.

[18:27.240 --> 18:28.240] It wouldn't be actually.

[18:28.240 --> 18:29.240] Bigfoot snake.

[18:29.240 --> 18:30.240] It's a bigfoot snake.

[18:30.240 --> 18:31.240] Lots of feet on it.

[18:31.240 --> 18:34.520] It's a psychic, ghost, bigfoot snake from the future.

[18:34.520 --> 18:36.960] No, that's the most plausible explanation I've heard yet.

[18:36.960 --> 18:41.960] Or the most likely explanation, right, we pretty much, most people agree or Snopes agrees

[18:41.960 --> 18:47.880] or whatever, is that it's just a decapitated snake and they will move for a while, even

[18:47.880 --> 18:48.880] after decapitation.

[18:48.880 --> 18:50.440] And that's why he's poking it.

[18:50.440 --> 18:51.440] Yes.

[18:51.440 --> 18:53.760] Yeah, they cut the snake's head off, stuck it in her ear, and they're poking it to make

[18:53.760 --> 18:54.760] it move.

[18:54.760 --> 18:59.000] Okay, so if that's the, ooh, if that's the explanation, I don't know what's worse.

[18:59.000 --> 19:02.160] The false story or the actual explanation for this thing.

[19:02.160 --> 19:05.320] Also then, the question with no context, is this real?

[19:05.320 --> 19:06.320] Yes.

[19:06.320 --> 19:09.440] There is a decapitated snake head in her ear.

[19:09.440 --> 19:10.440] That's real.

[19:10.440 --> 19:16.080] Well, it's not real as presented, like as a living snake nestled in somebody's ear.

[19:16.080 --> 19:19.400] And that is what we're supposed to get from it, because the first thing I said was, why

[19:19.400 --> 19:21.800] is there just a snake head in her ear?

[19:21.800 --> 19:25.440] Because of course, any reasonable person knows that there's nowhere for the body to go, because

[19:25.440 --> 19:28.560] your ear canal, how big is your ear canal?

[19:28.560 --> 19:29.560] It's teeny.

[19:29.560 --> 19:30.560] I don't know.

[19:30.560 --> 19:31.560] Like a centimeter or two?

[19:31.560 --> 19:32.560] Yeah.

[19:32.560 --> 19:33.640] An inch max?

[19:33.640 --> 19:34.760] I don't know.

[19:34.760 --> 19:35.760] And it's narrow.

[19:35.760 --> 19:36.760] Yeah.

[19:36.760 --> 19:37.760] That's what I'm saying.

[19:37.760 --> 19:38.760] Yeah.

[19:38.760 --> 19:39.760] It's short and narrow.

[19:39.760 --> 19:40.760] There's no...

[19:40.760 --> 19:44.880] And then there's your cochlea, your inner ear, and then there's your brainstem.

[19:44.880 --> 19:48.560] You know, it's just, there's no place for the snake body to be.

[19:48.560 --> 19:50.200] So there's clearly no snake body there, right?

[19:50.200 --> 19:51.480] That's what we could say for sure.

[19:51.480 --> 19:56.880] Whether it's CG or a recently decapitated head or whatever, there's no body attached

[19:56.880 --> 19:57.880] to it.

[19:57.880 --> 19:58.880] It's an illusion.

[19:58.880 --> 20:01.440] It's an illusion, right?

[20:01.440 --> 20:06.560] It's surgeon, in quotes, struggles to remove live snake from woman's ear.

[20:06.560 --> 20:09.160] It's a surgeon in quotes.

[20:09.160 --> 20:10.160] Yeah.

[20:10.160 --> 20:15.600] Because apparently it started as a clip to Facebook, posted on September 1st by an

[20:15.600 --> 20:21.280] India-based social media star named Chandan Singh, or 20,000 followers, whatever.

[20:21.280 --> 20:26.900] And surgeon, it was written in a foreign language, I can't read it, but the word surgeon was

[20:26.900 --> 20:27.940] in there.

[20:27.940 --> 20:31.740] And also in quotes, it says the snake has gone in the ear.

[20:31.740 --> 20:35.800] So that's why surgeon is quoted the way it is.

[20:35.800 --> 20:37.140] That guy's not a surgeon.

[20:37.140 --> 20:41.660] Or if he is, he's not trying to remove that snake skin because if he were, he would freaking

[20:41.660 --> 20:42.660] remove it.

[20:42.660 --> 20:47.880] And the other thing is, if he removed the snake, why are we not seeing that portion

[20:47.880 --> 20:48.880] of the video?

[20:48.880 --> 20:49.880] Right.

[20:49.880 --> 20:55.560] Why is it so conveniently cut before you get to see the head pop out or whatever?

[20:55.560 --> 21:00.800] And after the removal of something other than just poking the snake head.

[21:00.800 --> 21:06.800] But sitting there and allowing yourself to be used like that for this purpose is heroic,

[21:06.800 --> 21:08.760] brave or weird.

[21:08.760 --> 21:14.320] I've seen people do weirder, grosser things on the internet, so not surprising.

[21:14.320 --> 21:18.000] All right, let's move on to some news items.

News Items

What Children Believe (21:18)

[21:18.000 --> 21:21.200] Kara, you're going to start us off with what children believe.

[21:21.200 --> 21:22.200] Yeah.

[21:22.200 --> 21:26.520] This is one of those like I could approach this two different ways because as I was reading

[21:26.520 --> 21:29.860] the coverage of this, the headlines made it sound kind of juicy.

[21:29.860 --> 21:34.560] And then the more I dug into the actual paper, like the research study that we're about to

[21:34.560 --> 21:38.600] talk about, the more I was like, uh-huh, uh-huh, well, duh.

[21:38.600 --> 21:45.360] And so let's talk a little bit about how this is a well, duh subject, but also why it matters.

[21:45.360 --> 21:54.400] So an article published in Child Development by some Canadian and, I think, U.S. researchers,

[21:54.400 --> 22:02.080] yeah, University of Toronto and also Harvard, were saying kind of do kids believe everything

[22:02.080 --> 22:03.080] that they're told?

[22:03.080 --> 22:07.120] I think any of us, like as you're reading coverage of this, it's really funny to me

[22:07.120 --> 22:10.080] because you're like, have you ever been around a child?

[22:10.080 --> 22:15.680] Like yes, kids are gullible, but yes, kids also are explorers.

[22:15.680 --> 22:18.320] And so the question here, they're observers and they're explorers.

[22:18.320 --> 22:22.200] So the question here is how do those things converge?

[22:22.200 --> 22:24.940] I don't think it's really well answered by this study, but let me tell you what they

[22:24.940 --> 22:26.200] did in the study.

[22:26.200 --> 22:29.520] So there's sort of two paradigms.

[22:29.520 --> 22:33.120] In one of the paradigms, it was quite simple.

[22:33.120 --> 22:38.480] They basically had kids come in and they asked them straightforward questions like, is this

[22:38.480 --> 22:41.600] rock hard or soft?

[22:41.600 --> 22:44.880] And the kids were like, well, the rock's hard.

[22:44.880 --> 22:48.680] Like all of them said that because these were four to seven-year-olds, by the way, because

[22:48.680 --> 22:50.960] even a four-year-old knows that a rock is hard.

[22:50.960 --> 22:53.200] They have seen and felt rocks before.

[22:53.200 --> 22:57.720] And then they sort of randomized them into groups and in one group they were like, yeah,

[22:57.720 --> 22:58.720] it's hard.

[22:58.720 --> 23:02.560] And in the other group they were like, no, this rock is soft.

[23:02.560 --> 23:07.600] And then the researcher was like, oh, quick, I got a phone call, BRB, and left the kids

[23:07.600 --> 23:08.600] in the room.

[23:08.600 --> 23:13.300] And so unbeknownst to the kids, they were being videotaped and their exploratory behavior

[23:13.300 --> 23:14.740] was being observed.

[23:14.740 --> 23:16.280] And that's really what the study was.

[23:16.280 --> 23:22.640] What do the kids do after they're told that there's a surprising piece of information

[23:22.640 --> 23:28.800] that doesn't comport with their preexisting understanding of the world?

[23:28.800 --> 23:29.800] Kids explored.

[23:29.800 --> 23:33.040] I mean, you know, what do you expect them to do?

[23:33.040 --> 23:36.080] I guess you might expect that one or two kids are going to sit there and go, I guess the

[23:36.080 --> 23:39.400] rock's soft, weird, and like never touch the rock.

[23:39.400 --> 23:41.760] But most kids did exactly what you would think they would do.

[23:41.760 --> 23:47.620] They started to observe and explore and see for themselves, see if that adult's claim

[23:47.620 --> 23:50.440] was real.

[23:50.440 --> 23:51.440] What age?

[23:51.440 --> 23:52.440] Four to seven.

[23:52.440 --> 23:53.440] Yeah, sure.

[23:53.440 --> 23:54.440] Yeah.

[23:54.440 --> 24:00.600] What I love about it, though, because they have to summarize the study exactly, is that

[24:00.600 --> 24:07.520] in the pretest or in the pre-experimental condition group, so they ask them all, is

[24:07.520 --> 24:10.320] the rock hard or soft, all the kids said the rock was hard.

[24:10.320 --> 24:15.640] And then in part of the two different groups, one group, no, the rock is soft.

[24:15.640 --> 24:19.280] The other group, yeah, you're right, the rock is hard.

[24:19.280 --> 24:25.680] Most of the kids, it's really funny, they say most but not all of the kids concurred.

[24:25.680 --> 24:29.080] In the group where they were reinforced that the rock was hard, most but not all of the

[24:29.080 --> 24:32.620] kids continued to concur that the rock was hard.

[24:32.620 --> 24:33.960] This is my favorite part of the study.

[24:33.960 --> 24:36.760] That means at least one child was like, no, the rock's hard.

[24:36.760 --> 24:39.120] And then the adult goes, yeah, you're right, the rock's hard, and the kid goes, I think

[24:39.120 --> 24:40.120] it's soft.

[24:40.120 --> 24:41.120] Yeah, right?

[24:41.120 --> 24:43.240] Which is like, because they had to report it that way, right?

[24:43.240 --> 24:45.120] They said most, not all.

[24:45.120 --> 24:46.640] Anyway, that's an aside.

[24:46.640 --> 24:49.920] So some of the kids said, okay, maybe it is soft.

[24:49.920 --> 24:52.160] Some of the kids said, no, I think it's hard.

[24:52.160 --> 24:55.760] But regardless, they went in and they explored for themselves.

[24:55.760 --> 25:01.420] Then they did another study where they actually kind of compared the differences between the

[25:01.420 --> 25:06.540] younger group, so they sort of arbitrarily split them after the fact into a four to five-year-old

[25:06.540 --> 25:10.460] group and then into a six to seven-year-old group.

[25:10.460 --> 25:15.720] And in that one, they were given the vignettes, specific vignettes.

[25:15.720 --> 25:18.840] I think they were sort of based on the ideas from this first group.

[25:18.840 --> 25:24.600] And in those different vignettes, they asked the kids, what do you do if an adult says

[25:24.600 --> 25:28.140] to you, the sponge is harder than the rock?

[25:28.140 --> 25:32.160] What do you do if an adult says to you, blah, blah, blah?

[25:32.160 --> 25:36.680] And so they presented the kids with these vignettes of kind of unbelievable claims or

[25:36.680 --> 25:41.560] claims that shouldn't compute for them because they're between the ages of four and seven

[25:41.560 --> 25:45.560] and are old enough to know that a sponge is soft and a rock is hard.

[25:45.560 --> 25:53.340] And what they found was that the older group kind of identified strategies that were more

[25:53.340 --> 25:55.920] specific and more efficient.

[25:55.920 --> 26:01.080] So the older group would say things like, they should touch the sponge and they should

[26:01.080 --> 26:03.340] touch the rock and compare them.

[26:03.340 --> 26:09.280] And the younger group was less likely to have, I guess, a more sophisticated approach to

[26:09.280 --> 26:11.060] that problem solving.

[26:11.060 --> 26:12.680] This is being reported all over the place.

[26:12.680 --> 26:17.620] And basically, the quote that a lot of people are citing is from one of the researchers

[26:17.620 --> 26:20.160] that says, there's still a lot we don't know.

[26:20.160 --> 26:23.320] This is a senior author from the Toronto Lab.

[26:23.320 --> 26:24.880] It's called the Child Lab.

[26:24.880 --> 26:28.320] But what's clear is that children don't believe everything they're told.

[26:28.320 --> 26:31.520] They think about what they've been told, and if they're skeptical, they seek out additional

[26:31.520 --> 26:34.960] information that could confirm or disconfirm it.

[26:34.960 --> 26:38.480] And I think for me, this is the like, well, duh, haven't you ever been around a child

[26:38.480 --> 26:39.840] situation?

[26:39.840 --> 26:44.280] Because children aren't completely naive to the world by the time they're four.

[26:44.280 --> 26:50.020] They've lived for four years, and they've made their own observations.

[26:50.020 --> 26:55.940] And so Steve, you wrote this up, and you took sort of the study, and you said, well, let's

[26:55.940 --> 26:56.940] think broader.

[26:56.940 --> 27:00.040] Because of course, the findings, the outcome findings of the study are not surprising.

[27:00.040 --> 27:02.400] It's pretty narrow and not surprising, yeah.

[27:02.400 --> 27:03.900] It's super narrow.

[27:03.900 --> 27:06.240] It's really trying to test, are kids ultra gullible?

[27:06.240 --> 27:11.000] And it's like, well, yeah, sometimes they are, but obviously, sometimes they're not.

[27:11.000 --> 27:15.800] And then, you know, the bigger question is, you know, do kids believe everything they're

[27:15.800 --> 27:20.440] told by adults, kind of when does that start to change?

[27:20.440 --> 27:24.760] And sort of what are the factors, I think, that are involved here?

[27:24.760 --> 27:29.740] If the paradigm had been something that was a little bit vaguer or harder to confirm,

[27:29.740 --> 27:32.200] we may have seen a different outcome.

[27:32.200 --> 27:39.160] Very often, we're running into claims that we can't confirm ourselves, that we have to

[27:39.160 --> 27:42.880] confirm by figuring out who are the experts?

[27:42.880 --> 27:44.480] What are they saying?

[27:44.480 --> 27:46.120] Is there a consensus?

[27:46.120 --> 27:50.060] And this skill is not the skill that the study looked at at all.

[27:50.060 --> 27:54.600] This study looked at very basic scientific reasoning skills.

[27:54.600 --> 27:58.880] And so, I'm a little, like, the headline's fine.

[27:58.880 --> 28:01.760] Children don't believe everything they're told, well, again, duh.

[28:01.760 --> 28:06.040] Or children as, it's hard to lie to children according to scientists, that one's a stretch

[28:06.040 --> 28:07.440] for me.

[28:07.440 --> 28:08.440] Not liking that headline.

[28:08.440 --> 28:14.000] It's hard to lie to children about things that they can directly observe themselves.

[28:14.000 --> 28:17.120] They said, you know, an adult, an authority figure is telling them something.

[28:17.120 --> 28:23.480] But whether the child agreed or not, the child then explored to try and test that observation

[28:23.480 --> 28:24.480] for themselves.

[28:24.480 --> 28:27.920] And I think that is a fundamentally important aspect of this study.

[28:27.920 --> 28:32.360] But I don't think there's a lot of inferences that you can draw from it about how to develop

[28:32.360 --> 28:34.920] really strong critical thinking skills later in life.

[28:34.920 --> 28:37.240] Yeah, this one study is such a tiny slice.

[28:37.240 --> 28:39.880] You have to look at it in the context of so much of the research.

[28:39.880 --> 28:43.040] I didn't talk about it in my write-up, though, but we also, there's also research looking

[28:43.040 --> 28:51.520] at like if an adult tells a child, like gives them a toy and shows them how to use the toy,

[28:51.520 --> 28:57.040] the child will use the toy in the way that they were shown, even if it's a very narrow,

[28:57.040 --> 28:58.920] simplistic way of using the toy.

[28:58.920 --> 29:03.880] If they're given the toy with no direction, they will be more creative and they'll explore

[29:03.880 --> 29:07.760] and they'll use it in different ways and they'll try out different things.

[29:07.760 --> 29:14.520] So one factor is does it contradict or conflict with things they already know or are they

[29:14.520 --> 29:18.200] being told information in a vacuum?

[29:18.200 --> 29:23.460] And also, as you say, is it part of, like, are they being told, this is part of our identity

[29:23.460 --> 29:24.840] of who we are, right?

[29:24.840 --> 29:29.400] Obviously, parents convey religious beliefs to children and children believe them.

[29:29.400 --> 29:32.860] Most people have the religious faith that they were raised in.

[29:32.860 --> 29:36.520] And that's one of those things that you can't just turn around and observe for yourself.

[29:36.520 --> 29:38.840] Yeah, you can't say, is God there?

[29:38.840 --> 29:42.520] You know, there's nothing you can do to test that.

[29:42.520 --> 29:47.440] But what's interesting about testing kids, first of all, it's interesting to say, when

[29:47.440 --> 29:50.320] do certain modules, you know, engage?

[29:50.320 --> 29:52.640] When do they start to do things?

[29:52.640 --> 29:56.720] And you can see how they get more sophisticated and nuanced and how they approach things.

[29:56.720 --> 30:03.240] But also, there is this question about like whether or not, you know, children are like

[30:03.240 --> 30:09.600] more curious and more sort of questioning younger, and then it gets beaten out of them

[30:09.600 --> 30:12.800] by the desire to conform to society.

[30:12.800 --> 30:13.800] Right.

[30:13.800 --> 30:16.120] And is there something almost bimodal there, right?

[30:16.120 --> 30:19.800] Where when they're so young, they believe everything they're told because they don't

[30:19.800 --> 30:23.760] have context and they don't have anything to connect it to.

[30:23.760 --> 30:26.120] And they have no reason to question.

[30:26.120 --> 30:30.320] And then as they get older, they start to be more questioning.

[30:30.320 --> 30:35.040] And then as they get even older, still, they want to conform and belong because the idea

[30:35.040 --> 30:40.100] of social in-group, out-group status becomes more salient to them.

[30:40.100 --> 30:42.960] Perhaps it does kind of follow that to some extent.

[30:42.960 --> 30:44.840] I think creativity is the same way.

[30:44.840 --> 30:47.720] Creativity, I mean, it's interesting, you were talking about the research about giving

[30:47.720 --> 30:48.720] a child a toy.

[30:48.720 --> 30:50.260] And this is maybe a little bit of a departure.

[30:50.260 --> 30:55.060] But I worked with a professor who was like fascinated by creativity research.

[30:55.060 --> 30:58.480] And I hated it because I was like, how do you define that?

[30:58.480 --> 30:59.480] Oh, my gosh.

[30:59.480 --> 31:00.480] It's so vague.

[31:00.480 --> 31:02.000] It's all over the place.

[31:02.000 --> 31:09.840] And they often talked about like, give a child anything, a piece of equipment, a paperclip,

[31:09.840 --> 31:13.360] and have them list all the things it can be.

[31:13.360 --> 31:17.580] And for me, I would get frustrated when people would say it's super creative if they just

[31:17.580 --> 31:21.400] made a list of things that it could never be.

[31:21.400 --> 31:27.040] But creativity seemed really in that sweet spot when they would think of things that

[31:27.040 --> 31:31.400] were outside of the box, but they still used some amount of constraint.

[31:31.400 --> 31:33.680] Like a paperclip can't be an airplane.

[31:33.680 --> 31:35.200] It just can't.

[31:35.200 --> 31:37.440] But it can be this, this, this, and this.

[31:37.440 --> 31:41.460] And maybe those are things you wouldn't think of if you're always thinking inside the box.

[31:41.460 --> 31:48.280] And so there does seem to be some amount of developmental correlation there, right?

[31:48.280 --> 31:52.920] The older that you get, the more constrained your thinking becomes.

[31:52.920 --> 31:57.320] And so it is that sort of like the more conformist you are, the more it's being beaten out of

[31:57.320 --> 31:58.320] you.

[31:58.320 --> 32:02.360] But I think that also comes not just with age, but it comes with the amount of time

[32:02.360 --> 32:04.760] you spend in a certain paradigm as well.

[32:04.760 --> 32:09.920] Because you see this a lot with people who work in a certain field being brought into

[32:09.920 --> 32:13.600] another field to try to solve problems in that field.

[32:13.600 --> 32:16.240] And it's amazing what happens where they're like, well, did you try this?

[32:16.240 --> 32:20.760] And people are like, oh, my god, how have none of us ever thought of that before?

[32:20.760 --> 32:24.480] Because that's not how you were trained to think.

[32:24.480 --> 32:25.480] Fresh approach to it.

[32:25.480 --> 32:26.480] Yeah.

[32:26.480 --> 32:30.920] I always think of the fact that the Iceman, you know, they didn't know how he died.

[32:30.920 --> 32:39.640] And meanwhile, there's an arrowhead clearly visible on the X-ray in his chest that they

[32:39.640 --> 32:44.160] looked at for years and didn't see it because they weren't looking for it.

[32:44.160 --> 32:45.160] No, no.

[32:45.160 --> 32:46.880] They kept saying, wow, what is this arrow pointing to?

[32:46.880 --> 32:51.160] It must be a clue as to what might have killed him.

[32:51.160 --> 32:52.160] Someone's like, hey, what's that arrowhead?

[32:52.160 --> 32:53.160] You know, whatever.

[32:53.160 --> 33:00.960] Or what's the other one, the gorilla and the scan of the monkey in the brain?

[33:00.960 --> 33:06.240] They did a research study where they showed radiologists a CT scan of the chest.

[33:06.240 --> 33:09.880] And they said, tell us what pathology you see there.

[33:09.880 --> 33:14.000] And there was literally a gorilla in the middle of the chest.

[33:14.000 --> 33:15.000] And nobody found it.

[33:15.000 --> 33:16.000] Oh, that's great.

[33:16.000 --> 33:17.000] Nobody.

[33:17.000 --> 33:20.640] It's like a large percentage of them didn't see it because, of course, they're not looking

[33:20.640 --> 33:21.640] for it.

[33:21.640 --> 33:22.640] They're looking for what they know to be pathology.

[33:22.640 --> 33:23.640] Yeah.

[33:23.640 --> 33:24.640] That's based on that classic.

[33:24.640 --> 33:25.640] Yeah.

[33:25.640 --> 33:26.640] It's inattentional blindness.

[33:26.640 --> 33:27.640] There's a classic psych experiment that you can Google it.

[33:27.640 --> 33:28.640] Like, you can watch the video.

[33:28.640 --> 33:32.160] It's a super classic video where they tell people, count how many times the ball is passed.

[33:32.160 --> 33:36.400] And it's a really complex video where a basketball is being passed amongst a lot of people.

[33:36.400 --> 33:39.320] You really have to focus to count the passes.

[33:39.320 --> 33:41.400] And while focusing on it, it's inattentional blindness.

[33:41.400 --> 33:43.640] That's the phenomenon that they're highlighting.

[33:43.640 --> 33:47.360] While you're focusing on the ball, you literally don't see the gorilla walk completely through

[33:47.360 --> 33:48.360] the scene.

[33:48.360 --> 33:49.360] It's amazing.

[33:49.360 --> 33:50.360] It's amazing.

[33:50.360 --> 33:51.360] I know.

[33:51.360 --> 33:54.440] And professors love to show this to first-year psych students and go, anybody notice anything

[33:54.440 --> 33:56.800] weird about the video?

[33:56.800 --> 33:59.240] It's pretty cool how many people are like, what do you mean?

[33:59.240 --> 34:01.880] About 30% see it, 30% or 40%.

[34:01.880 --> 34:02.880] Yeah.

[34:02.880 --> 34:03.880] Yeah.

[34:03.880 --> 34:05.920] But that's why they used a gorilla in the x-ray study.

[34:05.920 --> 34:06.920] Yeah.

[34:06.920 --> 34:07.920] Because it was like a nod.

[34:07.920 --> 34:10.920] An homage to that original gorilla video.

[34:10.920 --> 34:11.920] Yeah.

[34:11.920 --> 34:12.920] Fascinating.

[34:12.920 --> 34:15.800] Well, everyone, we're going to take a quick break from our show to talk about one of our

[34:15.800 --> 34:18.680] sponsors this week, BetterHelp.

[34:18.680 --> 34:21.640] There are so many reasons to go to therapy.

[34:21.640 --> 34:23.760] I mean, too many to list.

[34:23.760 --> 34:28.800] And I think all of us know that whether we're struggling with a mental illness, with an

[34:28.800 --> 34:35.120] actual diagnosis, or whether we're dealing with an experience in our lives, that we just

[34:35.120 --> 34:38.640] need a little bit of support, a little bit of guidance through.

[34:38.640 --> 34:43.760] These are all valid reasons to talk to somebody, and BetterHelp makes it super easy because,

[34:43.760 --> 34:45.560] of course, this is online therapy.

[34:45.560 --> 34:46.560] Yeah.

[34:46.560 --> 34:50.640] Kara, in my personal experience, going to therapy, it's doing multiple things at the

[34:50.640 --> 34:51.640] same time.

[34:51.640 --> 34:53.720] I just feel good after I go to therapy.

[34:53.720 --> 34:55.680] It's like I'm unloading.

[34:55.680 --> 35:01.200] And along with that, I'm learning skills to help me deal with my own anxiety and depression,

[35:01.200 --> 35:02.840] which it's a double win.

[35:02.840 --> 35:06.320] So when you want to be a better problem solver, therapy can get you there.

[35:06.320 --> 35:11.600] Visit BetterHelp.com slash SGU today to get 10% off your first month.

[35:11.600 --> 35:15.000] That's Better H-E-L-P dot com slash SGU.

[35:15.000 --> 35:16.160] All right, guys.

[35:16.160 --> 35:17.640] Let's get back to the show.

[35:17.640 --> 35:18.760] All right.

[35:18.760 --> 35:19.760] Let's move on.

Health Effects of Gas Stoves (35:18)

[35:19.760 --> 35:23.680] Jay, is my gas stove slowly killing me?

[35:23.680 --> 35:25.400] It is, isn't it, Jay?

[35:25.400 --> 35:26.400] Check the gas.

[35:26.400 --> 35:30.320] Do you hear it moving around the house at night when you're in bed?

[35:30.320 --> 35:31.320] Is that what it is?

[35:31.320 --> 35:32.320] Is that my noise?

[35:32.320 --> 35:37.160] Yeah, the question is, is having a gas stove in your house dangerous to your health?

[35:37.160 --> 35:42.000] Yeah, unfortunately, we've known this for a while, but recent research strongly suggests

[35:42.000 --> 35:43.680] the answer is yes.

[35:43.680 --> 35:48.360] Gas stoves are considered, this is my opinion, but lots of people feel this way.

[35:48.360 --> 35:52.440] Gas stoves are considered the best of all versions of stoves out there.

[35:52.440 --> 35:53.440] Disagree.

[35:53.440 --> 35:54.440] Induction.

[35:54.440 --> 35:58.880] I'll explain to you right now why I like it better than induction.

[35:58.880 --> 36:04.000] Because you have more control over the temperature because I can visually tell what my... Once

[36:04.000 --> 36:09.780] you learn your stove, I can visually tell where it's at just by looking at the flame.

[36:09.780 --> 36:13.600] You think you have more control because you can visually see the flame height once you

[36:13.600 --> 36:19.900] get used to it as opposed to being able to dial in a digital display?

[36:19.900 --> 36:22.600] Of course you have more control when you're using technology.

[36:22.600 --> 36:24.800] Yeah, but this is gas.

[36:24.800 --> 36:29.480] I love you, Jay.

[36:29.480 --> 36:31.080] You don't have more control.

[36:31.080 --> 36:32.080] You just think you do.

[36:32.080 --> 36:33.080] It's also instant heat.

[36:33.080 --> 36:34.600] You like the flame, go vroom, vroom, vroom.

[36:34.600 --> 36:38.520] You don't have to wait for it to heat up, which is another thing that I like about it.

[36:38.520 --> 36:39.520] Induction is instant.

[36:39.520 --> 36:40.520] It boils water in 90 seconds.

[36:40.520 --> 36:41.520] It's true.

[36:41.520 --> 36:42.520] I had one.

[36:42.520 --> 36:43.520] Look into it.

[36:43.520 --> 36:45.800] You never have invited me over to show me.

[36:45.800 --> 36:46.800] You've never done this.

[36:46.800 --> 36:47.800] I know.

[36:47.800 --> 36:48.800] Sorry.

[36:48.800 --> 36:49.800] Sorry.

[36:49.800 --> 36:50.800] See?

[36:50.800 --> 36:51.800] You're talking like we hang out.

[36:51.800 --> 36:53.800] Induction can be very fast.

[36:53.800 --> 36:57.640] The one thing I don't like about induction is that you have to use special pottery.

[36:57.640 --> 36:59.320] Not special, just magnetic.

[36:59.320 --> 37:00.560] So long as everything's magnetic.

[37:00.560 --> 37:01.560] And yes, okay.

[37:01.560 --> 37:04.340] Go through your cookware.

[37:04.340 --> 37:05.560] You can't use all your cookware.

[37:05.560 --> 37:09.120] You got to now have all cookware that's consistent with the induction.

[37:09.120 --> 37:11.240] Kara, this is a good throw down.

[37:11.240 --> 37:13.480] We should have George make this a throw down for the Extravaganza.

[37:13.480 --> 37:14.480] We should have.

[37:14.480 --> 37:15.480] Oh, it'd be great.

[37:15.480 --> 37:20.440] I just can hear the sounds of all of the European listeners nodding their heads vigorously

[37:20.440 --> 37:24.800] because for some reason it's so caught on in Europe and like here in the U.S. induction

[37:24.800 --> 37:25.800] is really rare.

[37:25.800 --> 37:28.520] Anyway, I will not continue to fight for induction.

[37:28.520 --> 37:30.280] I have an induction stove top.

[37:30.280 --> 37:33.320] I have a gas stove, but I have an induction plate.

[37:33.320 --> 37:37.040] Oh yeah, that's not uncommon to have like that, the hybrid kind of thing.

[37:37.040 --> 37:38.040] Oh, so you have a hybrid.

[37:38.040 --> 37:39.040] Let's circle it back.

[37:39.040 --> 37:45.320] So the important topic here tonight is whether or not... the gas debate, we'll put down for a

[37:45.320 --> 37:46.320] little bit.

[37:46.320 --> 37:47.880] The question is, does it pollute the air in your home?

[37:47.880 --> 37:48.880] And the answer is yes.

[37:48.880 --> 37:50.680] Let's get into the details.

[37:50.680 --> 37:56.960] Gas stoves give off nitrogen dioxide when combustion happens and nitrogen dioxide exposure

[37:56.960 --> 38:01.280] in the home is associated with an increase in asthma symptoms.

[38:01.280 --> 38:06.080] Also it's associated with a higher level of use of something called a rescue inhaler for

[38:06.080 --> 38:07.080] children, right?

[38:07.080 --> 38:10.600] So that's doing things to people's lungs.

[38:10.600 --> 38:11.600] So that's bad.

[38:11.600 --> 38:15.080] And as far as asthmatic adults go, they're affected as well.

[38:15.080 --> 38:21.080] And breathing in nitrogen dioxide increases the occurrences or worsening of chronic obstructive

[38:21.080 --> 38:22.920] pulmonary disease.

[38:22.920 --> 38:25.240] So you know, that's not good.

[38:25.240 --> 38:26.240] Definitely not good.

[38:26.240 --> 38:29.560] Keep in mind that nitrogen dioxide can come from the outdoors as well, right guys?

[38:29.560 --> 38:31.400] It's not just coming from your stove.

[38:31.400 --> 38:35.040] People who live near busy roads, you know, they're exposed to higher levels as well.

[38:35.040 --> 38:39.480] Indoor emissions of nitrogen dioxide are typically greater than outdoor sources though.

[38:39.480 --> 38:41.100] And this is important.

[38:41.100 --> 38:44.520] For example, during times when people cook, right?

[38:44.520 --> 38:48.400] It's dinnertime and you get in there and you start turning the oven on, you're cooking.

[38:48.400 --> 38:53.960] We call this peak exposure and half of those people that are cooking are exposed to higher

[38:53.960 --> 38:59.080] levels than what health standards suggest we should be

[38:59.080 --> 39:00.280] exposed to.

[39:00.280 --> 39:01.280] That's not good.

[39:01.280 --> 39:02.320] That's a lot of people.

[39:02.320 --> 39:07.440] The obvious question is how could one stove expose you to more nitrogen dioxide than all

[39:07.440 --> 39:10.140] of the cars that are driving around where you live?

[39:10.140 --> 39:14.240] And the simple answer is that there is a significant amount of air outside compared to the air

[39:14.240 --> 39:16.360] you have inside your home.

[39:16.360 --> 39:21.800] All of that pollution outside is dramatically diluted in the unbelievable amount of air

[39:21.800 --> 39:22.800] that exists outside.

[39:22.800 --> 39:27.640] But when you're inside your house, you have a very small amount of air.

[39:27.640 --> 39:33.500] And when that air gets polluted in any way, it's significant, you know, and it can stay

[39:33.500 --> 39:34.680] in your house.

[39:34.680 --> 39:39.000] So another factor to consider is that the layout of a home also impacts your exposure.

[39:39.000 --> 39:44.040] So things like a stove exhaust, you know, like if you have a range hood with a vent

[39:44.040 --> 39:48.880] on it, you know, if these vent outside, I'm not talking about the microwave one, which

[39:48.880 --> 39:53.320] just blows air, you know, away from the stove, it has to vent outside.

[39:53.320 --> 39:57.920] Or if you happen to have a well-ventilated home or even a larger home that has a lot

[39:57.920 --> 40:01.720] more airspace in it, this could help limit your exposure to what's

[40:01.720 --> 40:05.320] coming out of your stove.

[40:05.320 --> 40:07.340] So a lot has to do with air circulation.

[40:07.340 --> 40:10.720] If your kitchen is in a small, non-ventilated area.

[40:10.720 --> 40:15.000] This is very typical in apartments or, you know, if you're living in a city, for example,

[40:15.000 --> 40:19.460] you're in a small apartment, the kitchen could be in a little, you know, galley way, right?

[40:19.460 --> 40:20.460] That's very bad.

[40:20.460 --> 40:25.320] It's very, very likely you'll get greater exposure than in a kitchen where the

[40:25.320 --> 40:29.360] air in the kitchen can mix with, you know, the living room and dining room or like an

[40:29.360 --> 40:30.360] open kind of thing.

[40:30.360 --> 40:34.000] I have that kind of layout in my house where there's not a lot of walls.

[40:34.000 --> 40:37.840] It's just like, you know, connected rooms that are open for the most part.

[40:37.840 --> 40:41.000] And that happens to be better because then the air in your kitchen will mix with the

[40:41.000 --> 40:44.700] other air and it dilutes down all of the toxins.

[40:44.700 --> 40:49.860] So simply opening a kitchen window can dramatically decrease the amount of nitrogen dioxide exposure

[40:49.860 --> 40:51.360] that you have in your kitchen.

[40:51.360 --> 40:54.560] So, you know, keep that in mind, you know, just crack open a window, get it open or have

[40:54.560 --> 40:59.000] a fan sucking air out, you know, while you're cooking. That can make a big difference.

[40:59.000 --> 41:00.000] All right.

[41:00.000 --> 41:02.900] Now, let's get to the part where things get a little troublesome.

[41:02.900 --> 41:08.240] Even when your stove is off, you could be exposed to pollutants that can affect your

[41:08.240 --> 41:09.240] health.

[41:09.240 --> 41:10.240] That sucks.

[41:10.240 --> 41:15.020] So a study conducted this year concluded that stoves that are not currently in use emit

[41:15.020 --> 41:19.080] a colorless, odorless gas, also known as methane.

[41:19.080 --> 41:20.080] Right.

[41:20.080 --> 41:22.160] Methane is a major component of natural gas.

[41:22.160 --> 41:28.240] A study conducted this year estimated that gas stoves in the United States emitted enough

[41:28.240 --> 41:35.800] methane equal to about four hundred thousand cars in the same time frame.

[41:35.800 --> 41:36.800] That's bad.

[41:36.800 --> 41:41.080] Now, we're talking about little leaks here, like many methane leaks found in the

[41:41.080 --> 41:44.540] home go undetected because it's just a tiny little bit of methane.

[41:44.540 --> 41:47.000] But there happens to be a lot of people.

[41:47.000 --> 41:48.320] So you add up that methane.

[41:48.320 --> 41:53.680] I mean, I lived in Manhattan two times in my life and so many

[41:53.680 --> 41:57.240] times while living in Manhattan, you smell natural gas.

[41:57.240 --> 41:59.440] Walking by a building, you smell natural gas.

[41:59.440 --> 42:03.620] That's because that building was built, you know, a hundred years ago.

[42:03.620 --> 42:08.120] And you know, the pipes that they use to carry the natural gas to the stoves are

[42:08.120 --> 42:10.360] old and they leak and you're just smelling it.

[42:10.360 --> 42:13.840] You go into an apartment building and you're smelling natural gas the whole time you're

[42:13.840 --> 42:14.840] there.

[42:14.840 --> 42:15.840] It's just natural gas.

[42:15.840 --> 42:20.360] And just to be pedantic, the odor is put in the gas; the gas itself is odorless, as

[42:20.360 --> 42:21.360] you said.

[42:21.360 --> 42:22.360] Yeah.

[42:22.360 --> 42:25.680] They actually put a chemical in the natural gas so that it does smell so that

[42:25.680 --> 42:26.680] you can detect it.

[42:26.680 --> 42:28.880] You know, because otherwise it would be odorless.

[42:28.880 --> 42:29.880] That's right, Steven.

[42:29.880 --> 42:30.880] That's very important.

[42:30.880 --> 42:33.700] And a lot of gas leaks are found that way.

[42:33.700 --> 42:39.480] But sometimes that smell is not strong enough and people can't detect small leaks.

[42:39.480 --> 42:40.480] Right.

[42:40.480 --> 42:43.680] Because if you have a very small leak, you're not going to be able to detect that smell

[42:43.680 --> 42:45.400] even though it's there.

[42:45.400 --> 42:49.960] Another study found that five percent of homes had an active natural gas leak significant

[42:49.960 --> 42:53.500] enough to require repair that went undetected.

[42:53.500 --> 42:55.540] You know, that five percent is significant.

[42:55.540 --> 43:01.180] Did you know that benzene is also present in natural gas and it causes cancer?

[43:01.180 --> 43:05.320] So one of the worst case scenarios is to be in a poorly ventilated home that has a natural

[43:05.320 --> 43:10.320] gas leak because you're just breathing this in all the time.

[43:10.320 --> 43:11.320] It's there.

[43:11.320 --> 43:15.580] This leak is constantly putting out this gas 24 hours a day.

[43:15.580 --> 43:17.020] You know, it's not just when you cook.

[43:17.020 --> 43:18.020] It's just happening all the time.

[43:18.020 --> 43:21.240] Now, I'm not saying that you need to get rid of your gas stove.

[43:21.240 --> 43:23.740] It wouldn't be a bad idea, but you don't really need to do it.

[43:23.740 --> 43:28.880] There's some things you could do like, you know, my wife and I just got a new stove about

[43:28.880 --> 43:32.960] a year ago, and I am absolutely not going to get rid of that magical box that I now

[43:32.960 --> 43:35.640] have in my kitchen because I adore this stove.

[43:35.640 --> 43:36.640] But that's just me.

[43:36.640 --> 43:39.160] You know, what should you do if you have a gas stove in your home?

[43:39.160 --> 43:43.800] Well, you know, it's not a bad idea to improve the overall ventilation in your house,

[43:43.800 --> 43:47.360] open windows, you know, particularly when you're cooking.

[43:47.360 --> 43:50.920] Use your kitchen ventilation over your stove if you have it.

[43:50.920 --> 43:51.920] That can help a lot.

[43:51.920 --> 43:55.800] And if you have someone in your home that does have some type of breathing condition,

[43:55.800 --> 43:59.920] then, you know, it very well might be a good idea for you to get rid of your gas stove

[43:59.920 --> 44:02.600] and go with a magnetic inductive stove, Kara.

[44:02.600 --> 44:03.600] All right.

[44:03.600 --> 44:05.640] I said I said it.

[44:05.640 --> 44:08.200] Kara, you live in the United States, correct?

[44:08.200 --> 44:09.200] I do.

[44:09.200 --> 44:11.540] I live in Fort Lauderdale now.

[44:11.540 --> 44:12.540] That's right.

[44:12.540 --> 44:13.680] You moved to Florida for your internship.

[44:13.680 --> 44:18.080] Well, if you live in the United States, I'm sure other countries have

[44:18.080 --> 44:19.320] incentives as well.

[44:19.320 --> 44:24.160] But specifically in the United States, if you look into the Inflation Reduction Act

[44:24.160 --> 44:30.200] of 2022 that Biden passed recently, this offers rebates if you purchase certain high efficiency

[44:30.200 --> 44:37.720] electric appliances for your home so you can get a break on the cost of some non-gas appliances,

[44:37.720 --> 44:38.720] which is a good idea.

[44:38.720 --> 44:44.280] But I'd sort of put the gas stove thing into a little bit of perspective as well.

[44:44.280 --> 44:48.800] Everything you're saying is true, but the relative risk here is actually fairly small

[44:48.800 --> 44:50.840] in terms of like the asthma risk.

[44:50.840 --> 44:54.680] And if you don't have asthma or somebody in the home with asthma, I haven't seen

[44:54.680 --> 45:00.560] any data saying there's any other health risk, asthma and COPD, I'll say, you know, chronic

[45:00.560 --> 45:02.160] obstructive pulmonary disease.

[45:02.160 --> 45:05.820] You also have to put it into the context of the fact that there's lots of other pollutants

[45:05.820 --> 45:15.320] in the home, pet dander, candles, your fireplace, insects, basically they leave bits of themselves

[45:15.320 --> 45:21.640] all over the place, flatulence, dust, flatulence, especially in Jay's home.

[45:21.640 --> 45:26.120] So there's lots of sources of pollutants inside the home.

[45:26.120 --> 45:30.320] It's not like if you get rid of your gas stove, the air inside your home is going

[45:30.320 --> 45:32.600] to be magically perfect.

[45:32.600 --> 45:37.380] The best thing to do for all of those things is to have good ventilation.

[45:37.380 --> 45:41.880] Just think like we ventilate our home whenever we can, like whenever the weather is permitting,

[45:41.880 --> 45:46.640] we try to have as much ventilation going through the home as we possibly can, especially in

[45:46.640 --> 45:49.400] the downstairs kitchen area, you know.

[45:49.400 --> 45:50.520] So that's a good idea.

[45:50.520 --> 45:54.440] You could run a fan, you know, which should be pointed at an open window just to get the

[45:54.440 --> 45:55.440] air circulating.

[45:55.440 --> 46:00.500] It's got to go outside the house, right, you know, just moving air around inside the house.